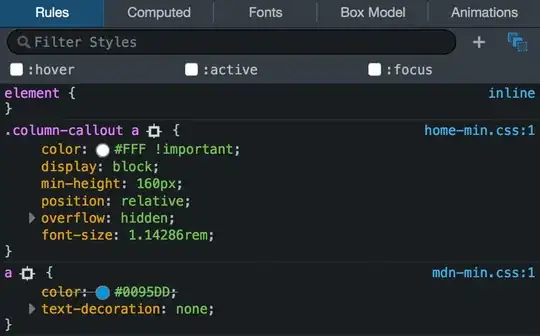

How can I do antialiasing on triangles on the entire render? Should I put it on the fragmentShader? Is there any other good solution to improve this sort of thing?

Here is my "view", with very jagged edges (not very nice).

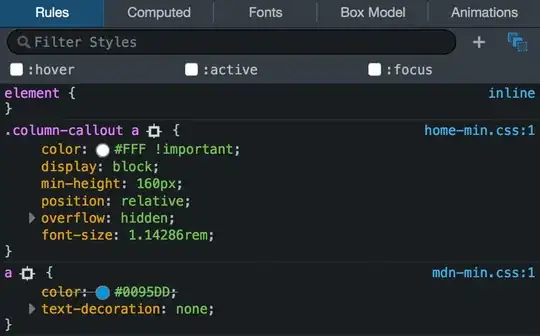

After doing some deeper research, I found that it's in fact pretty simple. The most common approach is multisampling: conceptually, the scene is rendered as if the screen were 4 times bigger (or even more than 4 times), so each final pixel is covered by several samples. After rendering at this higher resolution, the GPU takes the average of the samples in that area and sets the pixel color based on that.
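To make the averaging step concrete, here is a minimal CPU-side sketch in plain Java. It is only an illustration of the idea, not what the GPU driver actually runs: the class name `BoxDownsample`, the method `downsample2x`, and the grayscale `int[][]` image layout are all assumptions made up for this example.

```java
// Illustration only: shrink an image that was rendered at twice the width
// and twice the height (4 samples per final pixel) by averaging each 2x2 block.
// BoxDownsample and downsample2x are hypothetical names, not part of any API.
final class BoxDownsample {

    // hi[y][x] holds grayscale values (0..255) of the oversized render.
    // Assumes hi is rectangular with even width and height.
    static int[][] downsample2x(int[][] hi) {
        int height = hi.length / 2;
        int width = hi[0].length / 2;
        int[][] lo = new int[height][width];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                // Sum the four samples that cover this output pixel.
                int sum = hi[2 * y][2 * x]
                        + hi[2 * y][2 * x + 1]
                        + hi[2 * y + 1][2 * x]
                        + hi[2 * y + 1][2 * x + 1];
                // Integer average; an edge pixel ends up with an in-between shade.
                lo[y][x] = sum / 4;
            }
        }
        return lo;
    }
}
```

A pixel whose four samples are all inside the triangle keeps the full color, while a pixel on the edge with only one sample inside gets roughly a quarter of it, which is what softens the staircase.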

It's pretty easy to enable this on Android with this config chooser class:

https://code.google.com/p/gdc2011-android-opengl/source/browse/trunk/src/com/example/gdc11/MultisampleConfigChooser.java
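For readers who cannot open the link, the general idea of such a config chooser is to ask EGL for a surface configuration with a sample buffer. The sketch below only builds the attribute list and is not the code from the linked class. `MultisampleAttribs` and `configSpec` are hypothetical names, the RGB565 / 16-bit depth values are an assumption, and the EGL constants are copied from the Khronos EGL headers so that the snippet compiles without the Android SDK (on a device you would use `EGL10.EGL_RED_SIZE` and friends instead).

```java
// Sketch: build an EGL attribute list that requests multisampling.
// MultisampleAttribs and configSpec are hypothetical helper names.
final class MultisampleAttribs {
    // Values from the Khronos EGL headers, repeated here so the sketch
    // is self-contained. On Android, prefer the constants in EGL10.
    static final int EGL_BLUE_SIZE = 0x3022;
    static final int EGL_GREEN_SIZE = 0x3023;
    static final int EGL_RED_SIZE = 0x3024;
    static final int EGL_DEPTH_SIZE = 0x3025;
    static final int EGL_SAMPLES = 0x3031;
    static final int EGL_SAMPLE_BUFFERS = 0x3032;
    static final int EGL_NONE = 0x3038;
    static final int EGL_RENDERABLE_TYPE = 0x3040;
    static final int EGL_OPENGL_ES2_BIT = 0x0004;

    // samples > 1 requests a multisampled config; otherwise a plain one,
    // which can serve as a fallback when the GPU has no matching config.
    static int[] configSpec(int samples) {
        if (samples > 1) {
            return new int[] {
                EGL_RED_SIZE, 5,
                EGL_GREEN_SIZE, 6,
                EGL_BLUE_SIZE, 5,
                EGL_DEPTH_SIZE, 16,
                EGL_RENDERABLE_TYPE, EGL_OPENGL_ES2_BIT,
                EGL_SAMPLE_BUFFERS, 1,   // ask for a multisample buffer
                EGL_SAMPLES, samples,    // samples per pixel, e.g. 2 or 4
                EGL_NONE                 // terminator required by EGL
            };
        }
        return new int[] {
            EGL_RED_SIZE, 5,
            EGL_GREEN_SIZE, 6,
            EGL_BLUE_SIZE, 5,
            EGL_DEPTH_SIZE, 16,
            EGL_RENDERABLE_TYPE, EGL_OPENGL_ES2_BIT,
            EGL_NONE
        };
    }
}
```

On Android, an array like this would be passed to `EGL10.eglChooseConfig` inside a `GLSurfaceView.EGLConfigChooser`, which is installed with `GLSurfaceView.setEGLConfigChooser` before the renderer is set. If the multisampled request returns no configs, retrying with `configSpec(1)` is one reasonable fallback.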

However, you should keep in mind that rendering can take up to 4 times longer (or more, with more samples), which means more processing time and possibly a lower FPS.

Also, if you are emulating an Android device with OpenGL, find out whether your GPU supports this kind of multisampling. Mine, for example, doesn't (Tegra).

Here is the final result, with and without multisampling: