SOMs are mainly a dimensionality reduction algorithm, not a classification tool. They are used for the dimensionality reduction just like PCA and similar methods (as once trained, you can check which neuron is activated by your input and use this neuron's position as the value), the only actual difference is their ability to preserve a given topology of output representation.

So what is SOM actually producing is a mapping from your input space X to the reduced space Y (the most common is a 2d lattice, making Y a 2 dimensional space). To perform actual classification you should transform your data through this mapping, and run some other, classificational model (SVM, Neural Network, Decision Tree, etc.).

In other words - SOMs are used for finding other representation of the data. Representation, which is easy for further analyzis by humans (as it is mostly 2dimensional and can be plotted), and very easy for any further classification models. This is a great method of visualizing highly dimensional data, analyzing "what is going on", how are some classes grouped geometricaly, etc.. But they should not be confused with other neural models like artificial neural networks or even growing neural gas (which is a very similar concept, yet giving a direct data clustering) as they serve a different purpose.

Of course one can use SOMs directly for the classification, but this is a modification of the original idea, which requires other data representation, and in general, it does not work that well as using some other classifier on top of it.

EDIT

There are at least few ways of visualizing the trained SOM:

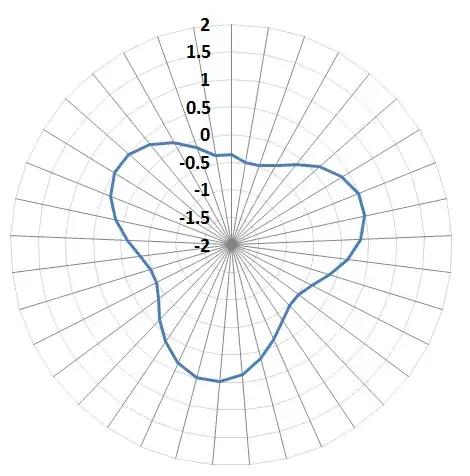

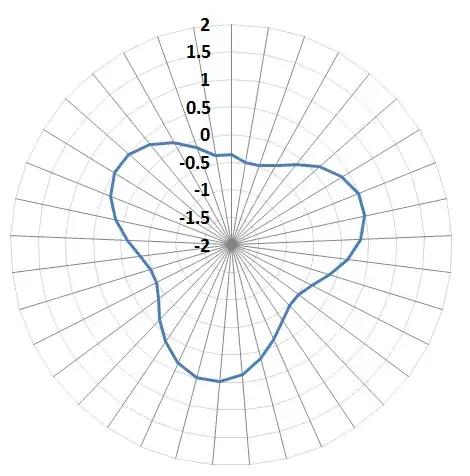

- one can render the

SOM's neurons as points in the input space, with edges connecting the topologicaly close ones (this is possible only if the input space has small number of dimensions, like 2-3)

- display data classes on the

SOM's topology - if your data is labeled with some numbers {1,..k}, we can bind some k colors to them, for binary case let us consider blue and red. Next, for each data point we calculate its corresponding neuron in the SOM and add this label's color to the neuron. Once all data have been processed, we plot the SOM's neurons, each with its original position in the topology, with the color being some agregate (eg. mean) of colors assigned to it. This approach, if we use some simple topology like 2d grid, gives us a nice low-dimensional representation of data. In the following image, subimages from the third one to the end are the results of such visualization, where red color means label 1("yes" answer) andbluemeans label2` ("no" answer)

- onc can also visualize the inter-neuron distances by calculating how far away are each connected neurons and plotting it on the

SOM's map (second subimage in the above visualization)

- one can cluster the neuron's positions with some clustering algorithm (like K-means) and visualize the clusters ids as colors (first subimage)