I had done it in 3 steps this way:

- Dump the MillionRow table into HashDump table, which has only 2 columns: Id int identity PK, and Hash varbinary(20). This table shall be indexed on its Hash column.

- Dump the HashDump table into HashUni ordered by Hash column. In between would be a Script Component that check whether the current row's Hash column value is same as the previous row. If same, direct row to Duplicate output, else Unique. This way you can log the Duplicate even if what you need is just the Unique.

- Dump the MillionRow table into MillionUni table. In between would be a Lookup Component that uses HashUni to tell which row is Unique.

This method allows me to log each duplicates with a message such as: "Row 1000 is a duplicate of row 100".

I have not found a better way than this. Earlier, I made a unique index on MillionUni, to dump directly the MillionRow into it, but I was not able to use "fast load", which was way too slow.

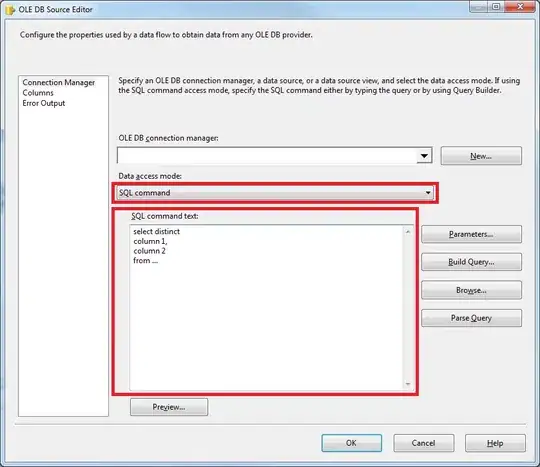

Here is one way to populate the Hash column:

public override void Input0_ProcessInputRow(Input0Buffer Row)

{

StringBuilder sb = new StringBuilder();

sb.Append(Row.Col1String_IsNull ? "" : Row.Col1String); sb.Append("|");

sb.Append(Row.Col2Num_IsNull ? "" : Row.Col2Num.ToString()); sb.Append("|");

sb.Append(Row.Col3Date_IsNull ? "" : Row.Col3Date.ToString("yyyy-MM-dd"));

var sha1Provider = HashAlgorithm.Create("SHA1");

Row.Hash = sha1Provider.ComputeHash(Encoding.UTF8.GetBytes(sb.ToString()));

}

If 200 columns prove to be a chore for you, part of this article shall inspire you. It is making a loop for the values of all column objects into a single string.

And to compare the Hash, use this method:

byte[] previousHash;

int previousRowNo;

public override void Input0_ProcessInputRow(Input0Buffer Row)

{

if (StructuralComparisons.StructuralEqualityComparer.Equals(Row.Hash, previousHash))

{

Row.DupRowNo = previousRowNo;

Row.DirectRowToDuplicate();

}

else

{

Row.DirectRowToUnique();

}

previousHash = Row.Hash;

previousRowNo = Row.RowNo;

}