I have a class, like this:

    public class MyClass
    {
        public int Value { get; set; }
        public bool IsValid { get; set; }
    }

In reality it's much larger, but this is enough to reproduce the problem (or rather, the weirdness).

I want to get the sum of Value over all instances that are valid. So far, I've found two solutions to this.

The first one is this:

int result = myCollection.Where(mc => mc.IsValid).Select(mc => mc.Value).Sum();

The second one, however, is this:

int result = myCollection.Select(mc => mc.IsValid ? mc.Value : 0).Sum();

I want the most efficient method. At first, I thought the second one would be more efficient. Then the theoretical part of me started going, "Well, one is O(n + m + m), the other one is O(n + n), where n is the size of the collection and m is the number of valid items. The first one should perform better with more invalids, while the second one should perform better with fewer." So I thought that, on balance, they would perform equally. EDIT: And then @Martin pointed out that the Where and the Select were combined, so it should actually be O(m + n). However, if you look below, it seems like this is not related.
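To make the n and m in that reasoning concrete, here is a minimal sketch that counts how often each lambda runs. It assumes n is the collection size and m is the number of valid items; the `CallCounter` class and the sample data are hypothetical and exist only for illustration. It counts delegate calls, not time, so it says nothing about which version is actually faster.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class MyClass
{
    public int Value { get; set; }
    public bool IsValid { get; set; }
}

// Hypothetical helper: counts lambda invocations for both approaches.
public static class CallCounter
{
    // Where + Select + Sum: the predicate runs n times, the selector m times.
    public static (int PredicateCalls, int SelectorCalls) CountWhereSelect(IEnumerable<MyClass> items)
    {
        int predicateCalls = 0, selectorCalls = 0;
        _ = items
            .Where(mc => { predicateCalls++; return mc.IsValid; })
            .Select(mc => { selectorCalls++; return mc.Value; })
            .Sum();
        return (predicateCalls, selectorCalls);
    }

    // Select (with the ternary) + Sum: the selector runs n times.
    public static int CountSelectOnly(IEnumerable<MyClass> items)
    {
        int selectorCalls = 0;
        _ = items
            .Select(mc => { selectorCalls++; return mc.IsValid ? mc.Value : 0; })
            .Sum();
        return selectorCalls;
    }

    public static void Main()
    {
        // Sample data (an assumption): n = 10 items, m = 4 valid (0, 3, 6, 9).
        var items = Enumerable.Range(0, 10)
            .Select(i => new MyClass { Value = i, IsValid = i % 3 == 0 })
            .ToList();

        var ws = CountWhereSelect(items);
        Console.WriteLine($"Where + Select: {ws.PredicateCalls} predicate calls, {ws.SelectorCalls} selector calls");
        Console.WriteLine($"Select only: {CountSelectOnly(items)} selector calls");
    }
}
```

With this sample data the first version makes 10 predicate calls plus 4 selector calls, and the second makes 10 selector calls.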

So I put it to the test.

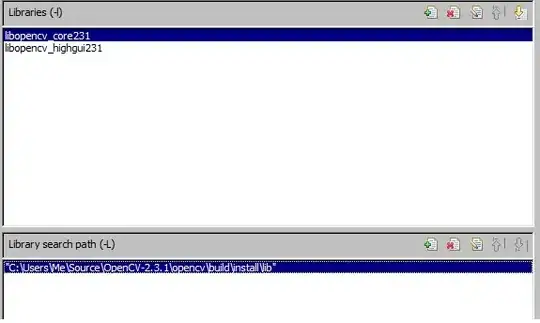

(It's 100+ lines, so I thought it was better to post it as a Gist.)
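For readers who can't open the Gist, the following is a much smaller sketch of the same kind of measurement. It is not the code from the Gist: the `BenchmarkSketch` class, the collection size, the iteration count, and the percentages tried are all assumptions chosen for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;

public class MyClass
{
    public int Value { get; set; }
    public bool IsValid { get; set; }
}

// Hypothetical stand-in for the real benchmark; not the Gist's code.
public static class BenchmarkSketch
{
    // Builds `count` items of which roughly `validPercent` percent are valid.
    public static List<MyClass> Build(int count, int validPercent, int seed = 42)
    {
        var rng = new Random(seed);
        return Enumerable.Range(0, count)
            .Select(i => new MyClass { Value = rng.Next(100), IsValid = rng.Next(100) < validPercent })
            .ToList();
    }

    // Runs the query `iterations` times and returns the total elapsed milliseconds.
    public static double TimeMs(Func<int> query, int iterations)
    {
        query(); // warm-up call so JIT compilation is not part of the measurement
        var sw = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++) query();
        sw.Stop();
        return sw.Elapsed.TotalMilliseconds;
    }

    public static void Main()
    {
        foreach (int validPercent in new[] { 0, 25, 50, 75, 100 })
        {
            var data = Build(100000, validPercent);
            double whereSelect = TimeMs(() => data.Where(mc => mc.IsValid).Select(mc => mc.Value).Sum(), 50);
            double selectOnly = TimeMs(() => data.Select(mc => mc.IsValid ? mc.Value : 0).Sum(), 50);
            Console.WriteLine($"{validPercent,3}% valid: Where + Select {whereSelect:F1} ms, Select {selectOnly:F1} ms");
        }
    }
}
```

Timings from a sketch like this vary between machines and runs, so it is best built in Release mode and run without a debugger attached.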

The results were... interesting.

With 0% tie tolerance:

The scales tip in favour of Select and Where, by about 30 points.

    How much do you want to be the disambiguation percentage?
    0
    Starting benchmarking.
    Ties: 0
    Where + Select: 65
    Select: 36

With 2% tie tolerance:

It's the same, except that some runs were within 2% of each other. I'd say that's the minimum margin of error. Select and Where now have a lead of only about 20 points.

    How much do you want to be the disambiguation percentage?
    2
    Starting benchmarking.
    Ties: 6
    Where + Select: 58
    Select: 37
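For clarity, this is roughly what I mean by a tie within a given percentage. The sketch below is an assumption about how such a rule can be written, not an excerpt from the benchmark: the `TieSketch` class, the `Outcome` enum, and the choice to measure the gap relative to the slower time are all hypothetical.

```csharp
using System;

public enum Outcome { Tie, FirstWins, SecondWins }

// Hypothetical tie rule; the real benchmark may define "within X%" differently.
public static class TieSketch
{
    public static Outcome Classify(double firstMs, double secondMs, double tolerancePercent)
    {
        double slower = Math.Max(firstMs, secondMs);
        if (slower == 0) return Outcome.Tie; // both took no measurable time

        // Gap between the two timings, as a percentage of the slower one (an assumption).
        double gapPercent = Math.Abs(firstMs - secondMs) / slower * 100.0;
        if (gapPercent <= tolerancePercent) return Outcome.Tie;

        // The smaller time wins.
        return firstMs < secondMs ? Outcome.FirstWins : Outcome.SecondWins;
    }

    public static void Main()
    {
        Console.WriteLine(Classify(100, 99, 2));  // gap is 1%, within tolerance
        Console.WriteLine(Classify(100, 90, 2));  // gap is 10%, second is faster
        Console.WriteLine(Classify(90, 100, 5));  // gap is 10%, first is faster
    }
}
```

Under this rule a tolerance of 0 only reports a tie when both timings are exactly equal, which matches the zero ties in the first run.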

With 5% tie tolerance:

This is what I'd consider my maximum margin of error. It makes things a bit better for the Select, but not by much.

    How much do you want to be the disambiguation percentage?
    5
    Starting benchmarking.
    Ties: 17
    Where + Select: 53
    Select: 31

With 10% tie tolerance:

This is way out of my margin of error, but I'm still interested in the result, because it gives the Select and Where the twenty-point lead they've had for a while now.

    How much do you want to be the disambiguation percentage?
    10
    Starting benchmarking.
    Ties: 36
    Where + Select: 44
    Select: 21

With 25% tie tolerance:

This is way, way out of my margin of error, but I'm still interested in the result, because the Select and Where still (nearly) keep their 20-point lead. It seems like it's outclassing the Select in a distinct few runs, and that's what is giving it the lead.

    How much do you want to be the disambiguation percentage?
    25
    Starting benchmarking.
    Ties: 85
    Where + Select: 16
    Select: 0

Now, I'm guessing that the 20 point lead came from the middle, where they're both bound to get around the same performance. I could try and log it, but it would be a whole load of information to take in. A graph would be better, I guess.

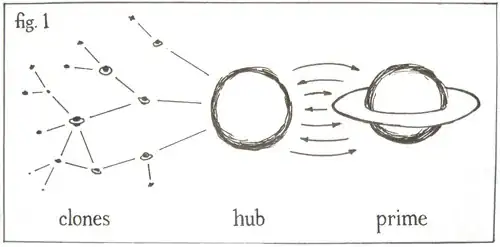

So that's what I did.

It shows that the Select line keeps steady (expected) and that the Select + Where line climbs up (expected). However, what puzzles me is why it doesn't meet the Select line at 50 or earlier: in fact I was expecting it to meet earlier than 50, as an extra enumerator had to be created for the Select and Where. I mean, this shows the 20-point lead, but it doesn't explain why. This, I guess, is the main point of my question.

Why does it behave like this? Should I trust it? If not, should I use the other one or this one?

As @KingKong mentioned in the comments, you can also use Sum's overload that takes a lambda. So my two options are now changed to this:

First:

int result = myCollection.Where(mc => mc.IsValid).Sum(mc => mc.Value);

Second:

int result = myCollection.Sum(mc => mc.IsValid ? mc.Value : 0);
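As a sanity check that these are drop-in replacements, here is a small sketch that runs all four variants on the same data and compares the results. The `SumVariants` class and the three sample items are hypothetical and only serve the illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class MyClass
{
    public int Value { get; set; }
    public bool IsValid { get; set; }
}

// Hypothetical helper that evaluates all four queries from the question.
public static class SumVariants
{
    public static int[] AllFour(IEnumerable<MyClass> myCollection)
    {
        return new[]
        {
            myCollection.Where(mc => mc.IsValid).Select(mc => mc.Value).Sum(),
            myCollection.Select(mc => mc.IsValid ? mc.Value : 0).Sum(),
            myCollection.Where(mc => mc.IsValid).Sum(mc => mc.Value),
            myCollection.Sum(mc => mc.IsValid ? mc.Value : 0),
        };
    }

    public static void Main()
    {
        // Sample data (an assumption): two valid items (5 and 11) and one invalid item.
        var sample = new List<MyClass>
        {
            new MyClass { Value = 5, IsValid = true },
            new MyClass { Value = 7, IsValid = false },
            new MyClass { Value = 11, IsValid = true },
        };
        Console.WriteLine(string.Join(", ", AllFour(sample))); // prints "16, 16, 16, 16"
    }
}
```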

I'm going to make it a bit shorter, but:

    How much do you want to be the disambiguation percentage?
    0
    Starting benchmarking.
    Ties: 0
    Where: 60
    Sum: 41

    How much do you want to be the disambiguation percentage?
    2
    Starting benchmarking.
    Ties: 8
    Where: 55
    Sum: 38

    How much do you want to be the disambiguation percentage?
    5
    Starting benchmarking.
    Ties: 21
    Where: 49
    Sum: 31

    How much do you want to be the disambiguation percentage?
    10
    Starting benchmarking.
    Ties: 39
    Where: 41
    Sum: 21

    How much do you want to be the disambiguation percentage?
    25
    Starting benchmarking.
    Ties: 85
    Where: 16
    Sum: 0

The twenty-point lead is still there, meaning it doesn't have to do with the Where and Select combination pointed out by @Marcin in the comments.

Thanks for reading through my wall of text! Also, if you're interested, here's the modified version that logs the CSV that Excel takes in.