I am having trouble with neural networks in MATLAB. I started with something simple: I used nntool to build a network with one hidden layer (a single neuron) with a linear activation function, and I used a linear activation function for the output as well. I fed my Xs and Ys to the tool and evaluated the results on the testing set.

I compared that with the standard ridge function in MATLAB.

The neural network performed much worse than the ridge function. The first reason is that many of its predictions are negative, even though my target is always positive. Ridge regression gave about 800 negative values, while the neural network gave around 5000, which ruined the accuracy of nntool.
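
To make the comparison concrete, here is a minimal sketch of how a ridge baseline and the count of negative predictions might be computed. It runs on synthetic stand-in data, not the data from this post; the variable names `X`, `y`, `yhat` and the value of `lambda` are assumptions for illustration, and `ridge` requires the Statistics Toolbox.

```matlab
% Sketch: ridge baseline and count of negative predictions.
% Synthetic stand-in data; names and lambda are illustrative assumptions.
rng(0);
X = randn(200, 5);                                      % 200 samples, 5 features
y = abs(X * [3; -2; 1; 0.5; 4] + 10 + randn(200, 1));   % non-negative targets

lambda = 1;                             % hypothetical ridge parameter
b = ridge(y, X, lambda, 0);             % scaled = 0: b(1) is the intercept
yhat = [ones(size(X, 1), 1), X] * b;    % predictions in the original units

numNegative = sum(yhat < 0);            % predictions that fall below zero
fprintf('Negative predictions: %d of %d\n', numNegative, numel(yhat));
```

Counting `yhat < 0` the same way for the network output would put both models on the same footing.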

Since nntool can be used to perform linear regression, why is it not performing as well as ridge regression?

What is the reason behind the negative values, and how can I enforce positive predictions?

Here is what I use to train the network:

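    % Target column for the current iteration
    targets = dayofyear_targets(:, i+1);

    % Fitting network with 5 hidden neurons (newfit expects samples in columns)
    net = newfit(train_data', targets', 5);
    net.performFcn = 'mae';                  % mean absolute error
    net.layers{1}.transferFcn = 'tansig';    % hidden layer
    net.layers{2}.transferFcn = 'purelin';   % linear output layer
    net.trainParam.max_fail = 10;            % validation failures before stopping

    net = train(net, train_data', targets');
    results{n}(:, i) = sim(net, train_data');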
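
For reference, the purely linear configuration described at the top of the post (one hidden neuron, linear activations in both layers) could be sketched as follows. This is an assumption about how that setup maps onto code, not the code actually used; `x` and `t` are synthetic stand-ins, and in newer toolbox releases `fitnet` replaces `newfit`.

```matlab
% Sketch: one hidden neuron with linear activations in both layers.
% x and t are synthetic stand-ins, not the data from this post.
rng(0);
x = randn(5, 200);                       % features in rows, samples in columns
t = [3 -2 1 0.5 4] * x + 10;             % linear target, 1 x 200

net = newfit(x, t, 1);                   % one hidden neuron
net.layers{1}.transferFcn = 'purelin';   % linear hidden layer
net.layers{2}.transferFcn = 'purelin';   % linear output layer
net.trainParam.showWindow = false;       % suppress the training GUI
net = train(net, x, t);
pred = sim(net, x);                      % 1 x 200 predictions
```

With both layers linear, the output is a linear function of the inputs, so the model has the same functional form as a linear regression, although it is fitted by iterative training rather than in closed form.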

Here is the link to my data: https://www.dropbox.com/s/0wcj2y6x6jd2vzm/data.mat

Max value of target = 31347900, min value of target = 12000, std of target = 7.8696e+06, mean of target = 1.6877e+07

Mean of input data (all features) = 0

Max of input features = 318.547660595906 170.087177689426 223.932169893425 168.036356568791 123.552142071032 119.203127702922 104.835054133360 103.991193950830 114.185533613098 89.9463148033190 146.239919217723 87.4695246220901 54.0670595471470 138.770752686700 206.797850609643 66.1464335873203 74.2115064643667 57.5743248336263 34.9080123850414 51.0189601377110 28.2306033402457 59.0128127003956 109.067637217394 307.093253638216 103.049923948310 62.8146642809675 200.015259541953 116.661885835164 62.5567327185901 53.8264756204627 58.8389745246703 176.143066044763 109.758983758653 60.7299481351038 58.6442946860097 46.1757085114781 336.346653669636 188.317461118279 224.964813627679 131.036150096149 137.154788108331 101.660743039860 79.4118778807977 71.4376953724718 90.5561535067498 93.4577679861134 336.454999007931 188.478832826684 225.143399783080 131.129689699137 137.344882971079 101.735403131103 79.4552027696783 71.4625401815804 90.6415702940799 93.4391513416449 143.529912145748 139.846472255779 69.3652595100658 141.229186078884 142.169055101267 61.7542261599789 152.193483162673 142.600096412522 100.923921522930 117.430577166104 95.7956956529542 97.2020336095432 53.3982366051064 67.5119662506151 51.6323341924432 45.0561119607012 42.9378617679366 129.976361335597 142.673696349981 80.3147691198763 71.3756376053104 63.4368122986219 44.5956741629581 53.4495610863871 58.7095984295653 45.6460094353149 39.1823704174863

Min of input features = -20.4980450089652 -216.734809594199 -208.377002401333 -166.153721182481 -164.971591950319 -125.794572986012 -120.613947913087 -90.5034237473168 -134.579349373396 -83.3049591539539 -207.619242338228 -61.4759254872546 -53.9649370954913 -160.211101485606 -19.9518644140863 -71.7889519995308 -53.8121825067231 -63.5789507316766 -51.1490159556167 -45.3464904959582 -31.6240685853237 -44.3432050298007 -34.2568293807697 -266.636505655523 -146.890814672460 -74.1775783694521 -132.270950595716 -98.6307112885543 -74.9183852672982 -62.4830008457438 -50.9507510122653 -140.067268423566 -93.0276674484945 -46.0819928136273 -59.2773430879897 -42.5451478861616 -31.2745435717060 -167.227723082743 -165.559585876166 -111.610031207207 -115.227936838215 -114.221934636009 -100.253661816324 -92.8856877745228 -86.1818201082433 -70.8388921500665 -31.4414388158249 -167.300019804654 -165.623030944544 -111.652804647492 -115.385214399271 -114.284846572143 -100.330328846390 -93.0745562342156 -86.1595126080268 -70.9022836842639 -255.769604133190 -258.123896542916 -55.1273177937196 -254.950820371016 -237.808870530211 -48.7785774080310 -213.713286177228 -246.086347088813 -125.941623423708 -116.383806139418 -79.2526295146070 -73.5322630343671 -59.5627573635424 -59.8471670606059 -64.6956071579830 -44.2151862981818 -37.8399444185350 -165.171915536922 -61.7557905578095 -97.6861764054228 -48.1218110960853 -57.4061842741057 -55.2734701363017 -45.7001129953926 -46.0498982933589 -40.8981619566775 -38.8963700558353

std of input features = 32.6229352625809 23.9923892231470 20.2491752921310 17.7607289226108 16.0041198617605 14.0220141286592 12.5650595823472 11.8017618129464 11.3556667194196 10.5382275790401 79.9955119586915 23.4033030770963 13.6077112635514 70.2453437964039 20.5151528145556 16.0996176741868 14.1570158221881 12.9623353379168 10.9374477375002 8.96886512490408 8.14900837031189 7.08031665751228 6.91909266176659 78.5294157406654 29.1855289103841 17.8430919295327 76.8762213278391 26.2042738515736 17.0642403174281 14.5812208141282 11.0486273595910 76.5345079046264 27.0522533813606 15.3463708931398 14.6265102381665 11.1878734989856 39.6000366966711 26.1651093368473 23.0548487219797 17.7418206149244 16.6414818214387 13.3202865460648 12.3418432467697 11.6967799788894 10.8462000495929 10.4594143594862 39.5881760483459 26.1554037787439 23.0480628017814 17.7384413542873 16.6400399748141 13.3209601910848 12.3455390551215 11.6986154850079 10.8471011424912 10.4616180751664 84.5166510619818 84.2685711235292 17.6461724536770 84.5782246722891 84.1536835974735 15.4898443616888 84.4295575869672 59.7308251367612 27.7396138514949 24.5736295499757 18.5604346514449 15.5172516938784 12.5038199620381 11.8900580903921 10.7970958504272 9.68255544149509 8.96604859535919 61.8751159200641 22.9395284949373 20.3023241153997 18.6165218063180 13.5503823185794 12.1726984705006 11.1423398921756 9.54944172482809 8.81223325514952 7.92656384557323

Number of samples = 5113

Dimension of input = 5113x83, dimension of output = 5113x1

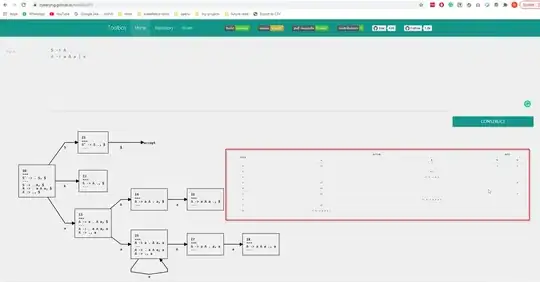

Actual target and predicted values