This is a follow-up question related to this question.

Thanks to previous help I have successfully imported a netCDF file (or files with MFDataset) and am able to compare the different times to one another to create another cumulative dataset. Here is a piece of the current code.

    import numpy as np
    import netCDF4
    import os

    f = netCDF4.MFDataset('air.2m.1979.nc')
    atemp = f.variables['air']
    ntimes, ny, nx = atemp.shape

    # Running count of consecutive cold days for every time step and grid cell
    cold_days = np.zeros((ntimes, ny, nx), dtype=int)

    for i in range(ntimes):
        for b in range(ny):
            for c in range(nx):
                if i == 0:
                    # First time step: there is no previous day to build on
                    if atemp[i,b,c] < 0:
                        cold_days[i,b,c] = 1
                    else:
                        cold_days[i,b,c] = 0
                else:
                    # Later time steps: extend the run or reset it to zero
                    if atemp[i,b,c] < 0:
                        cold_days[i,b,c] = cold_days[i-1,b,c] + 1
                    else:
                        cold_days[i,b,c] = 0

This seems like a brute-force way to get the job done, and although it works, it takes a very long time. I'm not sure whether that is because I'm dealing with 365 matrices of 349x277 cells (35,285,645 pixels in total) or because my old-school brute-force approach is simply slow compared with some built-in Python methods.

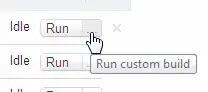

Below is an example of what I believe the code is doing. It looks at Time and increments cold days if temp < 0. If temp >= 0 than cold days resets to 0. In the below image you will see that the cell at row 2, column 1 increments each Time that passes but the cell at row 2, column 2 increments at Time 1 but resets to zero on Time 2.
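To make the rule concrete, here is a minimal sketch on a made-up 3x2x2 array. The values and the helper name `running_cold_days` are hypothetical and not taken from my real file. It applies the same increment-or-reset rule one time step at a time to the whole grid with `np.where`, so only the time axis is looped over. I have not checked how it performs on the full dataset.

```python
import numpy as np

# Hypothetical toy data: 3 time steps on a 2x2 grid (not values from the real file).
temps = np.array([
    [[ 1.0, 2.0], [-1.0, -3.0]],
    [[ 0.5, 1.0], [-2.0,  4.0]],
    [[-1.0, 3.0], [-0.5, -1.0]],
])

def running_cold_days(temps):
    """Count consecutive sub-zero time steps per cell, resetting when temp >= 0."""
    cold = temps < 0                       # boolean mask, same shape as temps
    counts = np.zeros(temps.shape, dtype=int)
    counts[0] = cold[0]                    # first step: 1 where cold, else 0
    for t in range(1, temps.shape[0]):
        # Extend the previous run where it is still cold, otherwise reset to 0.
        counts[t] = np.where(cold[t], counts[t - 1] + 1, 0)
    return counts

cold_days = running_cold_days(temps)
print(cold_days)
```

In this toy case the cell at row 2, column 1 counts 1, 2, 3 across the three time steps, while the cell at row 2, column 2 counts 1, then resets to 0, then starts again at 1.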

Is there a more efficient way to rip through this netCDF dataset to perform this type of operation?