I'm running the iOS face detector (`CIDetector`) on every possible EXIF orientation of an image, like this:

    for (exif = 1; exif <= 8; exif++)
    {
        @autoreleasepool {
            NSNumber *orientation = [NSNumber numberWithInt:exif];
            NSDictionary *imageOptions = [NSDictionary dictionaryWithObject:orientation forKey:CIDetectorImageOrientation];
            NSTimeInterval start = [NSDate timeIntervalSinceReferenceDate];
            glFlush();
            features = [self.detector featuresInImage:ciimage options:imageOptions];
            //features = [self.detector featuresInImage:ciimage];
            if (features.count > 0)
            {
                NSString *str = [NSString stringWithFormat:@"-I- found faces using exif %d", exif];
                [faceDetection log:str];
                NSTimeInterval duration = [NSDate timeIntervalSinceReferenceDate] - start;
                str = [NSString stringWithFormat:@"-I- facedetection total runtime is %f s", duration];
                [faceDetection log:str];
                self.exif = [[NSNumber alloc] initWithInt:exif];
                break;
            }
            else {
                features = nil;
            }
        }
    }

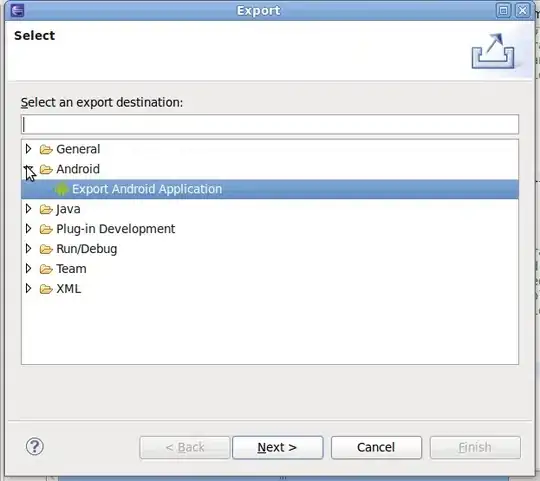

but it seems in the profiler that the memory is growing each time:

I'm not sure whether this growth is real and, if it is, how to fix it.