I wrote a PyMC model for fitting 3 Normals to data (similar to the one in this question).

```python
import numpy as np
import pymc as mc  # PyMC 2.x API
import matplotlib.pyplot as plt

n = 3         # number of mixture components
ndata = 500   # number of data points

# simulated data: each point is drawn from one of three Normals
v = np.random.randint(0, n, ndata)
data = (v == 0) * (10 + 1 * np.random.randn(ndata)) \
     + (v == 1) * (-10 + 2 * np.random.randn(ndata)) \
     + (v == 2) * 3 * np.random.randn(ndata)

# the model
dd = mc.Dirichlet('dd', theta=(1,) * n)                   # mixture weights
category = mc.Categorical('category', p=dd, size=ndata)  # component label of each point
precs = mc.Gamma('precs', alpha=0.1, beta=0.1, size=n)    # component precisions (1/variance)
means = mc.Normal('means', 0, 0.001, size=n)              # component means (PyMC 2 Normal takes a precision tau)

@mc.deterministic
def mean(category=category, means=means):
    # mean of the component each data point is assigned to
    return means[category]

@mc.deterministic
def prec(category=category, precs=precs):
    # precision of the component each data point is assigned to
    return precs[category]

obs = mc.Normal('obs', mean, prec, value=data, observed=True)

model = mc.Model({'dd': dd,
                  'category': category,
                  'precs': precs,
                  'means': means,
                  'obs': obs})

M = mc.MAP(model)
M.fit()

# mcmc sampling
mcmc = mc.MCMC(model)
mcmc.use_step_method(mc.AdaptiveMetropolis, model.means)
mcmc.use_step_method(mc.AdaptiveMetropolis, model.precs)
mcmc.sample(100000, burn=0, thin=10)

tmeans = mcmc.trace('means').gettrace()
tsd = mcmc.trace('precs').gettrace()**-.5  # standard deviations from precisions

plt.plot(tmeans)
#plt.errorbar(range(len(tmeans)), tmeans, yerr=tsd)
plt.show()
```

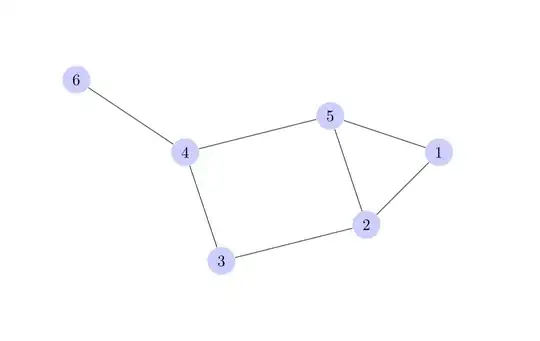

The distributions from which I sample my data are clearly overlapping, yet there are 3 well-separated peaks in the data (see image). Fitting 3 Normals to this kind of data should be trivial, and I would expect it to recover the means I sample from (-10, 0, 10) in 99% of the MCMC runs.
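The peak structure can also be checked without sampling, by evaluating the density the data are drawn from. A minimal sketch in plain NumPy, assuming equal mixture weights of 1/3 (what `np.random.randint(0, n, ndata)` gives in expectation) and the component means and standard deviations from the simulation code; the helper names `normal_pdf` and `true_density` are hypothetical:

```python
import numpy as np

# Component parameters used to simulate the data: (mean, standard deviation)
COMPONENTS = [(10.0, 1.0), (-10.0, 2.0), (0.0, 3.0)]
# Assumption: equal weights, since v is drawn uniformly from {0, 1, 2}
WEIGHTS = [1 / 3, 1 / 3, 1 / 3]


def normal_pdf(x, mu, sd):
    """Density of N(mu, sd**2) evaluated at x."""
    return np.exp(-0.5 * ((x - mu) / sd) ** 2) / (sd * np.sqrt(2 * np.pi))


def true_density(x):
    """Mixture density that the simulated data are drawn from."""
    return sum(w * normal_pdf(x, mu, sd) for w, (mu, sd) in zip(WEIGHTS, COMPONENTS))


for x in [-10.0, -5.0, 0.0, 5.0, 10.0]:
    print(f"p({x:+.0f}) = {true_density(x):.4f}")
```

The density at -5 comes out well below the values at -10 and 0, consistent with there being no peak there.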

Example of an outcome I would expect. This happened in 2 out of 10 cases.

Example of an unexpected result that happened in 6 out of 10 cases. This is odd because there is no peak in the data at -5, so I can't really see a serious local minimum that the sampler could get stuck in (going from (-5,-5) to (-6,-4) should improve the fit, and so on).
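The intuition that a configuration around -5 fits worse can be checked by evaluating the mixture log-likelihood with the category labels summed out. A minimal sketch in plain NumPy, not PyMC; for simplicity it assumes equal weights and holds the standard deviations at their simulation values (1, 2, 3) so only the means vary, and the helper `mixture_loglik` and the "stuck" mean vectors are hypothetical illustrations:

```python
import numpy as np


def mixture_loglik(data, means, sds, weights):
    """Log-likelihood of data under a Gaussian mixture, categories marginalized out."""
    data = np.asarray(data, dtype=float)[:, None]  # shape (ndata, 1)
    means, sds, weights = (np.asarray(a, dtype=float) for a in (means, sds, weights))
    # log(w_k * N(x | mu_k, sd_k**2)) for every (point, component) pair
    log_terms = (np.log(weights) - np.log(sds) - 0.5 * np.log(2 * np.pi)
                 - 0.5 * ((data - means) / sds) ** 2)
    # log-sum-exp over components, then sum over data points
    return np.logaddexp.reduce(log_terms, axis=1).sum()


# Simulate data the same way as in the question (fixed seed for reproducibility)
rng = np.random.default_rng(0)
ndata = 500
v = rng.integers(0, 3, ndata)
data = ((v == 0) * (10 + 1 * rng.standard_normal(ndata))
        + (v == 1) * (-10 + 2 * rng.standard_normal(ndata))
        + (v == 2) * 3 * rng.standard_normal(ndata))

# Assumption: sds fixed at the simulation values, equal weights
sds = [1.0, 2.0, 3.0]
weights = [1 / 3] * 3
for means in ([10, -10, 0], [10, -5, -5], [10, -6, -4]):
    print(means, mixture_loglik(data, means, sds, weights))
```

This only compares points of the marginal likelihood; the sampler in the question moves in the joint space of `means`, `precs` and all 500 `category` labels, where the landscape can look different.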

What could be the reason that (adaptive Metropolis) MCMC sampling gets stuck in the majority of cases? What would be possible ways to improve the sampling procedure so that it doesn't?

So the runs do converge, but they do not explore the right range of values.

Update: With different priors, I get the right convergence (approximately the first picture) in 5/10 runs and the wrong one (approximately the second picture) in the other 5/10. Essentially, I changed the lines below and removed the AdaptiveMetropolis step methods:

```python
precs = mc.Gamma('precs', alpha=2.5, beta=1, size=n)
means = mc.Normal('means', [-5, 0, 5], 0.0001, size=n)
```
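Because PyMC 2's `Normal` takes a precision `tau` (1/variance) and, assuming its rate parameterization, `Gamma(alpha, beta)` has mean `alpha / beta`, the priors are easier to compare after converting them to familiar quantities. A small arithmetic sketch; the helper names `tau_to_sd`, `gamma_mean` and `gamma_var` are hypothetical:

```python
import math


def tau_to_sd(tau):
    """Standard deviation implied by a precision tau = 1 / variance."""
    return 1.0 / math.sqrt(tau)


def gamma_mean(alpha, beta):
    """Mean of a Gamma distribution with shape alpha and rate beta (assumed parameterization)."""
    return alpha / beta


def gamma_var(alpha, beta):
    """Variance of a Gamma distribution with shape alpha and rate beta (assumed parameterization)."""
    return alpha / beta ** 2


# Prior on the component means: Normal(mu, tau), tau being a precision
print("prior sd of means, original:", tau_to_sd(0.001))
print("prior sd of means, updated: ", tau_to_sd(0.0001))

# Prior on the component precisions: Gamma(alpha, beta)
for label, (alpha, beta) in [("original", (0.1, 0.1)), ("updated", (2.5, 1.0))]:
    m = gamma_mean(alpha, beta)
    print(f"{label}: precision prior mean {m:.2f}, "
          f"variance {gamma_var(alpha, beta):.2f}, "
          f"sd at the mean precision {tau_to_sd(m):.2f}")

# Precisions of the simulated components (sd = 1, 2, 3)
print("simulated precisions:", [1.0 / sd ** 2 for sd in (1.0, 2.0, 3.0)])
```

Under these assumptions, the updated `Gamma(2.5, 1)` prior has a mean precision of 2.5, larger than the precisions of all three simulated components (1, 0.25 and about 0.11).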