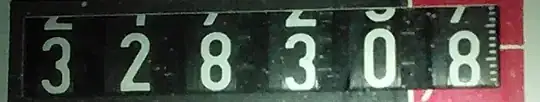

Two things will fix this completely:

1. Remove everything that is not text from the image. Use some computer vision to find the plate area (for example, by color) and then mask out all of the background. The input to tesseract should be black and white: text black, everything else white.

2. Remove skew (as mentioned by FrankPI above). tesseract is supposed to cope with skew (see the "Tesseract OCR Engine" overview by R. Smith), but it doesn't always work, especially if you have a single line as opposed to a few paragraphs. So removing skew yourself first is always good, if you can do it reliably. You will probably know the exact shape of the bounding trapezoid of the plate from step 1, so this should not be too hard. While removing skew you can also remove perspective: license plates (usually) all use the same font, so if you scale them to the same perspective-free shape the letter shapes will be essentially the same, which helps text recognition.

Some further pointers...

Don't try to code this at first: take a really easy-to-OCR picture of a plate (i.e. from directly in front, no perspective), edit it in Photoshop (or GIMP), and run it through tesseract on the command line. Keep editing in different ways until this works. For example: select by color (or flood-select the letter shapes), fill with black, invert the selection, fill with white, perspective-transform so the corners of the plate form a rectangle, etc. Take a bunch of pictures, some harder (maybe from odd angles), and do this with all of them. Once this works completely, think about how to make a CV algorithm that does the same thing you did in Photoshop :)

P.S. Also, it is better to start with a higher-resolution image if possible. It looks like the text in your example is around 14 pixels tall. tesseract works pretty well with 12 point text at 300 dpi, which is about 50 pixels tall, and it works much better at 600 dpi. Try to make your letters at least 50, preferably 100, pixels tall.
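The pixel figures above come from the fact that a point is 1/72 of an inch. A small sketch of the arithmetic follows; the helper names are made up for illustration, and treating the nominal point size as the letter height is the same approximation used above.

```python
def points_to_pixels(points, dpi):
    """Convert a font size in points (1/72 inch) to pixels at a given dpi."""
    return points * dpi / 72.0


def upscale_factor(current_px, target_px):
    """How much to enlarge an image so its text reaches target_px tall."""
    return max(1.0, target_px / current_px)


print(points_to_pixels(12, 300))  # 50.0
print(points_to_pixels(12, 600))  # 100.0
print(round(upscale_factor(14, 50), 2))  # 3.57
```

So 14-pixel text would need roughly a 3.6x enlargement to reach 50 pixels. Upscaling an existing image only interpolates, though, so capturing at a higher resolution in the first place is preferable.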

P.P.S. Are you doing anything to train tesseract? I think you have to, because the font here is different enough to be a problem. You probably also need something to recognize (and not penalize) dashes, which will be very common in your texts: it looks like in the second example "T-" is recognized as "H".