Recently a production environment became very slow. The process was using 200% CPU, but it kept working. After I restarted the service it functioned normally again. I observed these symptoms: the Par Survivor Space was empty for a long time, and garbage collection took about 20% of the CPU time.
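To measure the "about 20%" GC share without New Relic, you can sample the standard `GarbageCollectorMXBean` counters from inside the JVM. This is a minimal sketch. `GcTimeShare` and its 5-second window are hypothetical and not part of the original setup. `getCollectionTime()` reports accumulated elapsed milliseconds, so the result is the share of wall-clock time spent in GC. With `-XX:ParallelGCThreads=8`, the share of CPU time can differ from that figure.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

/** Hypothetical helper: share of wall-clock time spent in GC over a sampling window. */
public class GcTimeShare {

    /** Sum of accumulated collection time (ms) across all collectors in this JVM. */
    static long totalGcMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = gc.getCollectionTime(); // -1 if undefined for this collector
            if (t > 0) {
                total += t;
            }
        }
        return total;
    }

    /** Fraction of the window spent in GC, clamped to [0, 1]. */
    static double share(long gcBeforeMs, long gcAfterMs, long windowMs) {
        if (windowMs <= 0) {
            return 0.0;
        }
        double s = (double) (gcAfterMs - gcBeforeMs) / windowMs;
        return Math.max(0.0, Math.min(1.0, s));
    }

    public static void main(String[] args) throws InterruptedException {
        long windowMs = 5000; // assumption: 5-second sampling window
        long gcBefore = totalGcMillis();
        long start = System.currentTimeMillis();
        Thread.sleep(windowMs);
        long elapsed = System.currentTimeMillis() - start;
        long gcAfter = totalGcMillis();
        System.out.printf("GC time share: %.1f%%%n", 100 * share(gcBefore, gcAfter, elapsed));
    }
}
```

The helper only sees the JVM it runs in. To watch the Tomcat process from outside, you would need to read the same MXBeans over remote JMX.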

JVM options:

`-XX:+CMSParallelRemarkEnabled, -XX:+HeapDumpOnOutOfMemoryError, -XX:+UseConcMarkSweepGC, -XX:+UseParNewGC, -XX:HeapDumpPath=heapdump.hprof, -XX:MaxNewSize=700m, -XX:MaxPermSize=786m, -XX:NewSize=700m, -XX:ParallelGCThreads=8, -XX:SurvivorRatio=25, -Xms2048m, -Xmx2048m`

| Property | Value |
|---|---|
| Arch | amd64 |
| Dispatcher | Apache Tomcat |
| Dispatcher Version | 7.0.27 |
| Framework | java |
| Heap initial (MB) | 2048.0 |
| Heap max (MB) | 2022.125 |
| Java version | 1.6.0_35 |
| Log path | /opt/newrelic/logs/newrelic_agent.log |
| OS | Linux |
| Processors | 8 |
| System Memory | 8177.964, 8178.0 |
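You can work out the generation sizes these flags imply by hand. With `-XX:SurvivorRatio=25`, eden is 25 times the size of one survivor space, and the 700 MB young generation holds eden plus two survivor spaces. This sketch does only that arithmetic and does not query the running JVM; `GenLayout` is a hypothetical name. It gives roughly 648 MB of eden, about 26 MB per survivor space, and 1348 MB of old gen. HotSpot usually reports the maximum heap without one of the two survivor spaces. That would explain why `Heap max (MB)` shows about 2022 rather than 2048. The small remaining gap is presumably size alignment.

```java
/**
 * Hypothetical helper: derives the generation layout implied by
 * -Xmx2048m -XX:NewSize=700m -XX:MaxNewSize=700m -XX:SurvivorRatio=25.
 * Pure arithmetic; it does not query the running JVM.
 */
public class GenLayout {

    /** Young gen = eden + 2 survivors and eden = ratio * survivor, so survivor = young / (ratio + 2). */
    static double survivorMb(double youngMb, int survivorRatio) {
        return youngMb / (survivorRatio + 2);
    }

    static double edenMb(double youngMb, int survivorRatio) {
        return survivorRatio * survivorMb(youngMb, survivorRatio);
    }

    static double oldGenMb(double heapMb, double youngMb) {
        return heapMb - youngMb;
    }

    public static void main(String[] args) {
        double heapMb = 2048;   // -Xmx2048m
        double youngMb = 700;   // -XX:NewSize=700m and -XX:MaxNewSize=700m
        int ratio = 25;         // -XX:SurvivorRatio=25
        double survivor = survivorMb(youngMb, ratio);
        System.out.printf("Eden:            %.1f MB%n", edenMb(youngMb, ratio));
        System.out.printf("Each survivor:   %.1f MB%n", survivor);
        System.out.printf("Old gen:         %.1f MB%n", oldGenMb(heapMb, youngMb));
        // Only one survivor space is in use at a time, so the reported
        // maximum heap typically leaves one of them out.
        System.out.printf("Heap minus one survivor: %.1f MB%n", heapMb - survivor);
    }
}
```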

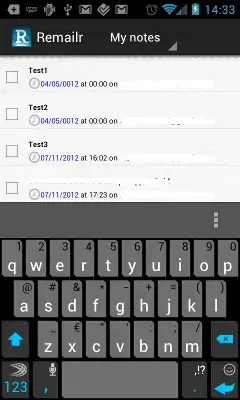

More information is in the attached screenshot. When the problem occurred, the used Code Cache and the used CMS Perm Gen (non-heap memory) dropped to half.
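To log the same pool figures New Relic charts, including the non-heap Code Cache and CMS Perm Gen, you can query the JVM's own `MemoryPoolMXBean`s. One way is from a scheduled task inside the webapp. This is a minimal sketch, and the class name is hypothetical. Pool names vary by JVM version and collector, so it prints whatever pools exist instead of looking up fixed names.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: one line per memory pool with used / committed / max in MB. */
public class PoolSnapshot {

    static final long MB = 1024L * 1024L;

    static List<String> describePools() {
        List<String> lines = new ArrayList<String>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage u = pool.getUsage(); // null if the pool is no longer valid
            if (u == null) {
                continue;
            }
            String kind = pool.getType() == MemoryType.HEAP ? "heap" : "non-heap";
            // getMax() is -1 when the pool has no defined maximum
            String max = u.getMax() < 0 ? "undefined" : (u.getMax() / MB) + " MB";
            lines.add(String.format("%-24s %-8s used=%d MB committed=%d MB max=%s",
                    pool.getName(), kind, u.getUsed() / MB, u.getCommitted() / MB, max));
        }
        return lines;
    }

    public static void main(String[] args) {
        for (String line : describePools()) {
            System.out.println(line);
        }
    }
}
```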

I took this information from New Relic.

The question is: why does the server become so slow?

Sometimes the server stops completely, but we found that this is caused by a problem in PDFBox: uploading certain PDFs that contain some fonts crashes the JVM.

More info: I observed that the Old Gen fills up every day, so I now restart the server daily. After a restart everything is fine, but the Old Gen fills up again by the next day and the server slows down until it needs another restart.
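When the Old Gen climbs every day even across garbage collections, objects are often staying reachable. A useful check is whether Old Gen usage *right after* a collection keeps rising. `MemoryPoolMXBean.getCollectionUsage()` returns that post-GC figure. This sketch is illustrative. The class name, the 10-minute sampling interval, the 50 MB growth threshold and matching the pool name on "Old Gen"/"Tenured" are all assumptions. Whether CMS concurrent cycles update the post-GC value may depend on the JVM version, so treat a `null` or zero reading as "not available".

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

/** Hypothetical helper: checks whether Old Gen usage after GC keeps rising. */
public class OldGenTrend {

    static final long MB = 1024L * 1024L;

    /** True if no sample drops below the previous one and total growth is at least minGrowth. */
    static boolean steadilyGrowing(long[] postGcUsed, long minGrowth) {
        if (postGcUsed.length < 2) {
            return false;
        }
        for (int i = 1; i < postGcUsed.length; i++) {
            if (postGcUsed[i] < postGcUsed[i - 1]) {
                return false;
            }
        }
        return postGcUsed[postGcUsed.length - 1] - postGcUsed[0] >= minGrowth;
    }

    /** Finds the old-generation heap pool by name ("CMS Old Gen" with CMS); null if none matches. */
    static MemoryPoolMXBean findOldGen() {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            String name = pool.getName();
            if (pool.getType() == MemoryType.HEAP
                    && (name.contains("Old Gen") || name.contains("Tenured"))) {
                return pool;
            }
        }
        return null;
    }

    public static void main(String[] args) throws InterruptedException {
        MemoryPoolMXBean oldGen = findOldGen();
        if (oldGen == null) {
            System.out.println("No old-generation pool found");
            return;
        }
        int samples = 6;                    // assumption: six samples...
        long intervalMs = 10 * 60 * 1000L;  // ...ten minutes apart
        long[] used = new long[samples];
        for (int i = 0; i < samples; i++) {
            // Usage right after the most recent GC of this pool (null if unsupported)
            MemoryUsage afterGc = oldGen.getCollectionUsage();
            used[i] = afterGc == null ? 0 : afterGc.getUsed();
            System.out.println(oldGen.getName() + " after last GC: " + used[i] / MB + " MB");
            if (i < samples - 1) {
                Thread.sleep(intervalMs);
            }
        }
        System.out.println(steadilyGrowing(used, 50 * MB)
                ? "Post-GC usage keeps rising: possible leak"
                : "No steady post-GC growth in this window");
    }
}
```

If the post-GC figure does keep growing, take a heap dump before the daily restart, for example with `jmap -dump:live,format=b,file=heap.hprof <pid>`. The dump shows which objects are accumulating.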