I'd like to generate unique random numbers between 0 and 1000 that never repeat (i.e. 6 doesn't show up twice), but that doesn't resort to something like an O(N) search of previous values to do it. Is this possible?

-

4Isn't this the same question as http://stackoverflow.com/questions/158716/how-do-you-efficiently-generate-a-list-of-k-non-repeating-integers-between-0-and-n – jk. Oct 12 '08 at 21:03

-

2Is 0 between 0 and 1000? – Pete Kirkham Jan 19 '09 at 20:49

-

6If you are prohibiting anything over constant time (like `O(n)` in time or memory), then many of the answer below are wrong, including the accepted answer. – jww Aug 29 '14 at 03:26

-

How would you shuffle a pack of cards? – Colonel Panic Dec 24 '14 at 11:21

-

You cannot have `O(1)` if there are `N` elements in the answer. So the requirements are unclear. – ivan_pozdeev Sep 05 '16 at 16:32

-

11**WARNING!** Many of the answers given below to not produce truly random _sequences_, are slower than O(n) or otherwise defective! http://www.codinghorror.com/blog/archives/001015.html is an essential read before you use any of them or try to concoct your own! – ivan_pozdeev Sep 07 '16 at 22:34

-

Flagging as an inferior duplicate as per http://meta.stackoverflow.com/questions/334325/a-few-intersecting-questions-about-picking-k-elements-of-n – ivan_pozdeev Oct 28 '16 at 23:20

22 Answers

Initialize an array of 1001 integers with the values 0-1000 and set a variable, max, to the current max index of the array (starting with 1000). Pick a random number, r, between 0 and max, swap the number at the position r with the number at position max and return the number now at position max. Decrement max by 1 and continue. When max is 0, set max back to the size of the array - 1 and start again without the need to reinitialize the array.

Update: Although I came up with this method on my own when I answered the question, after some research I realize this is a modified version of Fisher-Yates known as Durstenfeld-Fisher-Yates or Knuth-Fisher-Yates. Since the description may be a little difficult to follow, I have provided an example below (using 11 elements instead of 1001):

Array starts off with 11 elements initialized to array[n] = n, max starts off at 10:

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 1| 2| 3| 4| 5| 6| 7| 8| 9|10|

+--+--+--+--+--+--+--+--+--+--+--+

^

max

At each iteration, a random number r is selected between 0 and max, array[r] and array[max] are swapped, the new array[max] is returned, and max is decremented:

max = 10, r = 3

+--------------------+

v v

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 1| 2|10| 4| 5| 6| 7| 8| 9| 3|

+--+--+--+--+--+--+--+--+--+--+--+

max = 9, r = 7

+-----+

v v

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 1| 2|10| 4| 5| 6| 9| 8| 7: 3|

+--+--+--+--+--+--+--+--+--+--+--+

max = 8, r = 1

+--------------------+

v v

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 8| 2|10| 4| 5| 6| 9| 1: 7| 3|

+--+--+--+--+--+--+--+--+--+--+--+

max = 7, r = 5

+-----+

v v

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 8| 2|10| 4| 9| 6| 5: 1| 7| 3|

+--+--+--+--+--+--+--+--+--+--+--+

...

After 11 iterations, all numbers in the array have been selected, max == 0, and the array elements are shuffled:

+--+--+--+--+--+--+--+--+--+--+--+

| 4|10| 8| 6| 2| 0| 9| 5| 1| 7| 3|

+--+--+--+--+--+--+--+--+--+--+--+

At this point, max can be reset to 10 and the process can continue.

- 9,898

- 13

- 71

- 100

- 106,424

- 25

- 145

- 137

-

If the array doesn't start off shuffled (and not with the naive algorithm; see Jeff's post on shuffling), doesn't this introduce a subtle bias? – Mitch Wheat Jan 03 '09 at 06:09

-

-

6Jeff's post on shuffling suggests this will not return good random numbers.. http://www.codinghorror.com/blog/archives/001015.html – pro Jan 03 '09 at 09:55

-

16@Peter Rounce: I think not; this looks to me like the Fisher Yates algorithm, also quoted in Jeff's post (as the good guy). – Brent.Longborough Jan 03 '09 at 10:35

-

Perl6/Parrot will have lazy lists, that will only keep track of changed elements. Which means they won't have the initial hit that other systems would have. – Brad Gilbert Jan 04 '09 at 04:45

-

@Brad: I'm not really sure what you are talking about. What "initial hit" are you referring to and how will Perl6/Parrot avoid that exactly? – Robert Gamble Jan 04 '09 at 05:22

-

The algorithm you describe takes O(n log n) time to generate the list, or O(log n) amortized time per element. – Charles Sep 24 '10 at 20:05

-

-

@Robert: Because you're generating a (lg n)-bit random number for each number on the list, and this takes Omega(log n) time. – Charles Sep 24 '10 at 20:46

-

@Charles: I think your logic is faulty, it may take O(n) time to generate the original list of numbers if you do not already have this set up but it takes O(1) time to generate each number from the list which is that was being asked for. – Robert Gamble Sep 24 '10 at 20:53

-

1@Robert: This method does not generate the list in O(n), it takes O(n log n). I can't imagine that the request meant that we're allowed infinite pre-processing time, because in that case any method works -- just do whatever you need to do, then generate each item and store it in a table. It's clear to me that the time must include the upfront cost, which is log n per element. (Actually, the algorithm can be modified to generate each element in O(log n) time rather than O(log n) amortized time.) – Charles Sep 24 '10 at 20:59

-

@Charles: The initial list takes O(n) time to initialize (it takes twice as long to initialize 2 integers of the same size as it does initialize one such integer). The time that the random number generation takes depends on the implementation. Once you have the random number it takes constant time to execute the algorithm I presented. The OP asked for something "that doesn't resort to something like an O(N) search of previous values to do it" which indicates that the O(1) part should be the selection. Since this is the accepted answer, I think it met the OP's requirements. – Robert Gamble Sep 24 '10 at 21:36

-

1@Robert: The method requires Theta(n log n) random bits: actually, precisely lg n! bits. Are you claiming that you can generate Theta(n log n) bits in time O(n)? – Charles Sep 26 '10 at 04:40

-

@Charles, the claim is what I put in my answer. Read the question and my answer, if you feel you have a better answer you are more than free to post it. – Robert Gamble Sep 26 '10 at 16:05

-

3@robert: I just wanted to point out that it doesn't produce, as in the name of the question, "unique random numbers in O(1)". – Charles Sep 26 '10 at 16:23

-

1@Charles: Fair enough, although nobody ever said it did and the the question elaborates pretty well on what was desired which should prevent confusion although I guess the title could be updated to better reflect the question that was actually asked and answered. – Robert Gamble Sep 26 '10 at 17:19

-

@Robert: Yep. (In fairness, I didn't claim that anyone claimed this was O(1) -- I just pointed out the complexity, since it hadn't been mentioned up to that point.) – Charles Sep 26 '10 at 17:23

-

1@Charles/Robert - assuming you are dealing with fixed size 32-bit machine integers (which is a reasonable assumption given the question and scales of integers discussed) then you only need at most 32 random bits per sample. Generating that is an O(1) operation, hence this should be regarded as an O(1) algorithm for practical (as opposed to theoretical) purposes. – mikera Sep 27 '10 at 11:18

-

3@mikera: Agreed, although technically if you're using fixed-size integers the whole list can be generated in O(1) (with a large constant, viz. 2^32). Also, for practical purposes, the definition of "random" is important -- if you really want to use your system's entropy pool, the limit is the computation of the random bits rather than calculations themselves, and in that case n log n is relevant again. But in the likely case that you'll use (the equivalent of) /dev/urandom rather than /dev/random, you're back to 'practically' O(n). – Charles Sep 27 '10 at 14:25

-

4I'm a little confused, wouldn't the fact that you have to perform `N` iterations (11 in this example) to get the desired result each time mean it's `O(n)`? As you need to to do `N` iterations to get `N!` combinations from the same initial state, otherwise your output will only be one of N states. – Seph Dec 04 '11 at 08:13

-

1

-

It's completely unnecessary to perform all the _"11 iterations"_ before starting over. So, the method is strictly inferior to Fisher-Yates with no gain. Just the thing Jeff warned about in his blog [as pointed out by pro](http://stackoverflow.com/questions/196017/unique-non-repeating-random-numbers-in-o1#comment233862_196065). – ivan_pozdeev Sep 07 '16 at 21:35

You can do this:

- Create a list, 0..1000.

- Shuffle the list. (See Fisher-Yates shuffle for a good way to do this.)

- Return numbers in order from the shuffled list.

So this doesn't require a search of old values each time, but it still requires O(N) for the initial shuffle. But as Nils pointed out in comments, this is amortised O(1).

- 219,335

- 46

- 382

- 435

-

I disagree that it's amortized. The total algorithm is O(N) because of the shuffling. I guess you could say it's O(.001N) because each value only consumes 1/1000th of a O(N) shuffle, but that's still O(N) (albeit with a tiny coefficient). – Kirk Strauser Oct 14 '08 at 18:29

-

5@Just Some Guy N = 1000, so you are saying that it is O(N/N) which is O(1) – Guvante Oct 22 '08 at 08:40

-

1If each insert into the shuffled array is an operation, then after inserting 1 value, you can get 1 random value. 2 for 2 values, and so on, n for n values. It takes n operations to generate the list, so the entire algorithm is O(n). If you need 1,000,000 random values, it will take 1,000,000 ops – Kibbee Jan 03 '09 at 18:45

-

3Think about it this way, if it was constant time, it would take the same amount of time for 10 random numbers as it would for 10 billion. But due to the shuffling taking O(n), we know this is not true. – Kibbee Jan 03 '09 at 18:47

-

Any method which requires you to initialise an array of size N with values is going to have an O(N) initialisation cost; moving the shuffle to the initialise or doing one iteration of the shuffle per request doesn't make any difference. – Pete Kirkham Jan 19 '09 at 20:48

-

1This actually takes amortized time O(log n), since you need to generate n lg n random bits. – Charles Sep 24 '10 at 20:03

-

1I've been tempted for years to amend @AdamRosenfield's edit to say "amortised" instead of "amortized", but I can't bring myself to make such a small edit with no other changes, especially since said edit is 5 years old. Still, I should at least state so for the record. – C. K. Young Dec 19 '13 at 16:13

-

2And now, I have all the justification to do it! http://meta.stackoverflow.com/q/252503/13 – C. K. Young May 08 '14 at 12:59

Use a Maximal Linear Feedback Shift Register.

It's implementable in a few lines of C and at runtime does little more than a couple test/branches, a little addition and bit shifting. It's not random, but it fools most people.

- 48,267

- 11

- 78

- 120

-

12"It's not random, but it fools most people". That applies to all pseudo-random number generators and all feasible answers to this question. But most people won't think about it. So omitting this note would maybe result in more upvotes... – f3lix Mar 18 '09 at 14:43

-

Why fake it when you can [do it correctly](http://stackoverflow.com/a/196065/111307)? – bobobobo Apr 18 '13 at 16:42

-

4

-

To save 4KB of RAM? For this particular problem, I see no reason to fake it. Other problems maybe. – bobobobo Apr 20 '13 at 17:03

-

3

-

2Using that method, how do you generate numbers let's say between 0 and 800000 ? Some might use a LFSR which period is 1048575 (2^20 - 1) and get next one if number is out of range but this won't be efficient. – tigrou Jul 04 '16 at 09:35

-

1As an LFSR, this doesn't produce uniformly distributed _sequences:_ the entire sequence that would be generated is defined by the first element. – ivan_pozdeev Sep 07 '16 at 22:19

You could use Format-Preserving Encryption to encrypt a counter. Your counter just goes from 0 upwards, and the encryption uses a key of your choice to turn it into a seemingly random value of whatever radix and width you want. E.g. for the example in this question: radix 10, width 3.

Block ciphers normally have a fixed block size of e.g. 64 or 128 bits. But Format-Preserving Encryption allows you to take a standard cipher like AES and make a smaller-width cipher, of whatever radix and width you want, with an algorithm which is still cryptographically robust.

It is guaranteed to never have collisions (because cryptographic algorithms create a 1:1 mapping). It is also reversible (a 2-way mapping), so you can take the resulting number and get back to the counter value you started with.

This technique doesn't need memory to store a shuffled array etc, which can be an advantage on systems with limited memory.

AES-FFX is one proposed standard method to achieve this. I've experimented with some basic Python code which is based on the AES-FFX idea, although not fully conformant--see Python code here. It can e.g. encrypt a counter to a random-looking 7-digit decimal number, or a 16-bit number. Here is an example of radix 10, width 3 (to give a number between 0 and 999 inclusive) as the question stated:

000 733

001 374

002 882

003 684

004 593

005 578

006 233

007 811

008 072

009 337

010 119

011 103

012 797

013 257

014 932

015 433

... ...

To get different non-repeating pseudo-random sequences, change the encryption key. Each encryption key produces a different non-repeating pseudo-random sequence.

- 41,871

- 30

- 130

- 181

-

1This is essentially a simple mapping, thus not any different from LCG and LFSR, with all the relevant kinks (e.g. values more than `k` apart in the sequence can never occur together). – ivan_pozdeev Sep 07 '16 at 21:54

-

@ivan_pozdeev: I'm having difficulty understanding the meaning of your comment. Can you explain what is wrong with this mapping, what are "all the relevant kinks", and what is `k`? – Craig McQueen Sep 07 '16 at 23:31

-

All the "encryption" effectively does here is replace the sequence `1,2,...,N` with a sequence of the same numbers in some other, but still constant, order. The numbers are then pulled from this sequence one by one. `k` is the number of values picked (the OP didn't specify a letter for it so I had to introduce one). – ivan_pozdeev Sep 08 '16 at 18:21

-

Since the sequence is constant and every number in it is unique, the combination returned is fully defined by the first number. Thus it's not fully random - only a small subset of possible combinations can be generated. – ivan_pozdeev Sep 08 '16 at 18:22

-

LFSRs and LCGs have the same property, so their other defects related to it also apply. – ivan_pozdeev Sep 08 '16 at 18:27

-

I see. However, in the case of format-preserving encryption, you can obtain a different "random" sequence for every chosen encryption key. WIth a 128-bit or 256-bit key, that gives you plenty of possible sequences. For any chosen key, the generated sequence is guaranteed to be the complete set of numbers effectively shuffled. – Craig McQueen Sep 08 '16 at 22:55

-

Regarding the "values more than `k` apart" consideration — you just pick a suitable radix `r` and width `w` to suit your needs to get `k = rʷ`, and then generate as many `n` values as you want, where `n ≤ k`. For example, in my answer I showed how to get 16 non-repeating numbers in the range 0..999. – Craig McQueen Sep 08 '16 at 23:01

-

Of course, the deficiencies of a constant-sequence PRNG (strong correlation between results) only manifest themselves when you use the same setup more than once. If you use it once and never again, it's random alright. But generating a new setup each time (randomly, with a better RNG!) pretty much defeats the purpose of building one's own PRNG. – ivan_pozdeev Sep 09 '16 at 22:24

-

That's because the "key" roughly equals to the same amount of "good" random numbers (must have `N!` possible values to cover all possible sequences) in addition to all the extra work. – ivan_pozdeev Sep 09 '16 at 22:31

-

3@ivan_pozdeev It's not the case that FPE must implement a specific static mapping, or that "the combination returned is fully defined by the first number". Since the configuration parameter is much larger than the size of the first number (which has only a thousand states), there should be multiple sequences that start with the same initial value and then proceed to different subsequent values. Any realistic generator is going to fail to cover the entire possible space of permutations; it's not worth raising that failure mode when the OP didn't ask for it. – sh1 Sep 11 '16 at 19:40

-

5+1. When implemented correctly, using a secure block cipher with a key chosen uniformly at random, the sequences generated using this method will be computationally indistinguishable from a true random shuffle. That is to say, there is no way to distinguish the output of this method from a true random shuffle significantly faster than by testing all possible block cipher keys and seeing if any of them generates the same output. For a cipher with a 128-bit keyspace, this is probably beyond the computing power currently available to mankind; with 256-bit keys, it will probably forever remain so. – Ilmari Karonen Sep 12 '16 at 08:04

-

@IlmariKaronen Still, the answer is incomplete without specifying that one needs a new random key for each permutation, some points on its required length and the "[doesn't generate all possible permutations but indistinguishable](http://stackoverflow.com/questions/196017/unique-non-repeating-random-numbers-in-o1/309447#comment66213987_3094476)" speech. – ivan_pozdeev Sep 12 '16 at 13:19

-

Maybe this is not exactly the asker wants, but it's what I'm looking for ;) – tactoth Aug 10 '17 at 22:26

-

I think this is a great idea. On the other hand, it is not clear to me that the resulting distribution (in the random variable sense) is uniform. For some applications at least, a uniform distribution of permutations (i.e. each permutation has equal probability) is desired/required. Also, if there're more permutations than keys, some are not generated. If there're more keys than permutations, some are repeated. – Pablo H Nov 25 '21 at 14:26

-

@PabloH Statistically it is a uniform distribution, as good-quality cryptographic algorithms are. – Craig McQueen Nov 26 '21 at 05:33

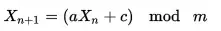

You could use A Linear Congruential Generator. Where m (the modulus) would be the nearest prime bigger than 1000. When you get a number out of the range, just get the next one. The sequence will only repeat once all elements have occurred, and you don't have to use a table. Be aware of the disadvantages of this generator though (including lack of randomness).

- 4,888

- 1

- 24

- 29

-

1

-

An LCG has high correlation between consecutive numbers, thus _combinations_ will not be quite random at large (e.g. numbers farther than `k` apart in the sequence can never occur together). – ivan_pozdeev Sep 07 '16 at 21:42

-

m should be the number of elements 1001 (1000 + 1 for zero) and you may use Next = (1002 * Current + 757) mod 1001; – Max Abramovich Dec 17 '16 at 04:03

I think that Linear congruential generator would be the simplest solution.

and there are only 3 restrictions on the a, c and m values

- m and c are relatively prime,

- a-1 is divisible by all prime factors of m

- a-1 is divisible by 4 if m is divisible by 4

PS the method was mentioned already but the post has a wrong assumptions about the constant values. The constants below should work fine for your case

In your case you may use a = 1002, c = 757, m = 1001

X = (1002 * X + 757) mod 1001

- 6,982

- 16

- 51

- 59

- 473

- 4

- 11

For low numbers like 0...1000, creating a list that contains all the numbers and shuffling it is straight forward. But if the set of numbers to draw from is very large there's another elegant way: You can build a pseudorandom permutation using a key and a cryptographic hash function. See the following C++-ish example pseudo code:

unsigned randperm(string key, unsigned bits, unsigned index) {

unsigned half1 = bits / 2;

unsigned half2 = (bits+1) / 2;

unsigned mask1 = (1 << half1) - 1;

unsigned mask2 = (1 << half2) - 1;

for (int round=0; round<5; ++round) {

unsigned temp = (index >> half1);

temp = (temp << 4) + round;

index ^= hash( key + "/" + int2str(temp) ) & mask1;

index = ((index & mask2) << half1) | ((index >> half2) & mask1);

}

return index;

}

Here, hash is just some arbitrary pseudo random function that maps a character string to a possibly huge unsigned integer. The function randperm is a permutation of all numbers within 0...pow(2,bits)-1 assuming a fixed key. This follows from the construction because every step that changes the variable index is reversible. This is inspired by a Feistel cipher.

- 27,611

- 3

- 75

- 95

-

Same as http://stackoverflow.com/a/16097246/648265, fails randomness for sequences just the same. – ivan_pozdeev Sep 11 '16 at 11:37

-

2@ivan_pozdeev: In theory, assuming infinite computing power, yes. However, assuming that `hash()`, as used in the code above, is a secure pseudorandom function, this construction will provably (Luby & Rackoff, 1988) yield a [pseudorandom permutation](https://en.wikipedia.org/wiki/Pseudorandom_permutation), which cannot be distinguished from a true random shuffle using significantly less effort than an exhaustive search of the entire key space, which is exponential in the key length. Even for reasonably sized keys (say, 128 bits), this is beyond the total computing power available on Earth. – Ilmari Karonen Sep 12 '16 at 08:22

-

(BTW, just to make this argument a bit more rigorous, I'd prefer to replace the ad hoc `hash( key + "/" + int2str(temp) )` construction above with [HMAC](https://en.wikipedia.org/wiki/Hash-based_message_authentication_code), whose security in turn can be provably reduced to that of the underlying hash compression function. Also, using HMAC might make it less likely for someone to mistakenly try to use this construction with an insecure non-crypto hash function.) – Ilmari Karonen Sep 12 '16 at 08:26

You may use my Xincrol algorithm described here:

http://openpatent.blogspot.co.il/2013/04/xincrol-unique-and-random-number.html

This is a pure algorithmic method of generating random but unique numbers without arrays, lists, permutations or heavy CPU load.

Latest version allows also to set the range of numbers, For example, if I want unique random numbers in range of 0-1073741821.

I've practically used it for

- MP3 player which plays every song randomly, but only once per album/directory

- Pixel wise video frames dissolving effect (fast and smooth)

- Creating a secret "noise" fog over image for signatures and markers (steganography)

- Data Object IDs for serialization of huge amount of Java objects via Databases

- Triple Majority memory bits protection

- Address+value encryption (every byte is not just only encrypted but also moved to a new encrypted location in buffer). This really made cryptanalysis fellows mad on me :-)

- Plain Text to Plain Like Crypt Text encryption for SMS, emails etc.

- My Texas Hold`em Poker Calculator (THC)

- Several of my games for simulations, "shuffling", ranking

- more

It is open, free. Give it a try...

- 172

- 2

- 1

-

Could that method work for a decimal value, e.g. scrambling a 3-digit decimal counter to always have a 3-digit decimal result? – Craig McQueen Aug 14 '16 at 03:21

-

As an example of [Xorshift](https://en.wikipedia.org/wiki/Xorshift) algorithm, it's an LFSR, with all related kinks (e.g. values more than `k` apart in the sequence can never occur together). – ivan_pozdeev Sep 07 '16 at 21:56

You don't even need an array to solve this one.

You need a bitmask and a counter.

Initialize the counter to zero and increment it on successive calls. XOR the counter with the bitmask (randomly selected at startup, or fixed) to generate a psuedorandom number. If you can't have numbers that exceed 1000, don't use a bitmask wider than 9 bits. (In other words, the bitmask is an integer not above 511.)

Make sure that when the counter passes 1000, you reset it to zero. At this time you can select another random bitmask — if you like — to produce the same set of numbers in a different order.

- 1,044

- 10

- 19

-

2

-

"bitmask" within 512...1023 is OK, too. For a little more fake randomness see my answer. :-) – sellibitze Jun 22 '10 at 15:35

-

Essentially equivalent to http://stackoverflow.com/a/16097246/648265, also fails randomness for sequences. – ivan_pozdeev Sep 07 '16 at 22:08

Here's some code I typed up that uses the logic of the first solution. I know this is "language agnostic" but just wanted to present this as an example in C# in case anyone is looking for a quick practical solution.

// Initialize variables

Random RandomClass = new Random();

int RandArrayNum;

int MaxNumber = 10;

int LastNumInArray;

int PickedNumInArray;

int[] OrderedArray = new int[MaxNumber]; // Ordered Array - set

int[] ShuffledArray = new int[MaxNumber]; // Shuffled Array - not set

// Populate the Ordered Array

for (int i = 0; i < MaxNumber; i++)

{

OrderedArray[i] = i;

listBox1.Items.Add(OrderedArray[i]);

}

// Execute the Shuffle

for (int i = MaxNumber - 1; i > 0; i--)

{

RandArrayNum = RandomClass.Next(i + 1); // Save random #

ShuffledArray[i] = OrderedArray[RandArrayNum]; // Populting the array in reverse

LastNumInArray = OrderedArray[i]; // Save Last Number in Test array

PickedNumInArray = OrderedArray[RandArrayNum]; // Save Picked Random #

OrderedArray[i] = PickedNumInArray; // The number is now moved to the back end

OrderedArray[RandArrayNum] = LastNumInArray; // The picked number is moved into position

}

for (int i = 0; i < MaxNumber; i++)

{

listBox2.Items.Add(ShuffledArray[i]);

}

- 4,142

- 2

- 31

- 40

- 3,573

- 10

- 34

- 51

The question How do you efficiently generate a list of K non-repeating integers between 0 and an upper bound N is linked as a duplicate - and if you want something that is O(1) per generated random number (with no O(n) startup cost)) there is a simple tweak of the accepted answer.

Create an empty unordered map (an empty ordered map will take O(log k) per element) from integer to integer - instead of using an initialized array. Set max to 1000 if that is the maximum,

- Pick a random number, r, between 0 and max.

- Ensure that both map elements r and max exist in the unordered map. If they don't exist create them with a value equal to their index.

- Swap elements r and max

- Return element max and decrement max by 1 (if max goes negative you are done).

- Back to step 1.

The only difference compared with using an initialized array is that the initialization of elements is postponed/skipped - but it will generate the exact same numbers from the same PRNG.

- 11,123

- 15

- 38

This method results appropiate when the limit is high and you only want to generate a few random numbers.

#!/usr/bin/perl

($top, $n) = @ARGV; # generate $n integer numbers in [0, $top)

$last = -1;

for $i (0 .. $n-1) {

$range = $top - $n + $i - $last;

$r = 1 - rand(1.0)**(1 / ($n - $i));

$last += int($r * $range + 1);

print "$last ($r)\n";

}

Note that the numbers are generated in ascending order, but you can shuffle then afterwards.

- 9,943

- 4

- 29

- 57

-

Since this generates combinations rather than permutations, it's more appropriate for http://stackoverflow.com/questions/2394246/algorithm-to-select-a-single-random-combination-of-values – ivan_pozdeev Sep 11 '16 at 12:04

-

1Testing shows this has a bias towards lower numbers: the measured probabilities for 2M samples with `(top,n)=(100,10)` are : `(0.01047705, 0.01044825, 0.01041225, ..., 0.0088324, 0.008723, 0.00863635)`. I tested in Python, so slight differences in math might play a role here (I did make sure all operations for calculating `r` are floating-point). – ivan_pozdeev Sep 11 '16 at 12:24

-

Yes, in order for this method to work correctly, the upper limit must be much bigger than the number of values to be extracted. – salva Sep 12 '16 at 12:52

-

It won't work "correctly" even if _"the upper limit [is] much bigger than the number of values"_. The probabilities will still be uneven, just by a lesser margin. – ivan_pozdeev Dec 16 '17 at 02:56

public static int[] randN(int n, int min, int max)

{

if (max <= min)

throw new ArgumentException("Max need to be greater than Min");

if (max - min < n)

throw new ArgumentException("Range needs to be longer than N");

var r = new Random();

HashSet<int> set = new HashSet<int>();

while (set.Count < n)

{

var i = r.Next(max - min) + min;

if (!set.Contains(i))

set.Add(i);

}

return set.ToArray();

}

N Non Repeating random numbers will be of O(n) complexity, as required.

Note: Random should be static with thread safety applied.

- 4,142

- 2

- 31

- 40

- 794

- 4

- 9

-

O(n^2), as the number of retries is proportional on average to the number of elements selected so far. – ivan_pozdeev Sep 07 '16 at 22:35

-

Think about it, if you select min=0 max=10000000 and N=5, retries ~=0 no matter how many selected. But yes you have a point that if max-min is small, o(N) breaks up. – Erez Robinson Sep 09 '16 at 10:15

-

If N<<(max-min) then it's still proportional, it's just the coefficient is very small. And coefficients don't matter for an asymptotic estimate. – ivan_pozdeev Sep 09 '16 at 22:04

-

This is not O(n). Each time the set contains the value this is and extra loop. – paparazzo Mar 01 '17 at 20:23

Here is some sample COBOL code you can play around with.

I can send you RANDGEN.exe file so you can play with it to see if it does want you want.

IDENTIFICATION DIVISION.

PROGRAM-ID. RANDGEN as "ConsoleApplication2.RANDGEN".

AUTHOR. Myron D Denson.

DATE-COMPILED.

* **************************************************************

* SUBROUTINE TO GENERATE RANDOM NUMBERS THAT ARE GREATER THAN

* ZERO AND LESS OR EQUAL TO THE RANDOM NUMBERS NEEDED WITH NO

* DUPLICATIONS. (CALL "RANDGEN" USING RANDGEN-AREA.)

*

* CALLING PROGRAM MUST HAVE A COMPARABLE LINKAGE SECTION

* AND SET 3 VARIABLES PRIOR TO THE FIRST CALL IN RANDGEN-AREA

*

* FORMULA CYCLES THROUGH EVERY NUMBER OF 2X2 ONLY ONCE.

* RANDOM-NUMBERS FROM 1 TO RANDOM-NUMBERS-NEEDED ARE CREATED

* AND PASSED BACK TO YOU.

*

* RULES TO USE RANDGEN:

*

* RANDOM-NUMBERS-NEEDED > ZERO

*

* COUNT-OF-ACCESSES MUST = ZERO FIRST TIME CALLED.

*

* RANDOM-NUMBER = ZERO, WILL BUILD A SEED FOR YOU

* WHEN COUNT-OF-ACCESSES IS ALSO = 0

*

* RANDOM-NUMBER NOT = ZERO, WILL BE NEXT SEED FOR RANDGEN

* (RANDOM-NUMBER MUST BE <= RANDOM-NUMBERS-NEEDED)

*

* YOU CAN PASS RANDGEN YOUR OWN RANDOM-NUMBER SEED

* THE FIRST TIME YOU USE RANDGEN.

*

* BY PLACING A NUMBER IN RANDOM-NUMBER FIELD

* THAT FOLLOWES THESE SIMPLE RULES:

* IF COUNT-OF-ACCESSES = ZERO AND

* RANDOM-NUMBER > ZERO AND

* RANDOM-NUMBER <= RANDOM-NUMBERS-NEEDED

*

* YOU CAN LET RANDGEN BUILD A SEED FOR YOU

*

* THAT FOLLOWES THESE SIMPLE RULES:

* IF COUNT-OF-ACCESSES = ZERO AND

* RANDOM-NUMBER = ZERO AND

* RANDOM-NUMBER-NEEDED > ZERO

*

* TO INSURING A DIFFERENT PATTERN OF RANDOM NUMBERS

* A LOW-RANGE AND HIGH-RANGE IS USED TO BUILD

* RANDOM NUMBERS.

* COMPUTE LOW-RANGE =

* ((SECONDS * HOURS * MINUTES * MS) / 3).

* A HIGH-RANGE = RANDOM-NUMBERS-NEEDED + LOW-RANGE

* AFTER RANDOM-NUMBER-BUILT IS CREATED

* AND IS BETWEEN LOW AND HIGH RANGE

* RANDUM-NUMBER = RANDOM-NUMBER-BUILT - LOW-RANGE

*

* **************************************************************

ENVIRONMENT DIVISION.

INPUT-OUTPUT SECTION.

FILE-CONTROL.

DATA DIVISION.

FILE SECTION.

WORKING-STORAGE SECTION.

01 WORK-AREA.

05 X2-POWER PIC 9 VALUE 2.

05 2X2 PIC 9(12) VALUE 2 COMP-3.

05 RANDOM-NUMBER-BUILT PIC 9(12) COMP.

05 FIRST-PART PIC 9(12) COMP.

05 WORKING-NUMBER PIC 9(12) COMP.

05 LOW-RANGE PIC 9(12) VALUE ZERO.

05 HIGH-RANGE PIC 9(12) VALUE ZERO.

05 YOU-PROVIDE-SEED PIC X VALUE SPACE.

05 RUN-AGAIN PIC X VALUE SPACE.

05 PAUSE-FOR-A-SECOND PIC X VALUE SPACE.

01 SEED-TIME.

05 HOURS PIC 99.

05 MINUTES PIC 99.

05 SECONDS PIC 99.

05 MS PIC 99.

*

* LINKAGE SECTION.

* Not used during testing

01 RANDGEN-AREA.

05 COUNT-OF-ACCESSES PIC 9(12) VALUE ZERO.

05 RANDOM-NUMBERS-NEEDED PIC 9(12) VALUE ZERO.

05 RANDOM-NUMBER PIC 9(12) VALUE ZERO.

05 RANDOM-MSG PIC X(60) VALUE SPACE.

*

* PROCEDURE DIVISION USING RANDGEN-AREA.

* Not used during testing

*

PROCEDURE DIVISION.

100-RANDGEN-EDIT-HOUSEKEEPING.

MOVE SPACE TO RANDOM-MSG.

IF RANDOM-NUMBERS-NEEDED = ZERO

DISPLAY 'RANDOM-NUMBERS-NEEDED ' NO ADVANCING

ACCEPT RANDOM-NUMBERS-NEEDED.

IF RANDOM-NUMBERS-NEEDED NOT NUMERIC

MOVE 'RANDOM-NUMBERS-NEEDED NOT NUMERIC' TO RANDOM-MSG

GO TO 900-EXIT-RANDGEN.

IF RANDOM-NUMBERS-NEEDED = ZERO

MOVE 'RANDOM-NUMBERS-NEEDED = ZERO' TO RANDOM-MSG

GO TO 900-EXIT-RANDGEN.

IF COUNT-OF-ACCESSES NOT NUMERIC

MOVE 'COUNT-OF-ACCESSES NOT NUMERIC' TO RANDOM-MSG

GO TO 900-EXIT-RANDGEN.

IF COUNT-OF-ACCESSES GREATER THAN RANDOM-NUMBERS-NEEDED

MOVE 'COUNT-OF-ACCESSES > THAT RANDOM-NUMBERS-NEEDED'

TO RANDOM-MSG

GO TO 900-EXIT-RANDGEN.

IF YOU-PROVIDE-SEED = SPACE AND RANDOM-NUMBER = ZERO

DISPLAY 'DO YOU WANT TO PROVIDE SEED Y OR N: '

NO ADVANCING

ACCEPT YOU-PROVIDE-SEED.

IF RANDOM-NUMBER = ZERO AND

(YOU-PROVIDE-SEED = 'Y' OR 'y')

DISPLAY 'ENTER SEED ' NO ADVANCING

ACCEPT RANDOM-NUMBER.

IF RANDOM-NUMBER NOT NUMERIC

MOVE 'RANDOM-NUMBER NOT NUMERIC' TO RANDOM-MSG

GO TO 900-EXIT-RANDGEN.

200-RANDGEN-DATA-HOUSEKEEPING.

MOVE FUNCTION CURRENT-DATE (9:8) TO SEED-TIME.

IF COUNT-OF-ACCESSES = ZERO

COMPUTE LOW-RANGE =

((SECONDS * HOURS * MINUTES * MS) / 3).

COMPUTE RANDOM-NUMBER-BUILT = RANDOM-NUMBER + LOW-RANGE.

COMPUTE HIGH-RANGE = RANDOM-NUMBERS-NEEDED + LOW-RANGE.

MOVE X2-POWER TO 2X2.

300-SET-2X2-DIVISOR.

IF 2X2 < (HIGH-RANGE + 1)

COMPUTE 2X2 = 2X2 * X2-POWER

GO TO 300-SET-2X2-DIVISOR.

* *********************************************************

* IF FIRST TIME THROUGH AND YOU WANT TO BUILD A SEED. *

* *********************************************************

IF COUNT-OF-ACCESSES = ZERO AND RANDOM-NUMBER = ZERO

COMPUTE RANDOM-NUMBER-BUILT =

((SECONDS * HOURS * MINUTES * MS) + HIGH-RANGE).

IF COUNT-OF-ACCESSES = ZERO

DISPLAY 'SEED TIME ' SEED-TIME

' RANDOM-NUMBER-BUILT ' RANDOM-NUMBER-BUILT

' LOW-RANGE ' LOW-RANGE.

* *********************************************

* END OF BUILDING A SEED IF YOU WANTED TO *

* *********************************************

* ***************************************************

* THIS PROCESS IS WHERE THE RANDOM-NUMBER IS BUILT *

* ***************************************************

400-RANDGEN-FORMULA.

COMPUTE FIRST-PART = (5 * RANDOM-NUMBER-BUILT) + 7.

DIVIDE FIRST-PART BY 2X2 GIVING WORKING-NUMBER

REMAINDER RANDOM-NUMBER-BUILT.

IF RANDOM-NUMBER-BUILT > LOW-RANGE AND

RANDOM-NUMBER-BUILT < (HIGH-RANGE + 1)

GO TO 600-RANDGEN-CLEANUP.

GO TO 400-RANDGEN-FORMULA.

* *********************************************

* GOOD RANDOM NUMBER HAS BEEN BUILT *

* *********************************************

600-RANDGEN-CLEANUP.

ADD 1 TO COUNT-OF-ACCESSES.

COMPUTE RANDOM-NUMBER =

RANDOM-NUMBER-BUILT - LOW-RANGE.

* *******************************************************

* THE NEXT 3 LINE OF CODE ARE FOR TESTING ON CONSOLE *

* *******************************************************

DISPLAY RANDOM-NUMBER.

IF COUNT-OF-ACCESSES < RANDOM-NUMBERS-NEEDED

GO TO 100-RANDGEN-EDIT-HOUSEKEEPING.

900-EXIT-RANDGEN.

IF RANDOM-MSG NOT = SPACE

DISPLAY 'RANDOM-MSG: ' RANDOM-MSG.

MOVE ZERO TO COUNT-OF-ACCESSES RANDOM-NUMBERS-NEEDED RANDOM-NUMBER.

MOVE SPACE TO YOU-PROVIDE-SEED RUN-AGAIN.

DISPLAY 'RUN AGAIN Y OR N '

NO ADVANCING.

ACCEPT RUN-AGAIN.

IF (RUN-AGAIN = 'Y' OR 'y')

GO TO 100-RANDGEN-EDIT-HOUSEKEEPING.

ACCEPT PAUSE-FOR-A-SECOND.

GOBACK.

- 29

- 2

-

1I have no idea if this can actually meet the OPs needs, but props for a COBOL contribution! – Mac May 27 '18 at 16:53

Let's say you want to go over shuffled lists over and over, without having the O(n) delay each time you start over to shuffle it again, in that case we can do this:

Create 2 lists A and B, with 0 to 1000, takes

2nspace.Shuffle list A using Fisher-Yates, takes

ntime.When drawing a number, do 1-step Fisher-Yates shuffle on the other list.

When cursor is at list end, switch to the other list.

Preprocess

cursor = 0

selector = A

other = B

shuffle(A)

Draw

temp = selector[cursor]

swap(other[cursor], other[random])

if cursor == N

then swap(selector, other); cursor = 0

else cursor = cursor + 1

return temp

- 5,828

- 1

- 33

- 51

-

It's not necessary to keep 2 lists - _or_ exhaust a list before staring over. Fisher-Yates gives uniformly random results from any initial state. See http://stackoverflow.com/a/158742/648265 for explanation. – ivan_pozdeev Sep 07 '16 at 22:36

-

@ivan_pozdeev Yes, it's the same result, but my idea here is to make it amortized O(1) by making the shuffle part of the drawing action. – Khaled.K Sep 08 '16 at 07:15

-

You didn't understand. You _don't need to reset the list at all_ before shuffling again. Shuffling `[1,3,4,5,2]` will produce the same result as shuffling `[1,2,3,4,5]`. – ivan_pozdeev Sep 08 '16 at 18:08

You could use a good pseudo-random number generator with 10 bits and throw away 1001 to 1023 leaving 0 to 1000.

From here we get the design for a 10 bit PRNG..

10 bits, feedback polynomial x^10 + x^7 + 1 (period 1023)

use a Galois LFSR to get fast code

- 953

- 2

- 9

- 24

-

@Phob No that won't happen, because a 10 bit PRNG based on a Linear Feedback Shift Register is typically made from a construct that assumes all values (except one) once, before returning to the first value. In other words, it will only pick 1001 exactly once during a cycle. – Nuoji Mar 22 '13 at 23:38

-

1@Phob the whole point of this question is to select each number exactly once. And then you complain that 1001 won't occur twice in a row? A LFSR with an optimal spread will traverse all numbers in its space in a pseudo random fashion, then restart the cycle. In other words, it is not used as a usual random function. When used as a random, we typically only use a subset of the bits. Read a bit about it and it'll soon make sense. – Nuoji Apr 19 '13 at 13:00

-

1The only problem is that a given LFSR has only one sequence, thus giving strong correlation between the picked numbers - in particular, not generating every possible combination. – ivan_pozdeev Sep 08 '16 at 18:32

Another posibility:

You can use an array of flags. And take the next one when it;s already chosen.

But, beware after 1000 calls, the function will never end so you must make a safeguard.

- 52,876

- 38

- 145

- 202

-

This one is O(k^2), what with a number of additional steps proportional on average to the number of values selected so far. – ivan_pozdeev Sep 07 '16 at 22:04

Most of the answers here fail to guarantee that they won't return the same number twice. Here's a correct solution:

int nrrand(void) {

static int s = 1;

static int start = -1;

do {

s = (s * 1103515245 + 12345) & 1023;

} while (s >= 1001);

if (start < 0) start = s;

else if (s == start) abort();

return s;

}

I'm not sure that the constraint is well specified. One assumes that after 1000 other outputs a value is allowed to repeat, but that naively allows 0 to follow immediately after 0 so long as they both appear at the end and start of sets of 1000. Conversely, while it's possible to keep a distance of 1000 other values between repetitions, doing so forces a situation where the sequence replays itself in exactly the same way every time because there's no other value that has occurred outside of that limit.

Here's a method that always guarantees at least 500 other values before a value can be repeated:

int nrrand(void) {

static int h[1001];

static int n = -1;

if (n < 0) {

int s = 1;

for (int i = 0; i < 1001; i++) {

do {

s = (s * 1103515245 + 12345) & 1023;

} while (s >= 1001);

/* If we used `i` rather than `s` then our early results would be poorly distributed. */

h[i] = s;

}

n = 0;

}

int i = rand(500);

if (i != 0) {

i = (n + i) % 1001;

int t = h[i];

h[i] = h[n];

h[n] = t;

}

i = h[n];

n = (n + 1) % 1001;

return i;

}

- 4,324

- 17

- 30

-

This is an LCG, like http://stackoverflow.com/a/196164/648265, non-random for sequences as well as other related kinks just the same. – ivan_pozdeev Sep 11 '16 at 12:45

-

@ivan_pozdeev mine's better than an LCG because it ensures that it won't return a duplicate on the 1001st call. – sh1 Sep 11 '16 at 17:57

When N is greater than 1000 and you need to draw K random samples you could use a set that contains the samples so far. For each draw you use rejection sampling, which will be an "almost" O(1) operation, so the total running time is nearly O(K) with O(N) storage.

This algorithm runs into collisions when K is "near" N. This means that running time will be a lot worse than O(K). A simple fix is to reverse the logic so that, for K > N/2, you keep a record of all the samples that have not been drawn yet. Each draw removes a sample from the rejection set.

The other obvious problem with rejection sampling is that it is O(N) storage, which is bad news if N is in the billions or more. However, there is an algorithm that solves that problem. This algorithm is called Vitter's algorithm after it's inventor. The algorithm is described here. The gist of Vitter's algorithm is that after each draw, you compute a random skip using a certain distribution which guarantees uniform sampling.

- 1,396

- 1

- 15

- 21

-

Guys, please! The Fisher-Yates method is broken. You select the first one with probability 1/N and the second one with probability 1/(N-1) != 1/N. This is a biased sampling method! You really need the Vittter's algorithm to resolve the bias. – Emanuel Landeholm Mar 07 '19 at 12:17

for i from n−1 downto 1 do

j ← random integer such that 0 ≤ j ≤ i

exchange a[j] and a[i]

It is actually O(n-1) as you only need one swap for the last two

This is C#

public static List<int> FisherYates(int n)

{

List<int> list = new List<int>(Enumerable.Range(0, n));

Random rand = new Random();

int swap;

int temp;

for (int i = n - 1; i > 0; i--)

{

swap = rand.Next(i + 1); //.net rand is not inclusive

if(swap != i) // it can stay in place - if you force a move it is not a uniform shuffle

{

temp = list[i];

list[i] = list[swap];

list[swap] = temp;

}

}

return list;

}

- 44,497

- 23

- 105

- 176

-

There is already an answer with this but it is fairly long winded and does not recognize you can stop at 1 (not 0) – paparazzo Mar 01 '17 at 20:43

Please see my answer at https://stackoverflow.com/a/46807110/8794687

It is one of the simplest algorithms that have average time complexity O(s log s), s denoting the sample size. There are also some links there to hash table algorithms who's complexity is claimed to be O(s).

- 61

- 7

Someone posted "creating random numbers in excel". I am using this ideal. Create a structure with 2 parts, str.index and str.ran; For 10 random numbers create an array of 10 structures. Set the str.index from 0 to 9 and str.ran to different random number.

for(i=0;i<10; ++i) {

arr[i].index = i;

arr[i].ran = rand();

}

Sort the array on the values in arr[i].ran. The str.index is now in a random order. Below is c code:

#include <stdio.h>

#include <stdlib.h>

struct RanStr { int index; int ran;};

struct RanStr arr[10];

int sort_function(const void *a, const void *b);

int main(int argc, char *argv[])

{

int cnt, i;

//seed(125);

for(i=0;i<10; ++i)

{

arr[i].ran = rand();

arr[i].index = i;

printf("arr[%d] Initial Order=%2d, random=%d\n", i, arr[i].index, arr[i].ran);

}

qsort( (void *)arr, 10, sizeof(arr[0]), sort_function);

printf("\n===================\n");

for(i=0;i<10; ++i)

{

printf("arr[%d] Random Order=%2d, random=%d\n", i, arr[i].index, arr[i].ran);

}

return 0;

}

int sort_function(const void *a, const void *b)

{

struct RanStr *a1, *b1;

a1=(struct RanStr *) a;

b1=(struct RanStr *) b;

return( a1->ran - b1->ran );

}

- 7,189

- 7

- 22

- 49

- 39

- 2