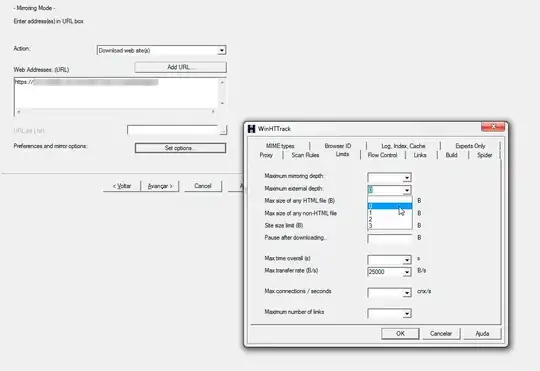

I am trying to use HTTrack (http://www.httrack.com/) to download a single page, not the entire site. For example, when pointed at www.google.com it should download only the HTML served at www.google.com, along with the stylesheets, images and JavaScript that page uses. It should not follow any links to images.google.com, labs.google.com, www.google.com/subdir/ and so on.

I tried the -w option but that did not make any difference.

What would be the right command?

EDIT

I tried using `httrack "http://www.google.com/" -O "./www.google.com" "http://www.google.com/" -v -s0 --depth=1` but then it won't copy any images.

What I basically want is to download just the index file of that domain along with all of its assets, but not the content of any external or internal links.
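
To make the distinction concrete, here is a rough Python sketch of what I mean by "assets" versus "links". It is not HTTrack and not a replacement for it. It only lists the URLs I would expect to be fetched for one page: image and script sources and stylesheet references, while ignoring `<a href>` links. The names `AssetCollector` and `collect_assets` are made up for this illustration, and the tag list is an assumption that is certainly incomplete (for example, it ignores images referenced from CSS).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class AssetCollector(HTMLParser):
    """Collect URLs of page assets, ignoring navigational links (<a href>)."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.assets = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("img", "script") and attrs.get("src"):
            # Images and scripts are referenced through their src attribute.
            self.assets.append(urljoin(self.base_url, attrs["src"]))
        elif tag == "link" and attrs.get("href"):
            # Only <link rel="stylesheet"> counts as an asset here.
            rel = (attrs.get("rel") or "").lower().split()
            if "stylesheet" in rel:
                self.assets.append(urljoin(self.base_url, attrs["href"]))
        # <a href="..."> is deliberately not handled: links are not followed.


def collect_assets(html, base_url):
    """Return absolute asset URLs found in html, in document order."""
    parser = AssetCollector(base_url)
    parser.feed(html)
    return parser.assets
```

So for a page at http://www.example.com/ that references `/style.css`, `app.js` and an image on another host, all three would be listed, but a link to `/subdir/` would not. That is the behaviour I am trying to get out of HTTrack.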