Does anyone have information on how Protocol Buffers performs compared with BSON (binary JSON), or with JSON in general? I'm mainly interested in:

- Wire size

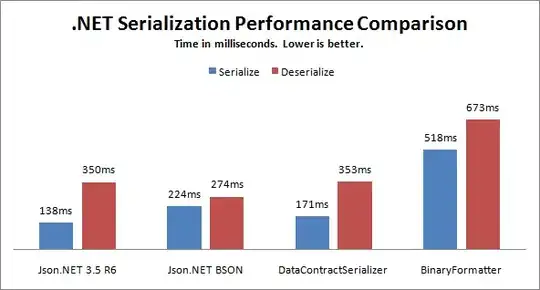

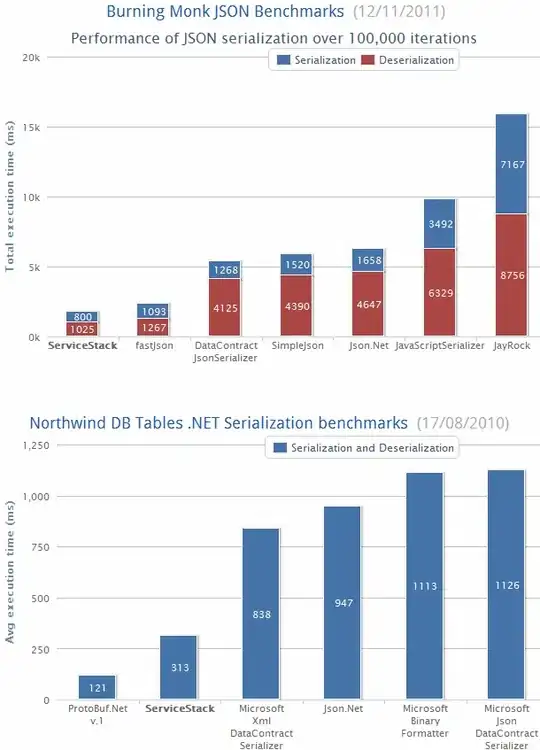

- Serialization speed

- Deserialization speed

Protocol Buffers and BSON both seem like good binary formats for use over HTTP. I'm wondering which would be the better long-term choice in a C# environment.

Here's some info that I was reading on BSON and Protocol Buffers.