One case where this makes a difference: over-declared column widths can prevent a performance optimization that avoids adding row versioning information to tables with AFTER triggers.

This is covered by Paul White here

> The actual size of the data stored is immaterial – it is the potential size that matters.
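
Because it is the declared width that counts, it can help to see which tables could, on paper, exceed the in-row limit. The query below is a rough sketch only: it sums `sys.columns.max_length` per table, ignores row overhead, and treats `(max)` columns (reported as `-1`) separately. The 8,060-byte threshold is used here as an approximation, not as the exact rule the optimization applies.

```sql
-- Rough sketch: tables whose declared column widths could exceed 8,060 bytes,
-- or that contain a (max) column. Row overhead is deliberately ignored.
SELECT
    OBJECT_SCHEMA_NAME(c.object_id) AS schema_name,
    OBJECT_NAME(c.object_id)        AS table_name,
    -- max_length is -1 for (max) and xml columns, so count those as 0 here
    SUM(CASE WHEN c.max_length = -1 THEN 0 ELSE c.max_length END) AS declared_bytes,
    MAX(CASE WHEN c.max_length = -1 THEN 1 ELSE 0 END)            AS has_max_column
FROM sys.columns AS c
JOIN sys.tables  AS t
    ON t.object_id = c.object_id
GROUP BY c.object_id
HAVING SUM(CASE WHEN c.max_length = -1 THEN 0 ELSE c.max_length END) > 8060
    OR MAX(CASE WHEN c.max_length = -1 THEN 1 ELSE 0 END) = 1
ORDER BY declared_bytes DESC;
```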

Similarly, with memory-optimised tables it has been possible since SQL Server 2016 to use LOB columns, or combinations of column widths that could potentially exceed the in-row limit, but with a penalty.

(Max) columns are always stored off-row. For other columns, if the data row size in the table definition can exceed 8,060 bytes, SQL Server pushes largest variable-length column(s) off-row. Again, it does not depend on amount of the data you store there.

This can have a large negative effect on memory consumption and performance
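
To check whether any columns of a memory-optimised table have ended up off-row, the catalog view `sys.memory_optimized_tables_internal_attributes` can be queried. This is a minimal sketch along the lines of the pattern in the SQL Server documentation; it assumes SQL Server 2016 or later and a database that contains memory-optimised tables.

```sql
-- List columns of memory-optimised tables that are stored off-row.
-- type = 5 corresponds to type_desc 'INTERNAL OFF-ROW DATA TABLE';
-- for those rows, minor_id holds the column_id of the off-row column.
SELECT
    QUOTENAME(SCHEMA_NAME(o.schema_id)) + N'.' + QUOTENAME(o.name) AS table_name,
    c.name       AS column_name,
    c.max_length AS declared_max_length  -- -1 means a (max) column
FROM sys.memory_optimized_tables_internal_attributes AS moa
JOIN sys.columns AS c
    ON c.object_id = moa.object_id
   AND c.column_id = moa.minor_id
JOIN sys.objects AS o
    ON o.object_id = moa.object_id
WHERE moa.type = 5;
```

An empty result means no column is currently stored off-row.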

Another case where over-declaring column widths can make a big difference is if the table will ever be processed using SSIS. The memory allocated for variable-length (non-BLOB) columns is fixed for each row in an execution tree and is based on the columns' declared maximum length, which can lead to inefficient use of memory buffers (example). Whilst the SSIS package developer can declare a smaller column size than the source, this analysis is best done up front and enforced there.
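
One way a package developer might present a narrower column to SSIS is to cast it in the source query. The sketch below uses a hypothetical table `dbo.WideSource` and `sys.dm_exec_describe_first_result_set` to show the metadata a client would see; it is an illustration of the idea, not a recommendation over fixing the declared width in the table itself.

```sql
USE tempdb;

-- Hypothetical source table, over-declared on purpose.
CREATE TABLE dbo.WideSource (id int, name8000 varchar(8000));

-- Inspect the column metadata a client would receive for the narrowed query.
SELECT name, system_type_name, max_length
FROM sys.dm_exec_describe_first_result_set(
    N'SELECT id, CAST(name8000 AS varchar(500)) AS name500 FROM dbo.WideSource;',
    NULL, 0);

DROP TABLE dbo.WideSource;
```

Note that `CAST` silently truncates values longer than the target width, so the narrower size has to be justified by the data.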

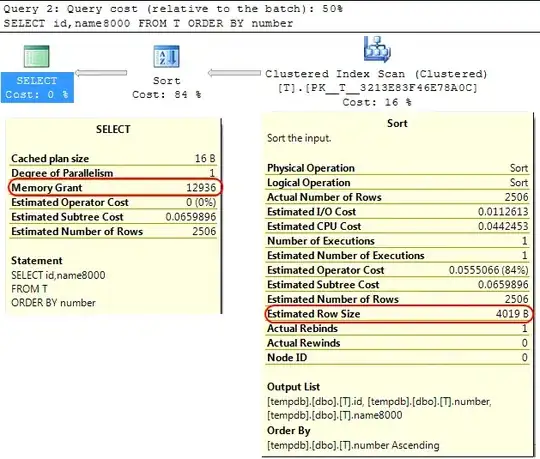

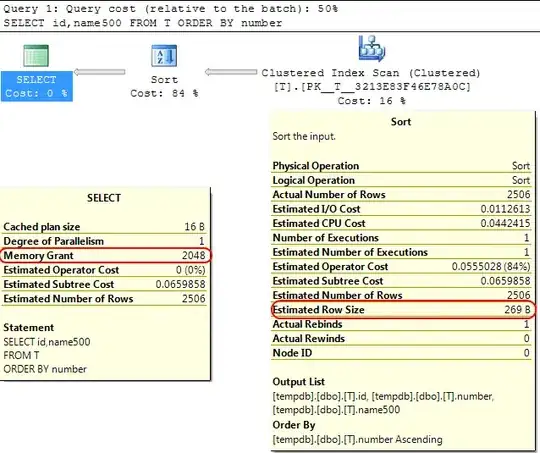

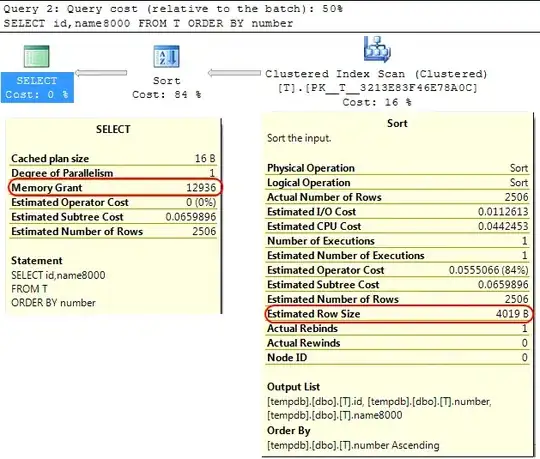

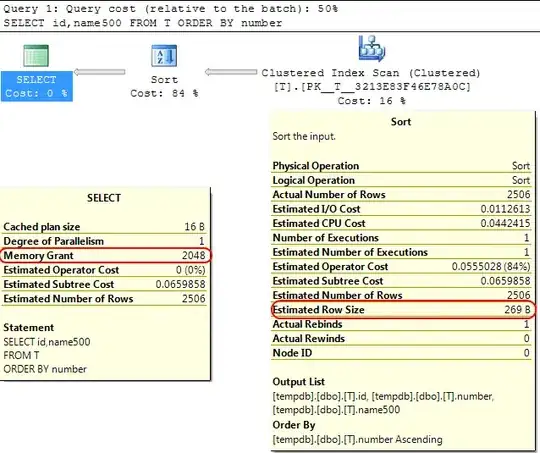

Back in the SQL Server engine itself, a similar case arises when calculating the memory grant for SORT operations: SQL Server assumes that varchar(x) columns will, on average, consume x/2 bytes.

If most of your varchar columns are fuller than that, sort operations can spill to tempdb.

In your case, if your varchar columns are declared as 8000 bytes but actually hold much less than that, your query will be allocated memory that it doesn't need, which is inefficient and can lead to waits for memory grants.
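
To make the x/2 assumption concrete, the sketch below compares the average number of bytes actually stored with the half-width figure described above. The temp table `#WidthDemo` and its column names are hypothetical; `master..spt_values` is used only as a convenient source of short strings.

```sql
-- Hypothetical demo table: same short strings in a wide and a narrow column.
CREATE TABLE #WidthDemo (val8000 varchar(8000), val500 varchar(500));

INSERT INTO #WidthDemo (val8000, val500)
SELECT name, name
FROM master..spt_values
WHERE name IS NOT NULL;

SELECT
    AVG(CAST(DATALENGTH(val8000) AS decimal(10, 2))) AS avg_actual_bytes,
    8000 / 2 AS assumed_bytes_varchar8000,  -- half the declared width
    500 / 2  AS assumed_bytes_varchar500
FROM #WidthDemo;

DROP TABLE #WidthDemo;
```

The larger the gap between the assumed and actual figures, the more memory is requested without being used.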

This is covered in Part 2 of SQL Workshops Webcast 1, downloadable from here, or see below.

```sql
USE tempdb;

CREATE TABLE T(
    id INT IDENTITY(1,1) PRIMARY KEY,
    number INT,
    name8000 VARCHAR(8000),
    name500 VARCHAR(500));

INSERT INTO T (number, name8000, name500)
SELECT number, name, name /*<--Same contents in both cols*/
FROM master..spt_values;

SELECT id, name500
FROM T
ORDER BY number;

SELECT id, name8000
FROM T
ORDER BY number;
```
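
After running the two `SELECT` statements, one way to compare their memory grants is to look at the plan cache. This is a sketch under a few assumptions: the build exposes `last_grant_kb` and `last_used_grant_kb` in `sys.dm_exec_query_stats` (SQL Server 2016 and some later service packs of earlier versions), the plans are still cached, and the login has permission to view server state. The actual execution plan also shows the grant for each query.

```sql
-- Compare the memory granted to, and used by, the two ORDER BY queries.
SELECT
    -- Offsets are in bytes of Unicode text, hence the division by 2.
    SUBSTRING(st.text,
              qs.statement_start_offset / 2 + 1,
              (CASE qs.statement_end_offset
                   WHEN -1 THEN DATALENGTH(st.text)
                   ELSE qs.statement_end_offset
               END - qs.statement_start_offset) / 2 + 1) AS statement_text,
    qs.last_grant_kb,
    qs.last_used_grant_kb
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
WHERE st.text LIKE N'%FROM T%ORDER BY number%'
  AND st.text NOT LIKE N'%dm_exec_query_stats%';  -- exclude this query itself
```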