Alright, so this all started with my interest in hash codes. After reading a Jon Skeet post, I asked this question. That got me really interested in pointer arithmetic, something I have almost no experience with. So after reading through this page, which (along with my other fantastic peers here on SO) gave me a rudimentary understanding, I began experimenting!

Now I'm doing some more experimenting, and I believe the code below accurately duplicates the hash code loop from the string implementation (I reserve the right to be wrong about that):

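```csharp
Console.WriteLine("Iterating STRING (2) as INT ({0})", sizeof(int));
Console.WriteLine();

var val = "Hello World!";

unsafe
{
    // Pin the string so its UTF-16 characters can be read through a pointer
    fixed (char* src = val)
    {
        // Reinterpret the char buffer as 32-bit ints
        var ptr = (int*)src;
        var len = val.Length;

        // Each pass reads two ints, then advances ptr by 2 ints (8 bytes)
        while (len > 2)
        {
            Console.WriteLine((char)*ptr);
            Console.WriteLine((char)ptr[1]);
            ptr += 2;
            len -= sizeof(int);
        }

        // Read one more int if any characters are left over
        if (len > 0)
        {
            Console.WriteLine((char)*ptr);
        }
    }
}
```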

But the results are, kind of, a bit perplexing to me. Here they are:

```
Iterating STRING (2) as INT (4)

H
l
o
W
r
d
```

I originally thought the value at `ptr[1]` would be the second letter, the one read (or squished together) with the first. Clearly it isn't. Is that because `ptr[1]` is technically at byte 4 on the first iteration and at byte 12 on the second?

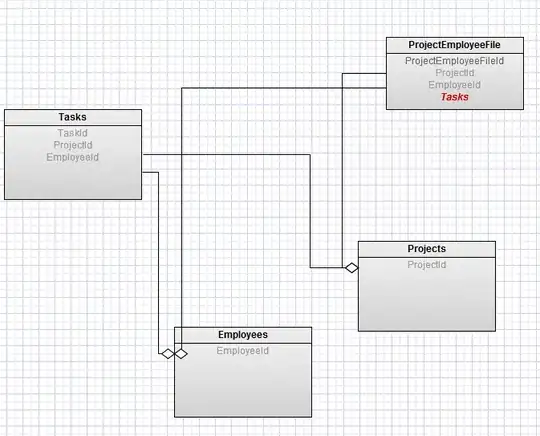

Sorry about the dodgy arrows; I think my mouse batteries are dying.
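One way to test the byte-offset guess is to view the same string as a sequence of ints and print, for each int, its byte offset along with its low and high 16 bits. The sketch below is only illustrative: it assumes .NET Core 2.1 or later (for `MemoryMarshal.Cast` and `string.AsSpan`) and a little-endian machine, and `IntView` and `Describe` are hypothetical names. It uses safe span code instead of the `fixed` pointer above, so no `/unsafe` switch is needed.

```csharp
using System;
using System.Runtime.InteropServices;

static class IntView
{
    // Hypothetical helper: reinterpret a string's UTF-16 chars as 32-bit ints
    // and describe what each int holds. Assumes a little-endian machine.
    public static string[] Describe(string s)
    {
        // Each int spans two chars (4 bytes); a trailing odd char is dropped.
        ReadOnlySpan<int> ints = MemoryMarshal.Cast<char, int>(s.AsSpan());
        var lines = new string[ints.Length];
        for (int i = 0; i < ints.Length; i++)
        {
            int word = ints[i];
            char low = (char)word;          // casting to char keeps only the low 16 bits
            char high = (char)(word >> 16); // the upper 16 bits hold the following char
            lines[i] = $"int {i} at byte offset {i * sizeof(int)}: low '{low}', high '{high}'";
        }
        return lines;
    }

    static void Main()
    {
        foreach (var line in Describe("Hello World!"))
            Console.WriteLine(line);
    }
}
```

On a little-endian machine this should print one line per int, from `int 0 at byte offset 0: low 'H', high 'e'` through `int 5 at byte offset 20: low 'd', high '!'`. Reading only the low halves of ints 0 and 1, then 2 and 3, then 4 and 5 gives H, l, o, W, r, d.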