I need to process an XML file with the following structure:

```xml
<FolderSizes>
  <Version></Version>
  <DateTime Un=""></DateTime>
  <Summary>
    <TotalSize Bytes=""></TotalSize>
    <TotalAllocated Bytes=""></TotalAllocated>
    <TotalAvgFileSize Bytes=""></TotalAvgFileSize>
    <TotalFolders Un=""></TotalFolders>
    <TotalFiles Un=""></TotalFiles>
  </Summary>
  <DiskSpaceInfo>
    <Drive Type="" Total="" TotalBytes="" Free="" FreeBytes="" Used="" UsedBytes=""><![CDATA[ ]]></Drive>
  </DiskSpaceInfo>
  <Folder ScanState="">
    <FullPath Name=""><![CDATA[ ]]></FullPath>
    <Attribs Int=""></Attribs>
    <Size Bytes=""></Size>
    <Allocated Bytes=""></Allocated>
    <AvgFileSz Bytes=""></AvgFileSz>
    <Folders Un=""></Folders>
    <Files Un=""></Files>
    <Depth Un=""></Depth>
    <Created Un=""></Created>
    <Accessed Un=""></Accessed>
    <LastMod Un=""></LastMod>
    <CreatedCalc Un=""></CreatedCalc>
    <AccessedCalc Un=""></AccessedCalc>
    <LastModCalc Un=""></LastModCalc>
    <Perc><![CDATA[ ]]></Perc>
    <Owner><![CDATA[ ]]></Owner>
    <!-- Special element; see paragraph below -->
    <Folder></Folder>
  </Folder>
</FolderSizes>
```

The `<Folder>` element is special in that it repeats within the `<FolderSizes>` element but can also appear within itself; I reckon up to about 5 levels deep.

The problem is that the file is really big at a whopping 11GB so I'm having difficulty processing it - I have experience with XML documents, but nothing on this scale.

What I would like to do is to import the information into a SQL database because then I will be able to process the information in any way necessary without having to concern myself with this immense, impractical file.

Here are the things I have tried:

- Simply load the file and attempt to process it with a simple C# program using an `XmlDocument` or `XDocument` object
  - Before I even started I knew this would not work, as I'm sure everyone would agree, but I tried it anyway and ran the application on a VM with 30GB of memory (since my notebook only has 4GB RAM). The application ended up using 24GB of memory and taking a very, very long time, so I just cancelled it.
- Attempt to process the file using an `XmlReader` object
  - This approach worked better in that it didn't use as much memory, but I still had a few problems:
    - It was taking really long because I was reading the file one line at a time.
    - Processing the file one line at a time makes it difficult to really work with the data contained in the XML, because now you have to detect the start of a tag, then the end of that tag (hopefully), then create a document from that information, read the info, and attempt to determine which parent tag it belongs to, because we have multiple levels... That sounds prone to problems and errors.
    - Did I mention it takes really long reading the file one line at a time? And that is still without actually processing each line, literally just reading it.


- Import the information using SQL Server
  - I created a stored procedure using XQuery that calls itself recursively to process the `<Folder>` elements. This went quite well (I think better than the other two approaches) until one of the `<Folder>` elements ended up being rather big, producing this error: `An XML operation resulted an XML data type exceeding 2GB in size. Operation aborted.` I read up about it and I don't think it's an adjustable limit.

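For what it's worth, the parent-tracking problem described under the `XmlReader` approach can be handled without rebuilding documents: `XmlReader` moves node by node (not line by line), and keeping a stack of the `<Folder>` elements that are currently open means the top of the stack is always the parent of whatever is being read. This is a minimal sketch under assumptions: `FolderRow`, `FolderStreamer` and the chosen columns (the `FullPath` text and the `Bytes` attribute of `<Size>`) are hypothetical names picked for illustration. Rows are emitted when a folder's end tag is reached, so children come out before their parents, although ids are still assigned in document order.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.IO;
using System.Xml;

// Hypothetical flattened row: one per <Folder> element, linked to its parent by ParentId.
public sealed class FolderRow
{
    public int Id;
    public int? ParentId;        // null for top-level folders
    public int Depth;            // 0 for top-level folders
    public string FullPath = "";
    public long SizeBytes;       // taken from <Size Bytes="...">
}

public static class FolderStreamer
{
    // Streams the document, so memory use does not grow with file size.
    public static IEnumerable<FolderRow> ReadFolders(TextReader input)
    {
        var settings = new XmlReaderSettings { IgnoreWhitespace = true, IgnoreComments = true };
        using var reader = XmlReader.Create(input, settings);
        var open = new Stack<FolderRow>();   // folders whose end tag has not been seen yet
        string? field = null;                // leaf element whose text we are currently inside
        int nextId = 1;

        while (reader.Read())
        {
            switch (reader.NodeType)
            {
                case XmlNodeType.Element when reader.Name == "Folder":
                    var row = new FolderRow
                    {
                        Id = nextId++,
                        ParentId = open.Count > 0 ? open.Peek().Id : (int?)null,
                        Depth = open.Count,
                    };
                    if (reader.IsEmptyElement)
                        yield return row;      // <Folder/> has no children and no end tag
                    else
                        open.Push(row);
                    break;

                case XmlNodeType.EndElement when reader.Name == "Folder":
                    yield return open.Pop();   // all of this folder's children have been read
                    break;

                case XmlNodeType.Element when open.Count > 0:
                    if (reader.Name == "Size" && long.TryParse(reader.GetAttribute("Bytes"), out long bytes))
                        open.Peek().SizeBytes = bytes;
                    field = reader.IsEmptyElement ? null : reader.Name;
                    break;

                case XmlNodeType.Text:
                case XmlNodeType.CDATA:
                    if (field == "FullPath" && open.Count > 0)
                        open.Peek().FullPath += reader.Value.Trim();
                    break;

                case XmlNodeType.EndElement:
                    field = null;              // left a leaf element such as </FullPath>
                    break;
            }
        }
    }
}
```

Against the real file, something like `using var file = File.OpenText(path);` followed by `foreach (var row in FolderStreamer.ReadFolders(file))` would produce one row at a time, which could then be collected into batches for a bulk insert (for example with `SqlBulkCopy`) instead of handing SQL Server one huge XML value.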

Here are more things I think I should try:

- Re-write my C# application to use unmanaged code
  - I don't have much experience with unmanaged code, so I'm not sure how well it will work or how to make it as unmanaged as possible.
  - I once wrote a little application that works with my webcam, receiving the image, inverting the colours, and painting it to a panel. Using normal managed code didn't work: the result was about 2 frames per second. Re-writing the colour inversion method to use unmanaged code solved the problem. That's why I thought that unmanaged code might be a solution.
- Rather go for C++ instead of C#
  - Not sure if this is really a solution. Would it necessarily be better than C#? Better than unmanaged C#?
  - The problem here is that I haven't actually worked with C++ before, so I'll need to get to know a few things about C++ before I can really start working with it, and even then probably not very efficiently.

I thought I'd ask for some advice before I go any further, possibly wasting my time.

Thanks in advance for your time and assistance.

EDIT

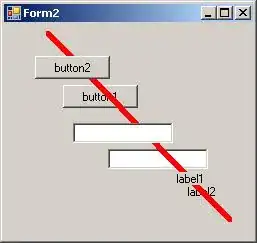

So before I start processing the file I run through it and check the size in a attempt to provide the user with feedback as to how long the processing might take; I made a screenshot of the calculation:

That's about 1,500 lines per second. If the average line length is about 50 characters, that's 50 bytes per line, or 75 kilobytes per second, so an 11GB file should take about 40 hours, if my maths is correct. But this is only stepping through each line; it's not actually processing the line or doing anything with it, so once that starts, the processing rate drops significantly.
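The estimate above can be double-checked with a few lines of arithmetic. This is only a sketch of the same calculation: the 50 bytes per line is the assumption stated in the text rather than a measurement, and `ScanEstimate.Hours` is a hypothetical helper name.

```csharp
using System;

public static class ScanEstimate
{
    // Hours needed to step through a file at an observed line rate,
    // given an assumed average line length in bytes.
    public static double Hours(long fileBytes, double linesPerSecond, double bytesPerLine)
    {
        double bytesPerSecond = linesPerSecond * bytesPerLine;   // 1500 * 50 = 75,000 B/s
        double seconds = fileBytes / bytesPerSecond;
        return seconds / 3600.0;
    }
}
```

`ScanEstimate.Hours(11_000_000_000, 1500, 50)` comes to roughly 40.7 hours, and about 43.7 hours if 11GB means 11 × 2^30 bytes, so "about 40 hours" holds either way.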

This is the method that runs during the size calculation:

```csharp
// Members of the form class; the file needs: using System.IO; using System.Xml;
private int _totalLines = 0;

// Set to true when the cancel button is clicked. It is written on the UI thread
// and read on the worker thread, so it is marked volatile.
private volatile bool _cancel = false;

private void CalculateFileSize()
{
    // using releases the file handle even when we return early on cancel
    using (var xmlStream = new StreamReader(_filePath))
    using (var xmlReader = new XmlTextReader(xmlStream))
    {
        while (xmlReader.Read())
        {
            if (_cancel)
                return;

            if (xmlReader.LineNumber > _totalLines)
                _totalLines = xmlReader.LineNumber;

            // Both labels are updated on every node the reader visits
            InterThreadHelper.ChangeText(
                lblLinesRemaining,
                string.Format("{0} lines", _totalLines));

            string elapsed = string.Format(
                "{0}:{1}:{2}:{3}",
                timer.Elapsed.Days.ToString().PadLeft(2, '0'),
                timer.Elapsed.Hours.ToString().PadLeft(2, '0'),
                timer.Elapsed.Minutes.ToString().PadLeft(2, '0'),
                timer.Elapsed.Seconds.ToString().PadLeft(2, '0'));

            InterThreadHelper.ChangeText(lblElapsed, elapsed);

            if (_cancel)
                return;
        }
    }
}
```
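One plausible contributor to the roughly 1,500 lines per second is that `CalculateFileSize` calls `InterThreadHelper.ChangeText` twice for every node the reader visits, and marshalling UI updates across threads is usually far more expensive than `Read()` itself; that is an assumption, not something measured here. This sketch (hypothetical names `ProgressScan` and `CountNodes`) reports progress as the share of bytes consumed from the underlying stream, so the total is known from the file length without a separate line-counting pass, and it throttles the callback to at most once per interval.

```csharp
using System;
using System.Diagnostics;
using System.IO;
using System.Xml;

public static class ProgressScan
{
    // Reads every node once and returns the node count. Progress is the fraction of
    // the stream consumed so far; XmlReader reads ahead in buffers, so Position runs
    // slightly ahead of the current node, which is fine for a progress bar.
    public static long CountNodes(Stream stream, Action<double> report, TimeSpan interval, Func<bool> cancelled)
    {
        long nodes = 0;
        var sinceReport = Stopwatch.StartNew();
        // CloseInput defaults to false, so the caller still owns (and disposes) the stream.
        using var reader = XmlReader.Create(stream, new XmlReaderSettings { IgnoreWhitespace = true });

        while (reader.Read())
        {
            if (cancelled())
                return nodes;              // stop early without reporting completion

            nodes++;
            if (sinceReport.Elapsed >= interval)
            {
                report(stream.Length > 0 ? (double)stream.Position / stream.Length : 1.0);
                sinceReport.Restart();
            }
        }

        report(1.0);
        return nodes;
    }
}
```

With a `FileStream` over the real file and an interval such as `TimeSpan.FromMilliseconds(250)`, the `report` callback would be the natural place to call `InterThreadHelper.ChangeText`, so the labels are refreshed a few times per second rather than once per node.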

Still running, 27 minutes in :(