I am pretty new to computer vision (CV), so please forgive any naive questions...

What I want to do:

I want to recognize an RC plane in live video (for now it's only a recorded video).

What I have done so far:

- Compute the difference between consecutive frames

- Convert the result to greyscale

- GaussianBlur

- Threshold

- findContours

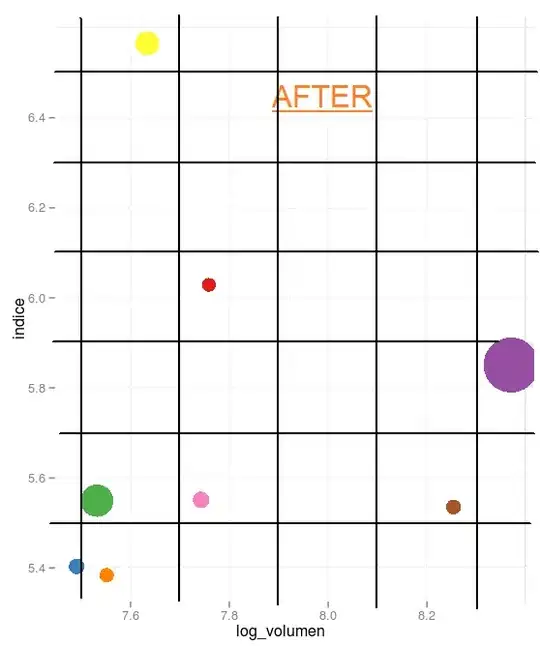

Here are some example frames:

However, some frames also contain noise, so more than one object is detected in them.

I thought I could do something like this:

Run an object recognition algorithm on every contour that has been found, computing the feature vector only inside each contour's bounding rectangle.

Is it possible to compute SURF/SIFT/... only for a specific patch (smaller part) of the image?

Since the algorithm has to process real-time video, I think this will only be feasible if I don't process the whole image on every frame. Alternatively, I could decide per frame: for example, if there are more than 10 bounding rectangles, I check the whole image instead of each rectangle.
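The "more than 10 rectangles" fallback is straightforward to express in plain Python. In this sketch, the function name `regions_to_process` and the padding are hypothetical, and the limit of 10 comes from the idea above. Whether one full-frame pass is actually cheaper than many small ones would need to be measured on the real video.

```python
def regions_to_process(rects, frame_size, max_rects=10, pad=10):
    """Decide which image regions to run the feature detector on.

    Hypothetical helper. rects: list of (x, y, w, h) boxes from the contour step;
    frame_size: (width, height). Returns (x, y, w, h) regions clamped to the frame.
    """
    width, height = frame_size
    # Many candidate boxes (e.g. a noisy frame): fall back to one full-frame pass.
    if len(rects) > max_rects:
        return [(0, 0, width, height)]
    regions = []
    for x, y, w, h in rects:
        # Pad each box for some context, then clamp it to the frame.
        x0, y0 = max(x - pad, 0), max(y - pad, 0)
        x1, y1 = min(x + w + pad, width), min(y + h + pad, height)
        regions.append((x0, y0, x1 - x0, y1 - y0))
    return regions
```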

Then, in the next frame, I will try to match the feature vectors with those from the previous frame. That way I can track my objects. Once an object crosses the red line in the middle of the picture, it triggers another event, but that part is not important here.

I need to make sure that not every object that crosses or is behind that red line triggers the event. An object must appear in at least 2 or 3 consecutive frames, and only if it then crosses the line should the event be triggered.
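The "at least 2 or 3 consecutive frames, then crossing" rule is mostly per-object bookkeeping. This plain-Python sketch assumes two things: the red line is vertical at `x = line_x`, and something upstream (such as the frame-to-frame matching) already identifies which object each centroid belongs to. `Track`, `update_track`, and `object_missing` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track:
    """Hypothetical per-object state for one object matched across frames."""
    seen_frames: int = 0            # consecutive frames in which the object was found
    last_x: Optional[float] = None  # centroid x in the previous frame
    fired: bool = False             # whether the event was already triggered


def object_missing(track):
    """Call when the object is not found in a frame: the consecutive count restarts."""
    track.seen_frames = 0
    track.last_x = None


def update_track(track, centroid_x, line_x, min_frames=3):
    """Record this frame's centroid x; return True only when the event should fire.

    Assumes a vertical line at x = line_x.
    """
    track.seen_frames += 1
    # Crossed if the centroid moved from one side of the line onto or past it.
    crossed = track.last_x is not None and (
        track.last_x < line_x <= centroid_x or track.last_x > line_x >= centroid_x
    )
    track.last_x = centroid_x
    # Fire only for objects seen in enough consecutive frames, and only once.
    if crossed and track.seen_frames >= min_frames and not track.fired:
        track.fired = True
        return True
    return False
```

With `min_frames=3`, the object must be found in the frame where it crosses and in at least the two frames before it.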

There are so many feature detection and description algorithms used for object recognition (SIFT, SURF, ORB, ...) that I am a bit overwhelmed.

Can anyone give me a hint as to which one I should choose, or whether my approach makes sense at all?