Concept

Suppose we had an array of bool representing which numbers so far haven't been found (by way of summing).

For each number n we encounter in the ordered (increasing values) subset of S, we do the following:

- For each existing

True value at position i in numbers, we set numbers[i + n] to True

- We set

numbers[n] to True

With this sort of a sieve, we would mark all the found numbers as True, and iterating through the array when the algorithm finishes would find us the minimum unobtainable sum.

Refinement

Obviously, we can't have a solution like this because the array would have to be infinite in order to work for all sets of numbers.

The concept could be improved by making a few observations. With an input of 1, 1, 3, the array becomes (in sequence):

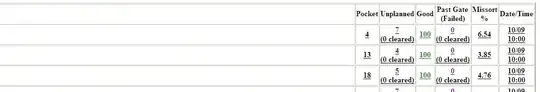

(numbers represent

(numbers represent true values)

An important observation can be made:

- (3) For each next number, if the previous numbers had already been found it will be added to all those numbers. This implies that if there were no gaps before a number, there will be no gaps after that number has been processed.

For the next input of 7 we can assert that:

- (4) Since the input set is ordered, there will be no number less than

7

- (5) If there is no number less than

7, then 6 cannot be obtained

We can come to a conclusion that:

- (6) the first gap represents the minimum unobtainable number.

Algorithm

Because of (3) and (6), we don't actually need the numbers array, we only need a single value, max to represent the maximum number found so far.

This way, if the next number n is greater than max + 1, then a gap would have been made, and max + 1 is the minimum unobtainable number.

Otherwise, max becomes max + n. If we've run through the entire S, the result is max + 1.

Actual code (C#, easily converted to C):

static int Calculate(int[] S)

{

int max = 0;

for (int i = 0; i < S.Length; i++)

{

if (S[i] <= max + 1)

max = max + S[i];

else

return max + 1;

}

return max + 1;

}

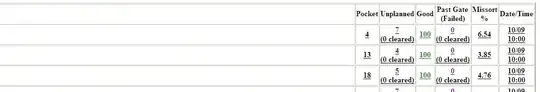

Should run pretty fast, since it's obviously linear time (O(n)). Since the input to the function should be sorted, with quicksort this would become O(nlogn). I've managed to get results M = N = 100000 on 8 cores in just under 5 minutes.

With numbers upper limit of 10^9, a radix sort could be used to approximate O(n) time for the sorting, however this would still be way over 2 seconds because of the sheer amount of sorts required.

But, we can use statistical probability of 1 being randomed to eliminate subsets before sorting. On the start, check if 1 exists in S, if not then every query's result is 1 because it cannot be obtained.

Statistically, if we random from 10^9 numbers 10^5 times, we have 99.9% chance of not getting a single 1.

Before each sort, check if that subset contains 1, if not then its result is one.

With this modification, the code runs in 2 miliseconds on my machine. Here's that code on http://pastebin.com/rF6VddTx

(numbers represent

(numbers represent