We have 10,000+ images in an Amazon S3 bucket. How can I set the Expires header on all of them in one go?

6 Answers

Just a heads up that I found a great solution using the AWS CLI:

aws s3 cp s3://bucketname/optional_path s3://bucketname/optional_path --recursive --acl public-read --metadata-directive REPLACE --cache-control max-age=2592000

This sets Cache-Control to 30 days (2,592,000 seconds). Note that `--metadata-directive` gives you the option to copy or replace the previous header data. Since AWS will automatically include the right Content-Type for each media type, and I had some bad headers, I chose to overwrite everything.
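The `max-age` value is a lifetime in seconds, so 2592000 is simply 30 × 24 × 60 × 60. A small Python sketch of that arithmetic, in case you want a different lifetime (the helper name `max_age_for_days` is only for illustration):

```python
from datetime import timedelta

def max_age_for_days(days):
    """Build a Cache-Control value whose max-age covers `days` days."""
    seconds = int(timedelta(days=days).total_seconds())
    return f"max-age={seconds}"

print(max_age_for_days(30))  # max-age=2592000
```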

- Strange, I haven't configured the credentials, but it didn't throw any error; it simply printed out all the image names. @MauvisLedford – ericn May 19 '16 at 06:20

- @Rollo after `cp` you need to repeat the path twice, as they are the source and destination. – ericn May 19 '16 at 06:21

- @ericn I know it's late, but could it be that you have public access on your images? – Maarten Wolzak Dec 06 '18 at 15:27

- If we have `Cache-Control` set, do we need Expires? Services like Pingdom and GTmetrix seem to balk at a missing Expires even if there's a Cache-Control. – Khom Nazid Jul 14 '19 at 21:17

- NOTE: this does not MERGE the existing metadata. Unfortunately, there is no --metadata-directive MERGE option :( – xaunlopez May 17 '21 at 06:17

You can make bulk changes to bucket files with third-party apps that use the S3 API. These apps cannot set the headers in a single request, but they automate the 10,000+ requests that are required.

The one I currently use is CloudBerry Explorer, a freeware utility for working with S3 buckets. In this tool I can select multiple files and specify HTTP headers that will be applied to all of them.

An alternative would be to develop your own script or tool using the S3 API libraries.
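As a rough idea of what such a script could look like, here is a minimal Python sketch. It assumes a boto3 S3 client is passed in, and the function names `copy_args` and `set_cache_control` are made up for this example. With the REPLACE directive the copy carries only the headers you send, so the sketch guesses Content-Type from the file extension.

```python
import mimetypes

def copy_args(bucket, key, max_age):
    """Arguments for copying an object onto itself with new headers."""
    content_type, _ = mimetypes.guess_type(key)
    return {
        "Bucket": bucket,
        "Key": key,
        "CopySource": {"Bucket": bucket, "Key": key},
        "MetadataDirective": "REPLACE",  # send new headers instead of copying old ones
        "CacheControl": f"max-age={max_age}",
        "ContentType": content_type or "binary/octet-stream",
        # Assumption: add "ACL": "public-read" here if the objects must stay public.
    }

def set_cache_control(client, bucket, prefix="", max_age=2592000):
    """Issue one copy request per key under `prefix`; returns the number updated."""
    paginator = client.get_paginator("list_objects_v2")
    updated = 0
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            client.copy_object(**copy_args(bucket, obj["Key"], max_age))
            updated += 1
    return updated

# Hypothetical usage (needs boto3 and configured credentials):
#   import boto3
#   set_cache_control(boto3.client("s3"), "bucketname", "optional_path")
```

It still makes one request per object, but the listing is paginated, so it should cope with more than 1,000 keys.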

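An alternative solution is to add the `response-expires` parameter to the request URL. It sets the Expires header of that response only; the stored object is not changed. Note that S3 honors the `response-*` parameters only on signed requests (an Authorization header or a presigned URL), not on anonymous ones.

See the Request Parameters section of http://docs.aws.amazon.com/AmazonS3/latest/API/RESTObjectGET.html for more detail.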
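One way to try this is with a presigned URL. The following is a hedged Python sketch that assumes a boto3 S3 client is passed in; `presigned_url_with_expires` and its parameters are names invented for this example.

```python
from datetime import datetime, timedelta, timezone

def presigned_url_with_expires(client, bucket, key, days=30, link_ttl=3600, now=None):
    """Presign a GET whose response carries an Expires header `days` ahead."""
    now = now or datetime.now(timezone.utc)
    return client.generate_presigned_url(
        "get_object",
        Params={
            "Bucket": bucket,
            "Key": key,
            # Sent as the response-expires query parameter.
            "ResponseExpires": now + timedelta(days=days),
        },
        ExpiresIn=link_ttl,  # lifetime of the signed link itself, in seconds
    )

# Hypothetical usage (needs boto3 and configured credentials):
#   import boto3
#   print(presigned_url_with_expires(boto3.client("s3"), "bucketname", "img/cat.png"))
```

The signed link has its own, separate lifetime (`link_ttl` here), so this suits per-request overrides better than a permanent change to 10,000 objects.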

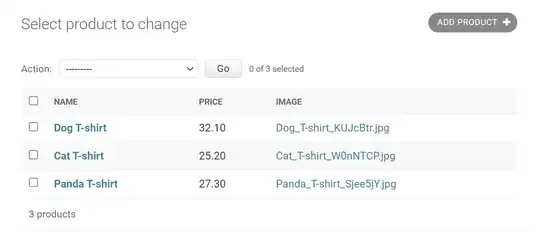

- Select the folder

- From the top menu, choose More

- Select Change Metadata

- Add Key as Expires

- Add Value as 2592000 (for example)

- The integer value is not allowed according to the RFC. https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Expires – Yuki Matsukura Aug 07 '18 at 10:47

- @Arun, was this in S3? I suppose the UI has changed because there's no "More" in the top menu. – Khom Nazid Mar 08 '19 at 06:37

- @YukiMatsukura how do we add a specific date in S3 that works like Apache's `access plus 1 year`? If we set the year etc. manually, we'll have to keep editing the Expires header by hand! – Khom Nazid Mar 08 '19 at 13:30
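On the rolling-date question: the Expires header takes an HTTP-date rather than a number of seconds, so one option is to compute the date in a script at the moment the metadata is set. A minimal Python sketch, where the helper name `expires_header` is only for illustration:

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime

def expires_header(days, now=None):
    """Return an HTTP-date string `days` after `now` (default: current UTC time)."""
    now = now or datetime.now(timezone.utc)
    # usegmt=True requires a UTC-aware datetime and ends the string with "GMT".
    return format_datetime(now + timedelta(days=days), usegmt=True)

print(expires_header(365))
```

An absolute date still goes stale and has to be refreshed eventually. `Cache-Control: max-age` is relative to when the response is fetched, so it is probably the closer equivalent of Apache's `access plus 1 year`.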

Cyberduck will edit headers as well.

- Select all the items

- Press Command+I (Get Info)

- The Info window offers a GUI for editing various headers, with presets built in.

Just processed 6000 images in one bucket without a hitch.

I'm pretty sure it's not possible to do this in a single request. Instead, you'll have to make 10,000 PUT requests, one for each key, with the new headers/metadata you want along with the x-amz-copy-source header pointing to the same key (so that you don't need to re-upload the object). The link I provided goes into more detail on the PUT-copy operation, but it's pretty much the way to change object metadata on S3.
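Because a PUT-copy with replaced metadata drops whatever you do not send again, a script can read the existing headers first and pass them back alongside the new one. A hedged Python sketch for a single key, assuming a boto3 S3 client is passed in; `merged_copy_args` and `update_object` are hypothetical names:

```python
# Headers returned by a HEAD request that should survive the copy.
PRESERVED = ("ContentType", "ContentEncoding", "ContentDisposition", "ContentLanguage")

def merged_copy_args(bucket, key, head, cache_control):
    """Combine an object's existing headers with a new Cache-Control value."""
    args = {
        "Bucket": bucket,
        "Key": key,
        # boto3 sends this as the x-amz-copy-source header.
        "CopySource": {"Bucket": bucket, "Key": key},
        "MetadataDirective": "REPLACE",
        "Metadata": dict(head.get("Metadata", {})),  # user-defined x-amz-meta-* values
        "CacheControl": cache_control,
    }
    for field in PRESERVED:
        if field in head:
            args[field] = head[field]
    return args

def update_object(client, bucket, key, cache_control="max-age=2592000"):
    """HEAD the object, then copy it onto itself with merged headers."""
    head = client.head_object(Bucket=bucket, Key=key)
    client.copy_object(**merged_copy_args(bucket, key, head, cache_control))
```

This costs two requests per key (one HEAD, one copy), in exchange for keeping existing metadata intact.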
