Hey, I was trying out a test method on a WCF SOAP web service:

public Double TestDouble(Double x) { return x; }

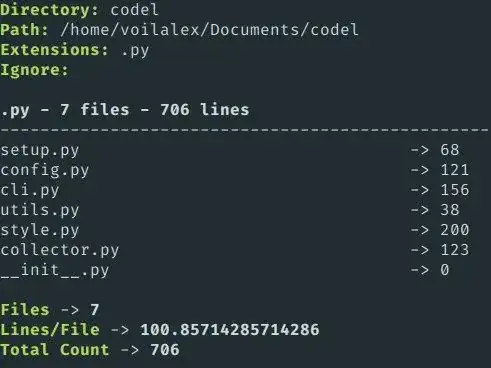

The test tool only let's me put 15 significant digits:

I can use SoapUI to send more digits; here's a request with 17 significant figures:

<soapenv:Header/>

<soapenv:Body>

<td:TestDouble>

<!--Optional:-->

<td:x>13.075815372878123</td:x>

</td:TestDouble>

</soapenv:Body>

In general, clients send however many significant figures they like, so this was just a simple test to look at the discrepant numbers coming back from the service.

The result also has 17 significant figures, but the last digit is one higher, so the output doesn't match the input (shouldn't it?):

<s:Body>

<TestDoubleResponse xmlns="http://ocdusrow3rndd1">

<TestDoubleResult>13.075815372878124</TestDoubleResult>

</TestDoubleResponse>

</s:Body>

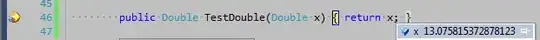

The input to the web service, when run in debug mode seems to have received the correct original value:

So how is it being changed before it is given back?
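One check that might narrow this down: a 64-bit double only carries roughly 15 to 17 significant decimal digits, so two different 17-digit strings can denote the same stored value. Here is a minimal sketch, assuming a plain console app, that compares the bit patterns of the two values from the request and response and prints the value back with the "R" and "G17" format strings. `DoubleCheck` and `SameDouble` are hypothetical names made up for this test, not part of WCF, and I am only assuming the serializer does something similar to one of these formats.

```csharp
using System;
using System.Globalization;

public static class DoubleCheck
{
    // Hypothetical helper: true when both decimal strings parse to the
    // same 64-bit double, i.e. the stored value is bit-for-bit identical.
    public static bool SameDouble(string a, string b)
    {
        double x = double.Parse(a, CultureInfo.InvariantCulture);
        double y = double.Parse(b, CultureInfo.InvariantCulture);
        return BitConverter.DoubleToInt64Bits(x) == BitConverter.DoubleToInt64Bits(y);
    }

    public static void Main()
    {
        const string sent = "13.075815372878123";     // value in the SoapUI request
        const string returned = "13.075815372878124"; // value in the service response

        // True here would mean only the text form changed, not the double.
        Console.WriteLine(SameDouble(sent, returned));

        // Show how this runtime writes the parsed value back out.
        double x = double.Parse(sent, CultureInfo.InvariantCulture);
        Console.WriteLine(x.ToString("R", CultureInfo.InvariantCulture));
        Console.WriteLine(x.ToString("G17", CultureInfo.InvariantCulture));
    }
}
```

If the first line prints True, the service is handing back the same double it received, and the difference would come from how the number is converted to text on the way out rather than from the method itself. The printed strings may differ between .NET versions, so it is probably worth running this on the same runtime as the service.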