I am working with Scrapy 0.20 and Python 2.7. PyCharm has a good Python debugger, and I want to use it to test my Scrapy spiders. Does anyone know how to do that?

What I have tried

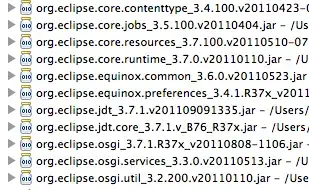

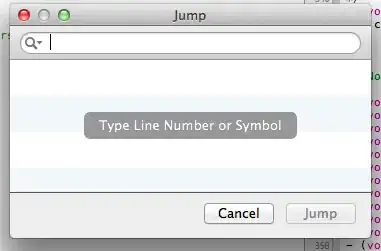

I tried running the spider as a script, so I wrote a script for that. Then I tried to add my Scrapy project to PyCharm as a model like this: File -> Settings -> Project Structure -> Add Content Root.

But I don't know what else I have to do.
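
For reference, a launcher script of the kind mentioned above might look roughly like the sketch below. It is not the exact script from the screenshots. It assumes a placeholder spider name, `myspider`, and a hypothetical helper, `build_crawl_argv`; the only Scrapy call it relies on is `scrapy.cmdline.execute`, which is the entry point the `scrapy` command itself uses.

```python
import sys


def build_crawl_argv(spider_name):
    """Return the argv list equivalent to typing `scrapy crawl <spider_name>`."""
    return ["scrapy", "crawl", spider_name]


def main():
    # Imported inside main() so the helper above works even without Scrapy installed.
    from scrapy.cmdline import execute

    # "myspider" is a placeholder; pass the real spider name as the first argument.
    spider_name = sys.argv[1] if len(sys.argv) > 1 else "myspider"

    # Runs the crawl in this process, so a debugger attached to it can stop in the spider.
    execute(build_crawl_argv(spider_name))


if __name__ == "__main__":
    main()
```

If a file like this sits in the project root next to `scrapy.cfg` and is started with PyCharm's Debug action from that directory, breakpoints placed in the spider code should be reachable, since the crawl runs in the same Python process as the script.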