I have edited my question after previous comments (especially @Zboson) for better readability

I have always followed, and observed in practice, the conventional wisdom that the number of OpenMP threads should roughly match the number of hyper-threads on a machine for optimal performance. However, I am observing odd behaviour on my new laptop with an Intel Core i7 4960HQ (4 cores, 8 threads). (See Intel docs here)

Here is my test code:

    #include <math.h>
    #include <stdlib.h>
    #include <stdio.h>
    #include <omp.h>

    int main() {
        const int n = 256*8192*100;
        double *A, *B;
        posix_memalign((void**)&A, 64, n*sizeof(double));
        posix_memalign((void**)&B, 64, n*sizeof(double));
        for (int i = 0; i < n; ++i) {
            A[i] = 0.1;
            B[i] = 0.0;
        }
        double start = omp_get_wtime();
        #pragma omp parallel for
        for (int i = 0; i < n; ++i) {
            B[i] = exp(A[i]) + sin(B[i]);
        }
        double end = omp_get_wtime();
        double sum = 0.0;
        for (int i = 0; i < n; ++i) {
            sum += B[i];
        }
        printf("%g %g\n", end - start, sum);
        return 0;
    }

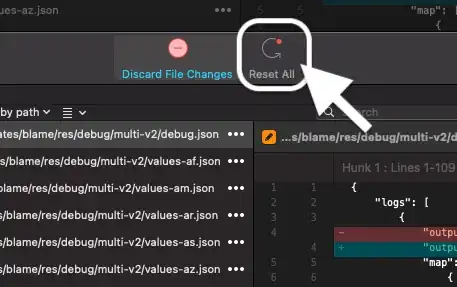

When I compile it using gcc 4.9-4.9-20140209, with the command: gcc -Ofast -march=native -std=c99 -fopenmp -Wa,-q I see the following performance as I change OMP_NUM_THREADS [the points are an average of 5 runs, the error bars (which are hardly visible) are the standard deviations]:

The plot is clearer when shown as the speed up with respect to OMP_NUM_THREADS=1:

The performance increases more or less monotonically with the thread count, even when the number of OpenMP threads greatly exceeds both the core count and the hyper-thread count! In my previous experience, performance usually drops off when too many threads are used, due to threading overhead, especially since this calculation should be CPU bound (or at least memory bound) and is not waiting on I/O.

Even more weirdly, the speed-up is 35 times!

Can anyone explain this?

I also tested this with much smaller arrays 8192*4, and see similar performance scaling.

In case it matters, I am on Mac OS 10.9 and the performance data were obtained by running (under bash):

    for i in {1..128}; do
        for k in {1..5}; do
            export OMP_NUM_THREADS=$i;
            echo -ne $i $k "";
            ./a.out;
        done;
    done > out

EDIT: Out of curiosity I decided to try much larger numbers of threads. My OS limits this to 2000. The odd results (both speed up and low thread overhead) speak for themselves!

EDIT: I tried @Zboson's latest suggestion in their answer, i.e. putting VZEROUPPER before each math function call within the loop, and it did fix the scaling problem! (It also took the single-threaded code from 22 s to 2 s!):