I think stereoCalibrate is the way to go if you are interested in the depth map and in aligning the two images. I also think this alignment is important, even though I don't know exactly what you're trying to do and even though you already have a depth map from the Kinect.

But if I understand correctly, you also want to find the position of the cameras in the world. You can do that by having the same known geometry in both views. This is normally achieved with a chessboard pattern lying on the floor and seen by both cameras, which must stay in a fixed position.

Once you have the 3D points of a known geometry and the corresponding 2D points projected on the image plane, you can find the 3D position of each camera independently, relative to a world coordinate system whose origin is at one corner of the chessboard.
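As a minimal sketch of what the "known geometry" looks like in practice, here is how the 3D chessboard points could be generated in plain Python. The function name `chessboard_object_points` and the board size (9x6 inner corners, 25 mm squares) are my own assumptions for illustration. The order of the points must match the order in which your corner detector (for example cv::findChessboardCorners) returns the 2D corners.

```python
def chessboard_object_points(cols, rows, square_size):
    """Return the 3D coordinates of the inner chessboard corners, row by row.

    The world origin is the first corner, X runs along the columns,
    Y runs along the rows, and Z is 0 because the board lies flat.
    The result is expressed in the same unit as square_size.
    """
    return [
        (c * square_size, r * square_size, 0.0)
        for r in range(rows)
        for c in range(cols)
    ]


# Hypothetical board: 9x6 inner corners, squares of 0.025 m (25 mm).
object_points = chessboard_object_points(cols=9, rows=6, square_size=0.025)
print(len(object_points))  # 54
print(object_points[:3])   # first three corners along the X axis
```

These points, paired with the detected 2D corners from each camera, are what you would hand to the pose estimation step.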

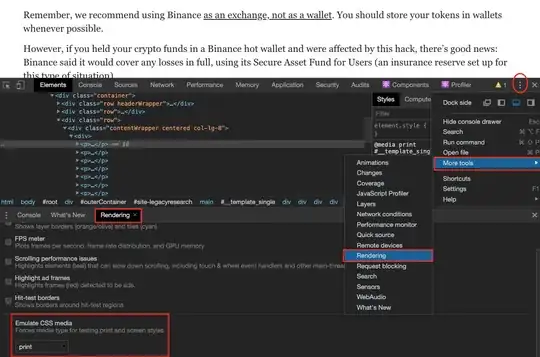

In this way what you're going to achieve is something like this image:

To find the 3D position of each camera relative to the chessboard, you can use cv::solvePnP to compute the extrinsic matrix for each camera independently. There are some issues with the direction of the camera (the ray pointing from the camera to the world origin), and you have to handle them, again independently for each camera, if you want to visualise the cameras (for example in OpenGL). This takes some matrix algebra and angle handling too.
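To make the matrix algebra a bit more concrete: cv::solvePnP returns a rotation vector and a translation vector that map world points into the camera frame (X_cam = R * X_world + t), so the camera centre in world coordinates is C = -R^T * t. Below is a minimal sketch in plain Python, without OpenCV, so the math is visible. The helper names `rodrigues`, `camera_position` and `camera_forward` are mine, and the example pose is made up.

```python
import math


def rodrigues(rvec):
    """Convert an axis-angle rotation vector into a 3x3 matrix (list of rows)."""
    rx, ry, rz = rvec
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def camera_position(rvec, tvec):
    """Camera centre in world coordinates: C = -R^T * t."""
    R = rodrigues(rvec)
    return [-sum(R[r][c] * tvec[r] for r in range(3)) for c in range(3)]


def camera_forward(rvec):
    """Optical axis (camera +Z) expressed in world coordinates: R^T * (0, 0, 1)."""
    return list(rodrigues(rvec)[2])


# Made-up pose: camera rotated 180 degrees about X, board 2 units in front of it.
rvec, tvec = (math.pi, 0.0, 0.0), (0.0, 0.0, 2.0)
print(camera_position(rvec, tvec))  # close to [0, 0, 2]: 2 units above the board
print(camera_forward(rvec))         # close to [0, 0, -1]: looking down at the board
```

Keep in mind that the OpenCV camera frame has Z pointing forward and Y pointing down, while the OpenGL camera looks along -Z with Y up, so an axis flip is needed before you use the pose for rendering.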

For a detailed description of the math, I can refer you to the well-known book Multiple View Geometry in Computer Vision by Hartley and Zisserman.

See also my previous answer on augmented reality and the integration between OpenCV and OpenGL (i.e. how to use the extrinsic matrix, and the T and R matrices that can be decomposed from it and that represent the position and orientation of the camera in the world).

Just out of curiosity: why are you using a normal camera PLUS a Kinect? The Kinect already gives you the depth map that we are trying to obtain with two stereo cameras. I don't understand what additional data a normal camera can give you beyond what a calibrated Kinect, with good use of the extrinsic matrix, already provides.

PS: the image is taken from this nice introductory OpenCV blog, but I think that post is not very relevant to your question, because it is about the intrinsic matrix and the distortion parameters, which it seems you already have. Just to clarify.

EDIT: regarding the units of the extrinsic data, the translation is normally measured in the same unit as the 3D points of the chessboard. So if you identify the corners of one chessboard square in 3D as P(0,0), P(1,0), P(1,1), P(0,1) and use them with solvePnP, the translation of the camera will be measured in units of "chessboard square size". If a square is 1 meter long, the unit of measure will be meters. For the rotations, the units are normally angles in radians, but it depends on how you extract them with cv::Rodrigues and how you get the three yaw-pitch-roll angles from the rotation matrix.
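Since the angles depend on the convention you pick, here is a minimal sketch of one possible choice, assuming the rotation matrix is composed as R = Rz(yaw) * Ry(pitch) * Rx(roll). That convention and the function name `yaw_pitch_roll` are my assumptions; other axis orders give different numbers for the same matrix. The input is a 3x3 rotation matrix as a list of rows, such as the one cv::Rodrigues produces from the rotation vector.

```python
import math


def yaw_pitch_roll(R):
    """Extract (yaw, pitch, roll) in radians, assuming R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
    cos_pitch = math.hypot(R[0][0], R[1][0])
    pitch = math.atan2(-R[2][0], cos_pitch)
    if cos_pitch > 1e-6:
        yaw = math.atan2(R[1][0], R[0][0])
        roll = math.atan2(R[2][1], R[2][2])
    else:
        # Gimbal lock (pitch near +/-90 degrees): yaw and roll are coupled,
        # so yaw is fixed to 0 and the whole remaining rotation goes into roll.
        yaw = 0.0
        roll = math.atan2(-R[1][2], R[1][1])
    return yaw, pitch, roll


# A pure 90 degree rotation about the Z axis.
R = [[0.0, -1.0, 0.0],
     [1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0]]
yaw, pitch, roll = yaw_pitch_roll(R)
print(math.degrees(yaw), math.degrees(pitch), math.degrees(roll))
```

The values come out in radians; `math.degrees` is only used here for printing.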