I've managed to implement a logarithmic depth buffer in OpenGL, mainly courtesy of articles from Outerra (you can read them here, here, and here). However, I'm having some issues, and I'm not sure whether they are inherent to using a logarithmic depth buffer or whether there's a workaround I haven't thought of.

Just to start off, this is how I calculate logarithmic depth within the vertex shader:

    gl_Position = MVP * vec4(inPosition, 1.0);
    gl_Position.z = log2(max(ZNEAR, 1.0 + gl_Position.w)) * FCOEF - 1.0;
    flogz = 1.0 + gl_Position.w;

And this is how I fix depth values in the fragment shader:

    gl_FragDepth = log2(flogz) * HALF_FCOEF;

Here ZNEAR = 0.0001, ZFAR = 1000000.0, FCOEF = 2.0 / log2(ZFAR + 1.0), and HALF_FCOEF = 0.5 * FCOEF. The constant C from the Outerra formula is 1.0 in my case, which simplifies my code and saves a few calculations.

For starters, I'm extremely pleased with the level of precision I get. With normal depth buffering (znear = 0.1, zfar = 1000.0), I got quite a bit of z-fighting towards the edge of the view distance. Now, with a MUCH larger range between znear and zfar, I've put a second ground plane 0.01 units below the first, and I cannot find any z-fighting, no matter how far I zoom the camera out (I get a little z-fighting when the second plane is only 0.0001 units (0.1 mm) below the first, but meh).

I do have some issues/concerns, however.

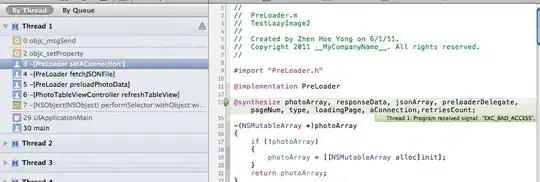

1) I get more near-plane clipping than I did with my normal depth buffer, and it looks ugly. It happens in cases where, logically, it really shouldn't. Here are a couple of screenshots of what I mean:

Clipping the ground.

Clipping a mesh.

Both of these cases are things that I did not experience with my normal depth buffer, and I'd rather not see (especially the former). EDIT: Problem 1 is officially solved by using glEnable(GL_DEPTH_CLAMP).

2) In order to get this to work, I need to write to gl_FragDepth. I tried not doing so, but the results were unacceptable. Writing to gl_FragDepth means that my graphics card can't do early z optimizations. This will inevitably drive me up the wall and so I want to fix it as soon as I can.

3) I need to be able to retrieve the value stored in the depth buffer (I already have a framebuffer and texture for this), and then convert it to a linear view-space co-ordinate. I don't really know where to start with this. The way I did it before involved the inverse projection matrix, but I can't really do that here. Any advice?