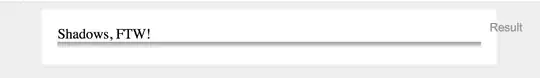

I have an image as shown in the attached figure.

Sometimes the intensity of the black digits is not very different from that of the neighbouring pixels, and I have trouble extracting these digits. For example, a simple threshold does not work well, because reflections or poor focus during image capture bring the intensity of the black digits close to that of the gray background. I would like to increase the contrast between the black digits and the gray background so that I can extract the digits without much noise.

My current approach is to increase that difference with OpenCV's `addWeighted` function, as in the code below, where `color` is the original RGB image. Does this processing make sense, or is there a more efficient approach?
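For reference, OpenCV documents `addWeighted` as computing `dst = saturate(src1*alpha + src2*beta + gamma)` per element. With the arguments used in the code below (alpha = 1.5, beta = -0.5, gamma = 0), and writing $I$ for `color` and $G_\sigma * I$ for `blur_img` (9×9 Gaussian, $\sigma = 2$), the operation rearranges into the usual unsharp-mask form:

$$
\texttt{sharpened} = \operatorname{sat}\bigl(1.5\,I - 0.5\,(G_\sigma * I)\bigr) = \operatorname{sat}\bigl(I + 0.5\,(I - G_\sigma * I)\bigr)
$$

Where the image is nearly uniform, $I - G_\sigma * I \approx 0$, so the gray level there stays essentially the same; the step mainly amplifies intensity differences near edges such as digit strokes.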

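```java
import org.opencv.core.Core;
import org.opencv.core.Mat;
import org.opencv.core.Size;
import org.opencv.imgproc.Imgproc;

// color: the original 8-bit, 3-channel image
// Low-pass copy of the image: 9x9 Gaussian kernel, sigma = 2
Mat blur_img = new Mat();
Imgproc.GaussianBlur(color, blur_img, new Size(9, 9), 2);

// sharpened = 1.5 * color - 0.5 * blur_img (unsharp masking).
// With the default dtype (-1) the result has the same depth as color
// (8-bit here), with values saturated to [0, 255].
Mat sharpened = new Mat();
Core.addWeighted(color, 1.5, blur_img, -0.5, 0, sharpened);
```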
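To see what the `addWeighted` step does to pixel values, here is a minimal pure-Java sketch on a single hypothetical scanline. It does not use OpenCV: the 3-tap box blur with edge replication (`boxBlur3`) is a simplified stand-in for the 9×9 Gaussian, and `sharpenRow` mirrors `1.5 * src - 0.5 * blur` with round-to-nearest and clamping to [0, 255], which is assumed to match OpenCV's 8-bit saturation closely but not necessarily bit-for-bit.

```java
import java.util.Arrays;

public class UnsharpDemo {
    // Hypothetical 3-tap box blur with edge replication, standing in for
    // the 9x9 Gaussian blur used in the question.
    static double[] boxBlur3(int[] row) {
        int n = row.length;
        double[] out = new double[n];
        for (int i = 0; i < n; i++) {
            int left = row[Math.max(i - 1, 0)];
            int right = row[Math.min(i + 1, n - 1)];
            out[i] = (left + row[i] + right) / 3.0;
        }
        return out;
    }

    // Mirrors addWeighted(src, 1.5, blur, -0.5, 0, dst) on 8-bit data:
    // weighted sum, rounded to nearest, clamped to [0, 255].
    static int[] sharpenRow(int[] row) {
        double[] blur = boxBlur3(row);
        int[] out = new int[row.length];
        for (int i = 0; i < row.length; i++) {
            double v = 1.5 * row[i] - 0.5 * blur[i];
            out[i] = (int) Math.max(0, Math.min(255, Math.rint(v)));
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical scanline crossing from gray background (100) into a dark stroke (60)
        int[] row = {100, 100, 100, 60, 60, 60};
        System.out.println(Arrays.toString(sharpenRow(row)));
        // prints [100, 100, 107, 53, 60, 60]
    }
}
```

The flat runs keep their values (100 and 60); only the two pixels on either side of the edge move apart, so the local gap grows from 40 to 54. In other words, this kind of sharpening strengthens edges but does not by itself change the contrast between large uniform regions.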