I have a Java console app that processes big XML files using DOM. Basically, it creates XML files from data it reads from the DB. As you might guess, it uses a large amount of memory, but to my surprise the cause is not bad code but the Java heap space not shrinking. I tried running my app from Eclipse with these JVM params:

-Xmx700m -XX:MinHeapFreeRatio=10 -XX:MaxHeapFreeRatio=20

I even added

-XX:-UseSerialGC

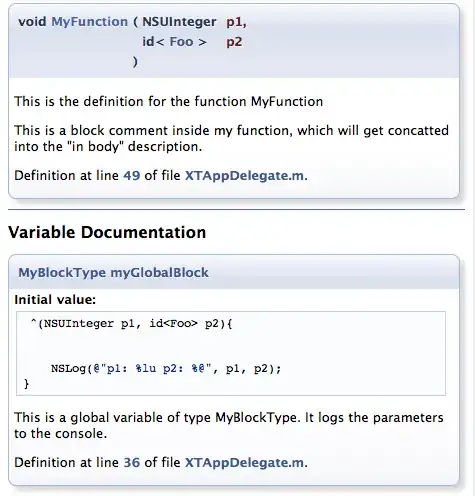

as i found out that parallel GC ignores "MinHeap" and "MaxHeap" options. Even with all those options graph of my app's memory use looks like this:

As you can see, at one point my app uses ~400 MB of heap space and the heap grows to ~650 MB. A few seconds later (when XML generation is done), the used heap drops to 12 MB, but the heap size remains at ~650 MB. It takes 650 MB of my RAM! It's bizarre, don't you think?
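For anyone who wants to reproduce this outside my app, here is a minimal sketch, not my real code. The class name `HeapShrinkProbe`, the 200 MB allocation, and the 2-second pause are all made up for illustration. It only relies on `Runtime.totalMemory()` (the heap the JVM currently holds) and `Runtime.freeMemory()`, and `System.gc()` is just a hint that the JVM may ignore.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical probe: compares used heap with the heap size the JVM is holding.
public class HeapShrinkProbe {
    private static final long MB = 1024L * 1024L;

    // Returns {usedMb, heapSizeMb}, both derived from a single totalMemory() read.
    static long[] snapshot() {
        Runtime rt = Runtime.getRuntime();
        long total = rt.totalMemory();      // heap currently reserved by the JVM
        long used = total - rt.freeMemory(); // part of it occupied by objects
        return new long[] { used / MB, total / MB };
    }

    // Allocates the given number of 1 MB blocks to push the heap up.
    static List<byte[]> allocate(int megabytes) {
        List<byte[]> blocks = new ArrayList<>();
        for (int i = 0; i < megabytes; i++) {
            blocks.add(new byte[(int) MB]);
        }
        return blocks;
    }

    static void report(String label) {
        long[] s = snapshot();
        System.out.println(label + ": used=" + s[0] + " MB, heap size=" + s[1] + " MB");
    }

    public static void main(String[] args) throws InterruptedException {
        report("start");
        List<byte[]> blocks = allocate(200); // illustrative size, stands in for the XML work
        report("after allocating " + blocks.size() + " MB");
        blocks = null;                       // drop the only reference
        System.gc();                         // a request, not a guarantee
        Thread.sleep(2000);                  // give the collector a moment
        report("after releasing");
    }
}
```

Running it with the same JVM params as above should show whether "heap size" follows "used" back down after the last step or stays near its peak.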

**Is there a way to force the JVM to shrink the available heap size to, say, 150% of the currently used heap?** For example, if my app needs 15 MB of RAM, the heap size is ~20 MB; when my app asks for 400 MB of RAM, the heap grows to ~600 MB and DROPS back to ~20 MB as soon as my app finishes the heavy-lifting operation?