Why is it that when I run the C code

```c
float x = 4.2;
int y = 0;
y = x * 100;
printf("%i\n", y);
```

I get 419 back? Shouldn't it be 420? This has me stumped.


To illustrate, look at the intermediate values:

```c
#include <stdio.h>

int main(void)
{
    float x = 4.2;
    int y;

    printf("x = %f\n", x);
    printf("x * 100 = %f\n", x * 100);

    y = x * 100;   /* converting to int truncates toward zero */
    printf("y = %i\n", y);

    return 0;
}
```

```
x = 4.200000            // Original x
x * 100 = 419.999981    // Floating-point multiplication precision
y = 419                 // Assignment to int truncates
```

Per @Lutzi's excellent suggestion, this is more clearly illustrated if we print all the float values with more digits than a float can actually represent:

```c
...
printf("x = %.20f\n", x);
printf("x * 100 = %.20f\n", x * 100);
...
```

Now you can see that the value stored in x isn't exactly 4.2 to begin with:

```
x = 4.19999980926513671875
x * 100 = 419.99998092651367187500
y = 419
```

A floating-point number is stored as the nearest value its binary format can represent, not necessarily the exact decimal value you wrote. Because that stored value can be slightly below the intended one, converting it to an integer (which truncates) can give one less than you expect. You can see more information about the representation here.

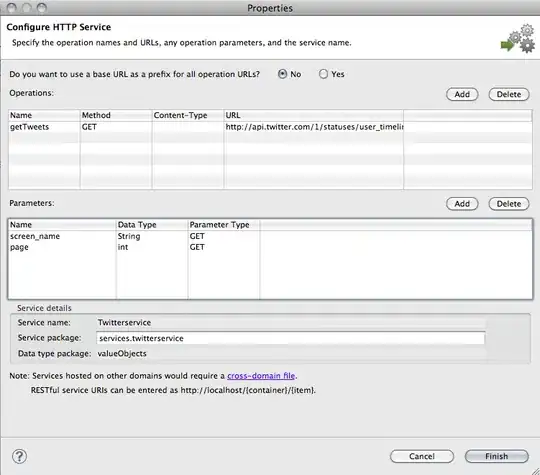

This is an example representation of a single precision floating point number :

4.2 is not among the finitely many values a float can represent, so the compiler stores the closest representable value, which is slightly below 4.2. If you then multiply it by 100 (which is exactly representable as a float), you get 419.99something. Assigning that to an int truncates rather than rounds, so y ends up as 419; printf with %i just prints the int it is given.

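float doesn't have enough precision to store 4.2 exactly; in fact no binary floating-point type can, because 4.2 has no finite binary expansion. If you print x with enough digits you'll see something like 4.1999998. Multiplying by 100 yields roughly 419.99998, and the assignment to int truncates toward zero (for a positive value, that means rounding down). It should work if you make x a double, but only because the double closest to 4.2 happens to lie slightly above it; other values can still come out one short.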