(UPDATE BELOW...)

OpenGL Cocoa/OS X desktop app targeting 10.8+

I have a situation where I am receiving an NSImage object once per frame and would like to convert it to an OpenGL texture (it is a video frame from an IP camera). From SO and the internet, the most helpful utility method I could find for converting an NSImage to a GL texture is the code below.

While this is fine for occasional use (i.e. at load time), it is a massive performance hog and terrible to run once per frame. I have profiled the code and narrowed the bottleneck to two calls. Together these calls account for nearly two thirds of the running time of my entire application.

1. The bitmaprep creation

   ```objc
   NSBitmapImageRep *bitmap = [[NSBitmapImageRep alloc] initWithData:[inputImage TIFFRepresentation]];
   ```

   Thinking that the problem was perhaps in the way I was getting the bitmap, and because I've heard bad things performance wise about TIFFRepresentation, I tried this instead, which is indeed faster, but only a little and also introduced some odd color-shifting (everything looks red):

   ```objc
   NSRect rect = NSMakeRect(0, 0, inputImage.size.width, inputImage.size.height);
   CGImageRef cgImage = [inputImage CGImageForProposedRect:&rect context:[NSGraphicsContext currentContext] hints:nil];
   NSBitmapImageRep *bitmap = [[NSBitmapImageRep alloc] initWithCGImage:cgImage];
   ```

2. The glTexImage2D call (I have no idea how to improve this.)
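A note on the red tint from the `initWithCGImage:` variant: it would be consistent with a channel-order mismatch. A CGImage-backed rep is not guaranteed to hold RGBA bytes, and if the data is alpha-first (ARGB), uploading it as `GL_RGBA` puts the opaque alpha value (255) into the red channel of every pixel. Checking `[bitmap bitmapFormat]` for `NSAlphaFirstBitmapFormat` should confirm or rule this out. The following is a minimal C sketch of the reordering, assuming 32-bit non-planar ARGB pixels with no row padding. That layout is an unverified guess, and `argb_to_rgba_in_place` is a hypothetical helper, not a framework call.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: rewrites 32-bit ARGB pixels as RGBA, in place.
   Assumes tightly packed, non-planar data (4 bytes per pixel, no row padding). */
static void argb_to_rgba_in_place(uint8_t *pixels, size_t pixel_count)
{
    if (pixels == NULL) {
        return;
    }
    for (size_t i = 0; i < pixel_count; ++i) {
        uint8_t *p = pixels + i * 4;
        uint8_t alpha = p[0];   /* ARGB: alpha is stored first */
        p[0] = p[1];            /* red   */
        p[1] = p[2];            /* green */
        p[2] = p[3];            /* blue  */
        p[3] = alpha;           /* RGBA: alpha is stored last */
    }
}
```

If the tint disappears after this pass, the bitmap format was the cause, and a matching format argument to glTexImage2D would likely be a cheaper fix than copying bytes on the CPU.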

How can I make the method below more efficient?

Alternatively, tell me I'm doing it wrong and I should look elsewhere? The frames are coming in as MJPEG, so I could potentially use the NSData before it's converted to NSImage. However, it's JPEG-encoded, so I'd have to deal with decoding it. I also actually want the NSImage object for another part of the application.

```objc
-(void)loadNSImage:(NSImage*)inputImage intoTexture:(GLuint)glTexture{
    // If we are passed an empty image, just quit
    if (inputImage == nil){
        //NSLog(@"LOADTEXTUREFROMNSIMAGE: Error: you called me with an empty image!");
        return;
    }

    // We need to save and restore the pixel state
    [self GLpushPixelState];
    glEnable(GL_TEXTURE_2D);
    glBindTexture(GL_TEXTURE_2D, glTexture);

    // Acquire and flip the data
    NSSize imageSize = inputImage.size;
    if (![inputImage isFlipped]) {
        NSImage *drawImage = [[NSImage alloc] initWithSize:imageSize];
        NSAffineTransform *transform = [NSAffineTransform transform];

        [drawImage lockFocus];

        [transform translateXBy:0 yBy:imageSize.height];
        [transform scaleXBy:1 yBy:-1];
        [transform concat];

        [inputImage drawAtPoint:NSZeroPoint
                       fromRect:(NSRect){NSZeroPoint, imageSize}
                      operation:NSCompositeCopy
                       fraction:1];

        [drawImage unlockFocus];

        inputImage = drawImage;
    }

    NSBitmapImageRep *bitmap = [[NSBitmapImageRep alloc] initWithData:[inputImage TIFFRepresentation]];

    // Now make a texture out of the bitmap data
    // Set proper unpacking row length for bitmap.
    glPixelStorei(GL_UNPACK_ROW_LENGTH, (GLint)[bitmap pixelsWide]);

    // Set byte aligned unpacking (needed for 3 byte per pixel bitmaps).
    glPixelStorei(GL_UNPACK_ALIGNMENT, 1);

    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

    NSInteger samplesPerPixel = [bitmap samplesPerPixel];

    // Nonplanar, RGB 24 bit bitmap, or RGBA 32 bit bitmap.
    if(![bitmap isPlanar] && (samplesPerPixel == 3 || samplesPerPixel == 4)) {
        // Create one OpenGL texture
        // FIXME: Very slow
        glTexImage2D(GL_TEXTURE_2D, 0,
                     GL_RGBA,//samplesPerPixel == 4 ? GL_RGBA8 : GL_RGB8,
                     (GLint)[bitmap pixelsWide],
                     (GLint)[bitmap pixelsHigh],
                     0,
                     GL_RGBA,//samplesPerPixel == 4 ? GL_RGBA : GL_RGB,
                     GL_UNSIGNED_BYTE,
                     [bitmap bitmapData]);

        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP);
    }else{
        [[NSException exceptionWithName:@"ImageFormat" reason:@"Unsupported image format" userInfo:nil] raise];
    }

    [self GLpopPixelState];
}
```
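The flip step in the method above redraws the entire image through `lockFocus` on every frame just to invert it vertically. A minimal C sketch of one alternative, assuming the pixel data is non-planar and that `bytes_per_row` is the value reported by the bitmap rep (`flip_rows_in_place` is a hypothetical helper, not a framework call): swap rows directly in the buffer.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: vertically flips a non-planar pixel buffer in place.
   Returns 0 on success, -1 if the temporary row buffer cannot be allocated. */
static int flip_rows_in_place(uint8_t *pixels, size_t height, size_t bytes_per_row)
{
    if (pixels == NULL || height < 2 || bytes_per_row == 0) {
        return 0; /* nothing to flip */
    }

    uint8_t *tmp = malloc(bytes_per_row);
    if (tmp == NULL) {
        return -1;
    }

    /* Walk inward from both ends, swapping the top row with the bottom row. */
    for (size_t top = 0, bottom = height - 1; top < bottom; ++top, --bottom) {
        uint8_t *a = pixels + top * bytes_per_row;
        uint8_t *b = pixels + bottom * bytes_per_row;
        memcpy(tmp, a, bytes_per_row);
        memcpy(a, b, bytes_per_row);
        memcpy(b, tmp, bytes_per_row);
    }

    free(tmp);
    return 0;
}
```

In the method above it could be called on `[bitmap bitmapData]` with `[bitmap pixelsHigh]` and `[bitmap bytesPerRow]` just before the upload. Flipping the texture coordinates at draw time would avoid touching the pixels at all.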

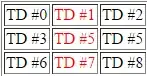

Screenshot of the profile:

Update

Based on Brad's comments below, I decided to look at simply bypassing NSImage. In my particular case I was able to get access to the JPG data as an NSData object prior to it being converted into an NSImage and so this worked great:

[NSBitmapImageRep imageRepWithData: imgData];

Using more or less the same method above but starting directly with the bitmap rep, CPU usage fell from 80% to 20% and I'm satisfied with the speed. I have a solution for my app.

I would still like to know if there is an answer to my original question or if it's best to just accept this as an object lesson in what to avoid. Finally I'm still wondering if it's possible to improve the load time on the glTexImage2D call - although it's now well within the realm of reasonable, it still profiles as taking up 99% of the load of the method (but maybe that's ok.)

===========