A nice way to do this and return a nicely formatted Series is to combine pandas.Series.value_counts with pandas.DataFrame.stack.

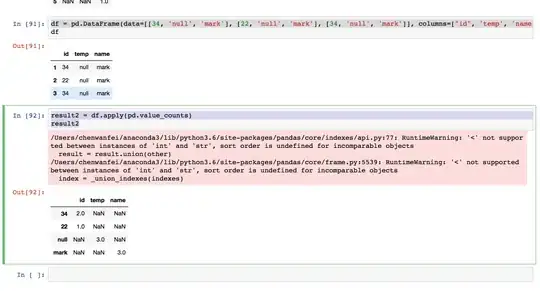

For the DataFrame

```python
import pandas
df = pandas.DataFrame(data=[[34, 'null', 'mark'], [22, 'null', 'mark'], [34, 'null', 'mark']], columns=['id', 'temp', 'name'], index=[1, 2, 3])
```

You can do something like

```python
df.apply(lambda x: x.value_counts()).T.stack()
```

In this code, df.apply(lambda x: x.value_counts()) applies value_counts to every column and combines the results into a DataFrame with the same columns and one row for each distinct value found in any column. Wherever a value doesn't appear in a column, the corresponding cell is NaN (a real missing value, not the string 'null' from the data), so you end up with many NaN cells.
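To see that intermediate step on its own, here is a small sketch that runs only the apply part on the same data and checks where the NaN padding ends up. The variable name counts is just illustrative, and the data is rebuilt so the block runs by itself:

```python
import pandas as pd

# Same sample data as above; "null" is the literal string "null", not a missing value
df = pd.DataFrame(
    data=[[34, "null", "mark"], [22, "null", "mark"], [34, "null", "mark"]],
    columns=["id", "temp", "name"],
    index=[1, 2, 3],
)

# One value_counts() result per column, aligned on the union of all distinct values
counts = df.apply(lambda col: col.value_counts())

print(counts)
print(counts.shape)                     # (4, 3): 4 distinct values overall, 3 columns
print(int(counts.isna().sum().sum()))   # 8: cells where a value is absent from a column
```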

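After that, T transposes the DataFrame, so the original column names become the index and the distinct values become the columns. Finally, stack moves those columns into a new inner level of a MultiIndex, which turns the whole thing into a Series, and drops the NaN cells along the way. Be aware that pandas 2.1 added a new stack implementation (enabled with future_stack=True) that keeps NaN entries instead of dropping them, so on newer versions you may need to append .dropna() to get the same result.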
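Putting the whole chain together, a sketch that should behave the same on older and newer pandas could look like this. The .dropna() and the final astype(int) cast are additions of this sketch, not part of the one-liner above, and the intermediate variable names are illustrative:

```python
import pandas as pd

df = pd.DataFrame(
    data=[[34, "null", "mark"], [22, "null", "mark"], [34, "null", "mark"]],
    columns=["id", "temp", "name"],
    index=[1, 2, 3],
)

counts = df.apply(lambda col: col.value_counts())  # distinct values x columns, NaN-padded
transposed = counts.T                              # original columns x distinct values
stacked = transposed.stack()                       # Series with a (column, value) MultiIndex

# The legacy stack() drops NaN itself; the newer implementation keeps it,
# so dropna() makes both behave the same. The NaN padding forced float64,
# so cast back to integer counts once the NaN entries are gone.
result = stacked.dropna().astype(int)

print(result)
```

The order matters here: astype(int) raises on NaN values, so dropna() has to come first.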

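The result is a Series indexed by (column, value) pairs. The counts are floats because the NaN padding in the intermediate DataFrame forced a float64 dtype: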

```
id    22      1.0
      34      2.0
temp  null    3.0
name  mark    3.0
dtype: float64
```
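If you'd rather skip the NaN padding (and the float dtype) entirely, a different technique is to count each column separately and let pandas.concat build the (column, value) MultiIndex from dictionary keys. This is an alternative sketch, not the apply/T/stack method above:

```python
import pandas as pd

df = pd.DataFrame(
    data=[[34, "null", "mark"], [22, "null", "mark"], [34, "null", "mark"]],
    columns=["id", "temp", "name"],
    index=[1, 2, 3],
)

# Alternative to apply/T/stack: one value_counts() per column, keyed by column name.
# pd.concat turns the dict keys into the outer level of the MultiIndex.
result = pd.concat({col: df[col].value_counts() for col in df.columns})

print(result)  # integer counts, since no NaN padding is ever created
```

Within each column the values come out in value_counts order (most frequent first), rather than in the order shown above.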