PROBLEM

I have a picture that is taken from a swinging vehicle. For simplicity I have converted it into a black and white image. An example is shown below:

The image shows the high intensity returns and has a pattern in it that is found it all of the valid images is circled in red. This image can be taken from multiple angles depending on the rotation of the vehicle. Another example is here:

The intention here is to attempt to identify the picture cells in which this pattern exists.

CURRENT APPROACHES

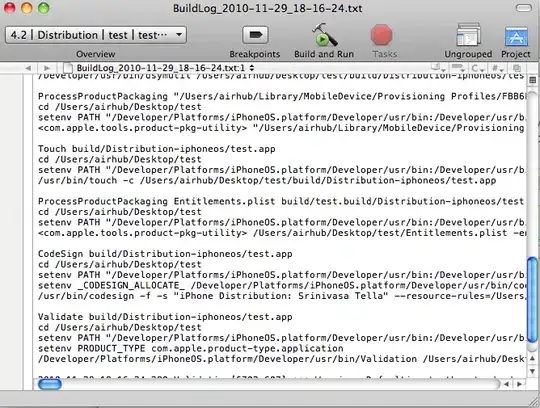

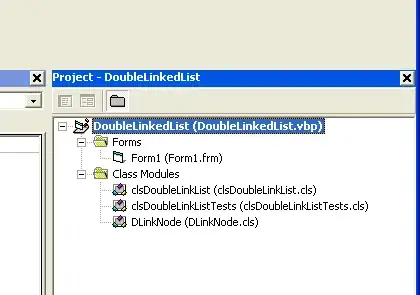

I have tried a couple of methods so far. I am using MATLAB to test but will eventually implement the algorithm in C++. Time efficiency is desirable, but I am interested in any suggestions.

SURF (Speeded Up Robust Features) Feature Recognition

I tried the default matlab implementation of SURF to attempt to find features. Matlab SURF is able to identify features in 2 examples (not the same as above) however, it is not able to identify common ones:

I know that the points are different but the pattern is still somewhat identifiable. I have tried on multiple sets of pictures and there are almost never common points. From reading about SURF it seems like it is not robust to skewed images anyway. Perhaps some recommendations on pre-processing here?

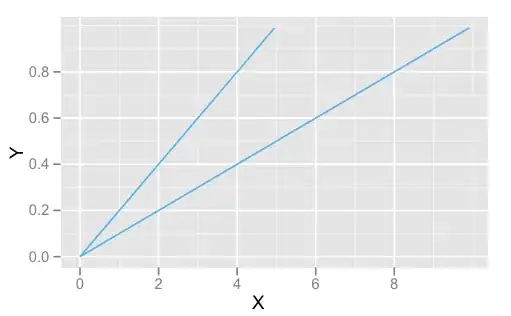

Template Matching

I also tried template matching, but it is not ideal for this application because it is not robust to changes in scale or skew. I am open to pre-processing ideas to fix the skew. This could be quite easy; the extra information available in the picture is discussed further down.

For now let's investigate template matching. Say we have the following two images as the template and the current image:

The template is chosen from one of the most forward-facing images. Using it on a very similar image, we can match the position:

But then (and somewhat obviously) if we change the picture to one taken from a different angle, it won't work. Of course we expect this, because the template no longer looks like the pattern in the image:

So we obviously need some pre-processing work here as well.
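To make the failure mode concrete, here is a minimal sketch of what template matching does, assuming normalized cross-correlation as the similarity measure. This is not the MATLAB code I ran; the function name `ncc_match` and the list-of-rows image layout are made up for illustration, and it uses plain loops only so that it would port directly to C++.

```python
import math

def ncc_match(image, template):
    """Return (row, col, score) of the best normalized cross-correlation match.

    image and template are lists of rows of numbers (grayscale or 0/1).
    """
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    n = th * tw

    # The zero-mean template and its energy never change, so compute them once.
    t_mean = sum(map(sum, template)) / n
    t_zero = [[v - t_mean for v in row] for row in template]
    t_energy = sum(v * v for row in t_zero for v in row)

    best = (0, 0, -1.0)
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            window = [image[r + i][c:c + tw] for i in range(th)]
            w_mean = sum(map(sum, window)) / n
            num = 0.0
            w_energy = 0.0
            for i in range(th):
                for j in range(tw):
                    w = window[i][j] - w_mean
                    num += w * t_zero[i][j]
                    w_energy += w * w
            denom = math.sqrt(w_energy * t_energy)
            score = num / denom if denom > 0 else 0.0  # flat window: no match
            if score > best[2]:
                best = (r, c, score)
    return best

# Hypothetical toy data: a small L-shaped pattern placed at row 2, column 3.
toy = [[0] * 6 for _ in range(5)]
toy[2][3] = toy[3][3] = toy[3][4] = 1
row, col, score = ncc_match(toy, [[1, 0], [1, 1]])
```

The score is computed at a fixed template size and orientation, which is exactly why it degrades as soon as the pattern is scaled or skewed relative to the template.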

Hough Lines and RANSAC

Hough lines and RANSAC might be able to identify the lines for us, but then how do we get the pattern position?
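One untested idea, assuming the pattern is bounded by two of the detected lines (which I have not verified): the intersection of those two lines could serve as a candidate position. The sketch below intersects two lines given in the usual Hough normal form, x·cos(θ) + y·sin(θ) = ρ. The function name `hough_intersection` and the `eps` tolerance are hypothetical.

```python
import math

def hough_intersection(rho1, theta1, rho2, theta2, eps=1e-9):
    """Intersect two lines given as x*cos(theta) + y*sin(theta) = rho.

    Returns (x, y), or None if the lines are (nearly) parallel.
    """
    a1, b1 = math.cos(theta1), math.sin(theta1)
    a2, b2 = math.cos(theta2), math.sin(theta2)
    det = a1 * b2 - a2 * b1  # equals sin(theta2 - theta1)
    if abs(det) < eps:
        return None
    # Cramer's rule on the 2x2 linear system.
    x = (rho1 * b2 - rho2 * b1) / det
    y = (a1 * rho2 - a2 * rho1) / det
    return x, y

# Example: the vertical line x = 3 and the horizontal line y = 5.
point = hough_intersection(3.0, 0.0, 5.0, math.pi / 2)
```

This only gives a point, not the extent or orientation of the pattern, so it would at best be a starting point for a local search.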

Others that I don't know about yet

I am pretty new to the image processing scene, so I would love to hear about any other techniques that would suit this simple yet difficult image recognition problem.

The sensor and how it will help pre-processing

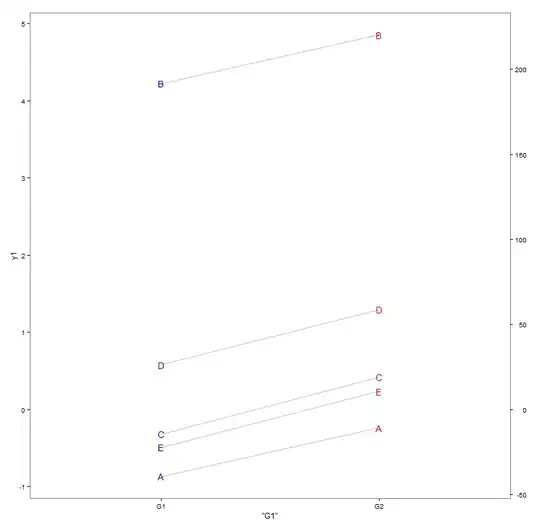

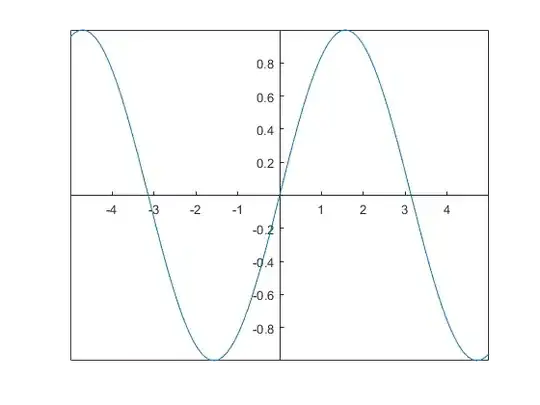

The sensor is a 3D laser. Its output has been turned into an image for this experiment, but it still retains the distance information. If we plot the distance scaled to the range 0-255, we get the following image:

Here, lighter is further away. This could definitely help us to align the image. Any thoughts on the best way? So far I have thought of calculating the normal of the cells that are not 0. We could also do some sort of gradient descent or least-squares fitting so that the difference in distance across the image is 0, which would align the image so that it is always straight. The problem with that is that the solid white stripe is further away. Maybe we could segment that out? At that point we are building algorithms on top of our algorithms, so we need to be careful that this doesn't become a monster.
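To show what I mean by the least-squares idea, here is a rough sketch that fits a plane z = a·x + b·y + c to the non-zero distance cells and then subtracts the tilt. It assumes that 0 means "no return" and that x and y are simply the column and row indices. The names `fit_plane` and `remove_tilt` are made up, and the farther white stripe would bias the fit unless it is masked out first.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def fit_plane(depth):
    """Least-squares fit of z = a*x + b*y + c to the non-zero cells.

    depth is a list of rows; x is the column index, y the row index.
    Returns (a, b, c).
    """
    sxx = sxy = syy = sx = sy = n = sxz = syz = sz = 0.0
    for y, row in enumerate(depth):
        for x, z in enumerate(row):
            if z == 0:  # assumed to mean "no return", so skip it
                continue
            sxx += x * x
            sxy += x * y
            syy += y * y
            sx += x
            sy += y
            n += 1
            sxz += x * z
            syz += y * z
            sz += z
    # Normal equations A * [a, b, c] = rhs, solved with Cramer's rule.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = det3(A)
    if d == 0:
        raise ValueError("need at least three non-collinear non-zero cells")
    coeffs = []
    for k in range(3):
        Ak = [r[:] for r in A]
        for i in range(3):
            Ak[i][k] = rhs[i]  # replace column k with the right-hand side
        coeffs.append(det3(Ak) / d)
    return tuple(coeffs)

def remove_tilt(depth):
    """Subtract the fitted slope so the fitted plane becomes flat."""
    a, b, _ = fit_plane(depth)
    return [[z - (a * x + b * y) if z != 0 else 0 for x, z in enumerate(row)]
            for y, row in enumerate(depth)]
```

The vector (a, b, -1) is an unnormalized normal of the fitted plane in pixel units. Note that `remove_tilt` only levels the distance values; it does not warp the intensity image itself, which would still need a separate re-projection step.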

Any help or ideas would be great. I am happy to look into any serious answer!