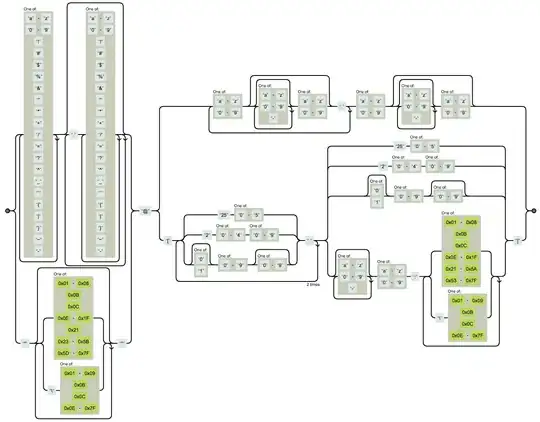

My objective here is simply to detect the seat no matter where the image is positioned. To make things even simpler, this is the only image that will be shown on the screen, but its position may change: the user may move it right, left, up, or down, so that possibly only part of the image is visible.

I read this thread that shows how to "brute-force" the search for a subset of an image, but when I tried it, the detection took 100+ seconds (a really long time, even though I'm not looking for real-time). I also think my challenge is simpler.
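
For context, the brute-force idea boils down to something like the sketch below. This is a minimal illustration, not the code from that thread: the function name `find_subimage` and the representation of an image as a list of pixel rows are assumptions made for the example, and it only finds exact pixel matches.

```python
def find_subimage(screen, template):
    """Return (row, col) of the first exact match of template in screen, or None.

    Both images are lists of rows; each row is a list of pixel values.
    (Hypothetical helper for illustration only.)
    """
    sh, sw = len(screen), len(screen[0])
    th, tw = len(template), len(template[0])
    # Try every top-left position where the template fits entirely.
    for r in range(sh - th + 1):
        for c in range(sw - tw + 1):
            # Compare the template row by row against the screen window.
            if all(screen[r + dr][c:c + tw] == template[dr] for dr in range(th)):
                return (r, c)
    return None


# Tiny made-up example: a 2x2 "seat" placed inside a 3x4 "screen".
screen = [
    [0, 0, 0, 0],
    [0, 1, 2, 0],
    [0, 3, 4, 0],
]
seat = [
    [1, 2],
    [3, 4],
]
print(find_subimage(screen, seat))
```

In the worst case the work grows with the number of screen positions times the number of template pixels, which would explain why it gets slow on a full-size screenshot.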

Q: What should my approach be here? I've never tried anything with image processing, but I'm ready to go down that path (if it's applicable here).

Thanks!

This is the image that will be shown on the screen (possibly only part of it; say the user moved it all the way to the right, so that only the rear wheel with the seat is visible).

The subset image always looks like this: