I am doing image stitching in OpenCV (a panorama), but I have one problem.

I can't use OpenCV's `Stitcher` class, so I have to build the stitching myself using only feature points and homographies.

```cpp
//-- Step 1: Detect keypoints with ORB
// minHessian is a SURF threshold; ORB's first constructor argument is the
// maximum number of features to keep (500 is ORB's default)
int nfeatures = 500;
OrbFeatureDetector detector( nfeatures );

std::vector<KeyPoint> keypoints_1, keypoints_2;

// The optional third argument of detect() is a mask, not a descriptor output
detector.detect( img_1, keypoints_1 );
detector.detect( img_2, keypoints_2 );

//-- Step 2: Calculate descriptors (feature vectors)
OrbDescriptorExtractor extractor;
Mat descriptors_1, descriptors_2;
extractor.compute( img_1, keypoints_1, descriptors_1 );
extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Match descriptor vectors with a brute-force matcher
// NORM_HAMMING suits ORB's binary descriptors; crossCheck = true keeps mutual best matches only
BFMatcher matcher(NORM_HAMMING, true);
std::vector< DMatch > matches;
matcher.match( descriptors_1, descriptors_2, matches );
```
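For ORB, each descriptor is a 32-byte (256-bit) binary string, so `NORM_HAMMING` measures the number of differing bits, and passing `true` as the second `BFMatcher` argument enables cross-checking: a pair is kept only if each descriptor is the other's nearest neighbour. A minimal plain-C++ sketch of that rule, assuming a hypothetical `Match` struct in place of `cv::DMatch` (tie-breaking may differ from OpenCV's):

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// A binary descriptor (ORB uses 32 bytes = 256 bits).
using Descriptor = std::vector<std::uint8_t>;

// Hypothetical stand-in for cv::DMatch.
struct Match {
    int queryIdx;  // index into the first descriptor set
    int trainIdx;  // index into the second descriptor set
    int distance;  // Hamming distance between the two descriptors
};

// Number of differing bits between two equally sized binary descriptors.
int hammingDistance(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    int d = 0;
    for (std::size_t i = 0; i < a.size(); ++i)
        d += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    return d;
}

// Index of the descriptor in `set` closest to `d` (first one on ties), or -1 if empty.
int nearest(const Descriptor& d, const std::vector<Descriptor>& set) {
    int best = -1;
    int bestDist = std::numeric_limits<int>::max();
    for (std::size_t j = 0; j < set.size(); ++j) {
        int dist = hammingDistance(d, set[j]);
        if (dist < bestDist) { bestDist = dist; best = static_cast<int>(j); }
    }
    return best;
}

// Brute-force matching with cross-check: keep (i, j) only if j is i's nearest
// neighbour in set2 AND i is j's nearest neighbour in set1.
std::vector<Match> crossCheckMatch(const std::vector<Descriptor>& set1,
                                   const std::vector<Descriptor>& set2) {
    std::vector<Match> out;
    for (std::size_t i = 0; i < set1.size(); ++i) {
        int j = nearest(set1[i], set2);
        if (j >= 0 && nearest(set2[j], set1) == static_cast<int>(i))
            out.push_back({static_cast<int>(i), j, hammingDistance(set1[i], set2[j])});
    }
    return out;
}
```

Cross-checking is why `matches` can contain fewer entries than `keypoints_1`: descriptors without a mutual partner are dropped before any distance filtering.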

This gives me the matched feature points in `matches`, but I need to filter them:

```cpp
//-- Quick calculation of max and min distances between matched descriptors
double max_dist = 0; double min_dist = 100;
for( size_t i = 0; i < matches.size(); i++ )
{
    double dist = matches[i].distance;
    // Ignore near-zero distances when looking for the minimum
    if( dist < min_dist && dist > 3 ) min_dist = dist;
    if( dist > max_dist ) max_dist = dist;
}

//-- Keep only "good" matches (i.e. whose distance is less than 3*min_dist)
std::vector< DMatch > good_matches;
for( size_t i = 0; i < matches.size(); i++ )
{
    if( matches[i].distance < 3*min_dist && matches[i].distance > 3 )
        good_matches.push_back( matches[i] );
}
```
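The filtering heuristic above can be isolated into a function and tested on its own. A minimal sketch, assuming a hypothetical `Match` struct in place of `cv::DMatch` and a hypothetical `filterMatches` helper; unlike the original loop, which starts `min_dist` at 100, it uses the true smallest distance above the cut-off and returns nothing if no distance exceeds it:

```cpp
#include <vector>

// Hypothetical stand-in for cv::DMatch.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keeps matches whose distance lies in (ignoreBelow, factor * minDist), where
// minDist is the smallest distance greater than ignoreBelow. The defaults
// reproduce the "3*min_dist" and "> 3" constants used above.
std::vector<Match> filterMatches(const std::vector<Match>& matches,
                                 float factor = 3.0f, float ignoreBelow = 3.0f) {
    float minDist = -1.0f;  // -1 means "no candidate found yet"
    for (const Match& m : matches)
        if (m.distance > ignoreBelow && (minDist < 0.0f || m.distance < minDist))
            minDist = m.distance;

    std::vector<Match> good;
    if (minDist < 0.0f) return good;  // no distance above the cut-off
    for (const Match& m : matches)
        if (m.distance > ignoreBelow && m.distance < factor * minDist)
            good.push_back(m);
    return good;
}
```

Because the threshold is relative to the single best match, one unusually good match can shrink `good_matches` sharply; printing `good_matches.size()` before calling `findHomography` is a cheap sanity check.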

Next, I calculate the homography:

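```cpp
// Collect the point pairs from the filtered matches (not from all matches)
vector<Point2f> p1, p2;
for (size_t i = 0; i < good_matches.size(); i++) {
    p1.push_back(keypoints_1[good_matches[i].queryIdx].pt);
    p2.push_back(keypoints_2[good_matches[i].trainIdx].pt);
}

// Homography mapping image 1 points onto image 2 (needs at least 4 pairs)
vector<unsigned char> match_mask;
Mat h = findHomography(Mat(p1), Mat(p2), match_mask, CV_RANSAC);
```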
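`findHomography` returns a 3x3 matrix `h` that maps a point of image 1 to image 2 in homogeneous coordinates, and with `CV_RANSAC` the entries of `match_mask` mark the pairs it treated as inliers. The sketch below shows the per-point mapping (what `perspectiveTransform` applies) and an inlier count based on reprojection error. The 3-pixel default mirrors `findHomography`'s default `ransacReprojThreshold`; `Pt`, `H3`, `applyHomography` and `countInliers` are hypothetical helpers, not OpenCV API:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };
// Row-major 3x3 homography, like the CV_64F Mat returned by findHomography.
using H3 = std::array<double, 9>;

// Maps p through H: [x' y' w]^T = H [x y 1]^T, then divides by w.
Pt applyHomography(const H3& H, Pt p) {
    double x = H[0] * p.x + H[1] * p.y + H[2];
    double y = H[3] * p.x + H[4] * p.y + H[5];
    double w = H[6] * p.x + H[7] * p.y + H[8];
    assert(w != 0.0);  // the point would map to infinity
    return {x / w, y / w};
}

// Counts pairs whose reprojection error |H*p1 - p2| is within `threshold` pixels.
std::size_t countInliers(const H3& H, const std::vector<Pt>& p1,
                         const std::vector<Pt>& p2, double threshold = 3.0) {
    assert(p1.size() == p2.size());
    std::size_t n = 0;
    for (std::size_t i = 0; i < p1.size(); ++i) {
        Pt q = applyHomography(H, p1[i]);
        if (std::hypot(q.x - p2[i].x, q.y - p2[i].y) <= threshold) ++n;
    }
    return n;
}
```

If only a handful of pairs survive as inliers, the homography is poorly constrained and the warped image can end up strongly skewed, which enlarges the black regions around it.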

Finally, I compute the transformation matrix and apply `warpPerspective` to join the two images. My problem is that black areas appear around the photo in the final image, and when I repeat this in a loop to add more images, the result becomes illegible.
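The canvas bookkeeping in the following code (projecting image 1's corners, taking a bounding box that must also contain image 2, and shifting by `Htr`) can be checked in isolation. A minimal sketch in plain C++, assuming a row-major `std::array` stands in for the 3x3 `CV_64F` homography; `Canvas` and `computeCanvas` are hypothetical names:

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using H3 = std::array<double, 9>;  // row-major 3x3 homography

struct Canvas {
    int width, height;
    H3 shift;  // translation (like Htr) moving everything to non-negative coordinates
};

// Canvas holding image 2 (w2 x h2, at its own origin) plus image 1 (w1 x h1)
// warped by H, and the translation that brings negative coordinates on-canvas.
Canvas computeCanvas(const H3& H, int w1, int h1, int w2, int h2) {
    // Same corner order as the code: (0,0), (w,0), (0,h), (w,h)
    const double cx[4] = {0.0, double(w1), 0.0, double(w1)};
    const double cy[4] = {0.0, 0.0, double(h1), double(h1)};
    // Start from image 2's rectangle [0, w2] x [0, h2]
    double minX = 0.0, minY = 0.0, maxX = w2, maxY = h2;
    for (int i = 0; i < 4; ++i) {
        double w = H[6] * cx[i] + H[7] * cy[i] + H[8];
        double x = (H[0] * cx[i] + H[1] * cy[i] + H[2]) / w;
        double y = (H[3] * cx[i] + H[4] * cy[i] + H[5]) / w;
        minX = std::min(minX, x); maxX = std::max(maxX, x);
        minY = std::min(minY, y); maxY = std::max(maxY, y);
    }
    Canvas c;
    c.width = static_cast<int>(std::ceil(maxX - minX));
    c.height = static_cast<int>(std::ceil(maxY - minY));
    c.shift = {1.0, 0.0, -minX, 0.0, 1.0, -minY, 0.0, 0.0, 1.0};
    return c;
}
```

This only guarantees that no image content is cut off. Canvas pixels covered by neither image still stay black, because `warpPerspective` fills them with the border value.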

```cpp
// Project the four corners of image 1 into the frame of image 2
vector<Point2f> cuatroPuntos;
cuatroPuntos.push_back(Point2f (0,0));
cuatroPuntos.push_back(Point2f (img_1.size().width,0));
cuatroPuntos.push_back(Point2f (0, img_1.size().height));
cuatroPuntos.push_back(Point2f (img_1.size().width, img_1.size().height));

Mat MDestino;
perspectiveTransform(Mat(cuatroPuntos), MDestino, h);

// Bounding box of the projected corners of image 1
double min_x, min_y, tam_x, tam_y;
float min_x1, min_x2, min_y1, min_y2, max_x1, max_x2, max_y1, max_y2;
min_x1 = min(MDestino.at<Point2f>(0).x, MDestino.at<Point2f>(1).x);
min_x2 = min(MDestino.at<Point2f>(2).x, MDestino.at<Point2f>(3).x);
min_y1 = min(MDestino.at<Point2f>(0).y, MDestino.at<Point2f>(1).y);
min_y2 = min(MDestino.at<Point2f>(2).y, MDestino.at<Point2f>(3).y);
max_x1 = max(MDestino.at<Point2f>(0).x, MDestino.at<Point2f>(1).x);
max_x2 = max(MDestino.at<Point2f>(2).x, MDestino.at<Point2f>(3).x);
max_y1 = max(MDestino.at<Point2f>(0).y, MDestino.at<Point2f>(1).y);
max_y2 = max(MDestino.at<Point2f>(2).y, MDestino.at<Point2f>(3).y);
min_x = min(min_x1, min_x2);
min_y = min(min_y1, min_y2);
// The canvas must also cover image 2, which occupies [0, width] x [0, height]
tam_x = max(max(max_x1, max_x2), (float)img_2.size().width);
tam_y = max(max(max_y1, max_y2), (float)img_2.size().height);

// Translation matrix: shift everything right/down if image 1 lands at negative coordinates
Mat Htr = Mat::eye(3,3,CV_64F);
if (min_x < 0){
    tam_x -= min_x;
    Htr.at<double>(0,2) = -min_x;
}
if (min_y < 0){
    tam_y -= min_y;
    Htr.at<double>(1,2) = -min_y;
}

// Build the panorama. warpPerspective allocates Panorama with img_2's type,
// so it does not need to be pre-created (CV_32F was the wrong type anyway)
Mat Panorama;
Size panoSize(cvCeil(tam_x), cvCeil(tam_y));
warpPerspective(img_2, Panorama, Htr, panoSize, INTER_LINEAR, BORDER_CONSTANT, 0);
// BORDER_TRANSPARENT leaves pixels outside the warped image 1 untouched
warpPerspective(img_1, Panorama, (Htr*h), panoSize, INTER_LINEAR, BORDER_TRANSPARENT, 0);
```
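When the panorama is fed back in as one of the two inputs, its black border is treated as ordinary image content: it can be pasted over real pixels, and its edges attract spurious keypoints. A common remedy is to carry a validity mask alongside each image and composite only where the mask is set. The plain C++ sketch below shows that compositing rule on a hypothetical single-channel `Layer` type; in OpenCV the pixel copy corresponds to `Mat::copyTo(dst, mask)`, and the accumulated mask could also be passed as the mask argument of `detect()` in the next iteration:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical single-channel image: row-major pixels plus a validity mask
// (255 where the pixel came from a source image, 0 where the warp left a gap).
struct Layer {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;
    std::vector<std::uint8_t> mask;
};

// Copies `top` onto `base` only where top.mask is set, and marks those pixels
// as valid in base.mask, so black gaps in `top` never overwrite real content.
void compositeOver(Layer& base, const Layer& top) {
    assert(base.width == top.width && base.height == top.height);
    assert(base.pixels.size() == top.pixels.size());
    for (std::size_t i = 0; i < base.pixels.size(); ++i) {
        if (top.mask[i]) {
            base.pixels[i] = top.pixels[i];
            base.mask[i] = 255;
        }
    }
}
```

The masks themselves can be produced by warping an all-white image with the same matrices (`Htr` and `Htr*h`), preferably with `INTER_NEAREST` so that edge pixels are not half-blended with black.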

Does anyone know how I can eliminate these black areas? Am I doing something wrong? Does anyone know of working code I could compare mine against?

Thanks for your time.

EDIT:

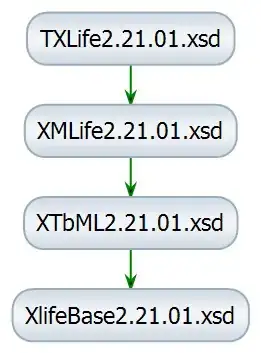

That is my image:

And I want to eliminate the black part.
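One common way to remove the black part is to crop the panorama to the largest axis-aligned rectangle that contains only valid pixels. The validity mask can be obtained, for example, by warping all-white `CV_8U` images with the same transforms and OR-ing the results. The sketch below finds that rectangle with the classic "largest rectangle in a histogram" stack method; `CropRect` and `largestValidRect` are hypothetical names, and the mask is assumed to be a row-major byte buffer:

```cpp
#include <cstddef>
#include <cstdint>
#include <stack>
#include <vector>

struct CropRect { int x = 0, y = 0, width = 0, height = 0; };

// Largest axis-aligned rectangle containing only non-zero mask pixels.
// For each row, heights[c] counts consecutive valid pixels ending at that row
// in column c; the largest rectangle under this histogram is found with a stack.
CropRect largestValidRect(const std::vector<std::uint8_t>& mask, int width, int height) {
    std::vector<int> heights(width, 0);
    CropRect best;
    for (int r = 0; r < height; ++r) {
        for (int c = 0; c < width; ++c)
            heights[c] = mask[static_cast<std::size_t>(r) * width + c] ? heights[c] + 1 : 0;
        std::stack<int> st;  // column indices with increasing heights
        for (int c = 0; c <= width; ++c) {
            int h = (c == width) ? 0 : heights[c];  // sentinel flushes the stack
            while (!st.empty() && heights[st.top()] >= h) {
                int top = st.top();
                st.pop();
                int left = st.empty() ? 0 : st.top() + 1;
                int w = c - left;
                if (heights[top] * w > best.width * best.height) {
                    best.x = left;
                    best.width = w;
                    best.height = heights[top];
                    best.y = r - heights[top] + 1;
                }
            }
            st.push(c);
        }
    }
    return best;
}
```

The result can be applied with `Panorama(cv::Rect(r.x, r.y, r.width, r.height))`. Cropping this way discards some real image content near the tilted edges, so it trades coverage for a clean rectangular result.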