Use splines. The relevant part of the function is shown in the figure below. It varies approximately like the 5th root, so the problematic zone is close to p / p0 = 0. There is mathematical theory on how to place the knots of splines optimally to minimize the error (see Carl de Boor: A Practical Guide to Splines, PGS for short). Usually one constructs the spline in B-form ahead of time (using toolboxes such as MATLAB's Spline Toolbox, also written by C. de Boor), then converts it to piecewise polynomial (pp) form for fast evaluation.
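A minimal sketch of why the pp form is fast to evaluate. It assumes a hypothetical `PPForm` layout (breakpoints plus local Taylor coefficients per piece, which is not necessarily PPPACK's exact storage scheme): evaluation is a binary search for the right piece followed by one Horner loop, with no B-spline recurrences at run time.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical layout: piece j covers [breaks[j], breaks[j+1]) and stores
// local Taylor coefficients, so that with h = x - breaks[j]
//   s(x) = coefs[j][0] + coefs[j][1]*h + coefs[j][2]*h^2 + ...
struct PPForm {
    std::vector<double> breaks;              // l+1 increasing breakpoints
    std::vector<std::vector<double>> coefs;  // l pieces of local coefficients
};

// Evaluate the pp form at x; points outside [breaks.front(), breaks.back()]
// are extrapolated with the first or last piece.
double pp_eval(const PPForm& pp, double x) {
    assert(!pp.coefs.empty() && pp.breaks.size() == pp.coefs.size() + 1);
    // Binary search over the interior breakpoints only: the number of
    // interior breaks <= x is exactly the index of the piece.
    auto first = pp.breaks.begin() + 1;
    auto last = pp.breaks.end() - 1;
    std::size_t j = static_cast<std::size_t>(std::upper_bound(first, last, x) - first);
    const double h = x - pp.breaks[j];
    const std::vector<double>& c = pp.coefs[j];
    // Horner's rule, highest power first.
    double s = 0.0;
    for (std::size_t m = c.size(); m-- > 0;) s = s * h + c[m];
    return s;
}
```

For example, `PPForm pp{{0.0, 1.0, 2.0}, {{1.0, -1.0}, {0.0, 1.0}}}` describes |x - 1| on [0, 2] as two linear pieces.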

In C. de Boor, PGS, the function g(x) = sqrt(x + 1) is actually taken as an example (Chapter 12, Example II). This is exactly what you need here. The book comes back to this case a few times, since it is admittedly a hard problem for any interpolation scheme due to the infinite derivatives at x = -1. All software from PGS is available for free as PPPACK in netlib, and most of it is also part of SLATEC (also from netlib).
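One common way to place knots for a function behaving like x^α near 0 is a graded mesh t_i = (i/N)^β. The following is my own heuristic derivation, not taken from PGS: on a piece of width h_i starting at t_i > 0, an order-k approximation (degree k-1) has an error of roughly |f^(k)(t_i)| h_i^k, proportional to t_i^(α-k) h_i^k. With t_i = (i/N)^β one has h_i ≈ β t_i^(1-1/β) / N, so the error is proportional to β^k N^(-k) t_i^(α-k/β), which is the same on every piece exactly when β = k/α. The first piece [0, t_1] contributes about t_1^α = N^(-βα) = N^(-k) as well. For α = 0.19029 and cubic splines (k = 4) this gives β ≈ 21, so the first knot after 0 is extremely close to 0, which illustrates why this zone is so hard. The function name `graded_breaks` is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Graded breakpoints t_i = (i/N)^beta on [0, 1] with beta = k/alpha, for
// approximating x^alpha by piecewise polynomials of order k (degree k-1).
// Heuristic sketch: beta = k/alpha equalizes the local error estimates.
std::vector<double> graded_breaks(int N, int k, double alpha) {
    assert(N >= 1 && k >= 1 && alpha > 0.0);
    const double beta = k / alpha;
    std::vector<double> t(N + 1);
    for (int i = 0; i <= N; ++i)
        t[i] = std::pow(static_cast<double>(i) / N, beta);
    t[N] = 1.0;  // guard against rounding in pow at the right end
    return t;
}
```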

Edit (Removed)

(Multiplying by x once does not significantly help, since it only regularizes the first derivative, while all other derivatives at x = 0 are still infinite.)
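Writing α = 0.19029 for the exponent, the derivatives of x·x^α spell out the remark above: the first derivative stays finite, every higher one still blows up at 0.

```latex
\begin{aligned}
f(x) &= x \cdot x^{\alpha} = x^{1+\alpha}, \qquad \alpha = 0.19029,\\
f'(x) &= (1+\alpha)\,x^{\alpha} \to 0 \quad (x \to 0^+),\\
f''(x) &= (1+\alpha)\,\alpha\,x^{\alpha-1} \to \infty \quad (x \to 0^+),\\
f^{(m)}(x) &= (1+\alpha)\,\alpha\,(\alpha-1)\cdots(\alpha-m+2)\,x^{1+\alpha-m}
  \quad \text{(unbounded near 0 for } m \ge 2\text{)}.
\end{aligned}
```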

Edit 2

My feeling is that optimally constructed splines (following de Boor) will be best (and fastest) for relatively low accuracy requirements. If the accuracy requirements are high (say 1e-8), one may be forced to go back to the algorithms that mathematicians have been researching for centuries. At that point, it may be best to simply download the glibc sources and copy (provided the LGPL is acceptable) whatever is in

`glibc-2.19/sysdeps/ieee754/dbl-64/e_pow.c`

Since we don't have to include the whole math.h, there shouldn't be a problem with memory, but we will only marginally profit from having a fixed exponent.
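The reason a fixed exponent helps so little is that pow-style algorithms compute x^y = 2^(y·log2 x): all the work goes into log2 x and into the final power of two, while y enters through a single multiplication. The following simplified sketch (hypothetical `pow_sketch`) shows that structure in plain double precision. It omits the hi/lo splitting that gives e_pow.c its last bits of accuracy and delegates the final step to `std::exp2`, so treat it as an illustration, not a replacement.

```cpp
#include <cassert>
#include <cmath>

// Simplified sketch of pow's structure: x^y = 2^(y*log2(x)), for x >= 0, y > 0.
double pow_sketch(double x, double y) {
    assert(x >= 0.0 && std::isfinite(x));
    if (x == 0.0) return 0.0;                  // valid for y > 0
    const double kSqrtHalf = 0.70710678118654752440;
    const double kInvLn2 = 1.44269504088896340736;
    int n;
    double m = std::frexp(x, &n);              // x = m * 2^n, 0.5 <= m < 1
    if (m < kSqrtHalf) { m *= 2.0; --n; }      // now sqrt(0.5) <= m < sqrt(2)
    // log(m) = 2*atanh(s) = 2*(s + s^3/3 + s^5/5 + ...), |s| <= 0.1716
    const double s = (m - 1.0) / (m + 1.0);
    const double s2 = s * s;
    const double ln_m = 2.0 * s * (1.0 + s2 * (1.0/3 + s2 * (1.0/5 + s2 * (1.0/7
                      + s2 * (1.0/9 + s2 * (1.0/11 + s2 * (1.0/13 + s2 / 15)))))));
    const double log2x = n + ln_m * kInvLn2;   // log2(x) = n + log2(m)
    return std::exp2(y * log2x);               // the exponent y enters only here
}
```

Rounding the sum `n + log2(m)` for large |n| (tiny x) is one of the places where the extra-precision tricks of e_pow.c pay off.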

Edit 3

Here is an adapted version of e_pow.c from netlib (fdlibm), as found by @Joni. This seems to be the grandfather of glibc's more modern implementation mentioned above. The old version has two advantages: (1) its license is permissive (it only asks that the original Sun copyright notice be preserved), and (2) it uses a limited number of constants, which is beneficial if memory is a tight resource (glibc's version defines over 10000 lines of constants!). The following is completely standalone code, which calculates x^0.19029 for 0 <= x <= 1 to double precision (I tested it against Python's power function and found that at most 2 bits differed):

```c
/* Access to the high and low 32-bit words of a double, as in fdlibm.
   These macros pun types through pointer casts, so compile with
   -fno-strict-aliasing. Remove the next line on big-endian machines. */
#define __LITTLE_ENDIAN

#ifdef __LITTLE_ENDIAN
#define __HI(x) *(1+(int*)&x)
#define __LO(x) *(int*)&x
#else
#define __HI(x) *(int*)&x
#define __LO(x) *(1+(int*)&x)
#endif

static const double
bp[] = {1.0, 1.5,},
dp_h[] = { 0.0, 5.84962487220764160156e-01,}, /* 0x3FE2B803, 0x40000000 */
dp_l[] = { 0.0, 1.35003920212974897128e-08,}, /* 0x3E4CFDEB, 0x43CFD006 */
zero = 0.0,
one = 1.0,
two = 2.0,
two53 = 9007199254740992.0, /* 0x43400000, 0x00000000 */
/* poly coefs for (3/2)*(log(x)-2s-2/3*s**3 */
L1 = 5.99999999999994648725e-01, /* 0x3FE33333, 0x33333303 */
L2 = 4.28571428578550184252e-01, /* 0x3FDB6DB6, 0xDB6FABFF */
L3 = 3.33333329818377432918e-01, /* 0x3FD55555, 0x518F264D */
L4 = 2.72728123808534006489e-01, /* 0x3FD17460, 0xA91D4101 */
L5 = 2.30660745775561754067e-01, /* 0x3FCD864A, 0x93C9DB65 */
L6 = 2.06975017800338417784e-01, /* 0x3FCA7E28, 0x4A454EEF */
P1 = 1.66666666666666019037e-01, /* 0x3FC55555, 0x5555553E */
P2 = -2.77777777770155933842e-03, /* 0xBF66C16C, 0x16BEBD93 */
P3 = 6.61375632143793436117e-05, /* 0x3F11566A, 0xAF25DE2C */
P4 = -1.65339022054652515390e-06, /* 0xBEBBBD41, 0xC5D26BF1 */
P5 = 4.13813679705723846039e-08, /* 0x3E663769, 0x72BEA4D0 */
lg2 = 6.93147180559945286227e-01, /* 0x3FE62E42, 0xFEFA39EF */
lg2_h = 6.93147182464599609375e-01, /* 0x3FE62E43, 0x00000000 */
lg2_l = -1.90465429995776804525e-09, /* 0xBE205C61, 0x0CA86C39 */
ovt = 8.0085662595372944372e-0017, /* -(1024-log2(ovfl+.5ulp)) */
cp = 9.61796693925975554329e-01, /* 0x3FEEC709, 0xDC3A03FD =2/(3ln2) */
cp_h = 9.61796700954437255859e-01, /* 0x3FEEC709, 0xE0000000 =(float)cp */
cp_l = -7.02846165095275826516e-09, /* 0xBE3E2FE0, 0x145B01F5 =tail of cp_h*/
ivln2 = 1.44269504088896338700e+00, /* 0x3FF71547, 0x652B82FE =1/ln2 */
ivln2_h = 1.44269502162933349609e+00, /* 0x3FF71547, 0x60000000 =24b 1/ln2*/
ivln2_l = 1.92596299112661746887e-08; /* 0x3E54AE0B, 0xF85DDF44 =1/ln2 tail*/

/* x^0.19029 for 0 <= x <= 1, adapted from fdlibm's e_pow.c */
double pow0p19029(double x)
{
    double y = 0.19029e+00;
    double z,ax,z_h,z_l,p_h,p_l;
    double y1,t1,t2,r,s,t,u,v,w;
    int i,j,k,n;
    int hx,hy,ix,iy;
    unsigned lx,ly;

    hx = __HI(x); lx = __LO(x);
    hy = __HI(y); ly = __LO(y);
    ix = hx&0x7fffffff; iy = hy&0x7fffffff;
    ax = x;

    /* special value of x */
    if(lx==0) {
        if(ix==0x7ff00000||ix==0||ix==0x3ff00000){
            z = ax; /*x is +-0,+-inf,+-1*/
            return z;
        }
    }

    s = one; /* s (sign of result -ve**odd) = -1 else = 1 */
    double ss,s2,s_h,s_l,t_h,t_l;
    /* Scale by 2^53 (exact for 0 < x <= 1) so that subnormal x is
       normalized as well, as fdlibm does with two53; n undoes the factor. */
    ax *= two53;
    ix = __HI(ax)&0x7fffffff;
    n = ((ix)>>20)-0x3ff-53;
    j = ix&0x000fffff;
    /* determine interval */
    ix = j|0x3ff00000;                 /* normalize ix */
    if(j<=0x3988E) k=0;                /* |x|<sqrt(3/2) */
    else if(j<0xBB67A) k=1;            /* |x|<sqrt(3) */
    else {k=0;n+=1;ix -= 0x00100000;}
    __HI(ax) = ix;

    /* compute ss = s_h+s_l = (x-1)/(x+1) or (x-1.5)/(x+1.5) */
    u = ax-bp[k];                      /* bp[0]=1.0, bp[1]=1.5 */
    v = one/(ax+bp[k]);
    ss = u*v;
    s_h = ss;
    __LO(s_h) = 0;
    /* t_h=ax+bp[k] High */
    t_h = zero;
    __HI(t_h)=((ix>>1)|0x20000000)+0x00080000+(k<<18);
    t_l = ax - (t_h-bp[k]);
    s_l = v*((u-s_h*t_h)-s_h*t_l);

    /* compute log(ax) */
    s2 = ss*ss;
    r = s2*s2*(L1+s2*(L2+s2*(L3+s2*(L4+s2*(L5+s2*L6)))));
    r += s_l*(s_h+ss);
    s2 = s_h*s_h;
    t_h = 3.0+s2+r;
    __LO(t_h) = 0;
    t_l = r-((t_h-3.0)-s2);
    /* u+v = ss*(1+...) */
    u = s_h*t_h;
    v = s_l*t_h+t_l*ss;
    /* 2/(3log2)*(ss+...) */
    p_h = u+v;
    __LO(p_h) = 0;
    p_l = v-(p_h-u);
    z_h = cp_h*p_h;                    /* cp_h+cp_l = 2/(3*log2) */
    z_l = cp_l*p_h+p_l*cp+dp_l[k];
    /* log2(ax) = (ss+..)*2/(3*log2) = n + dp_h + z_h + z_l */
    t = (double)n;
    t1 = (((z_h+z_l)+dp_h[k])+t);
    __LO(t1) = 0;
    t2 = z_l-(((t1-t)-dp_h[k])-z_h);

    /* split up y into y1+y2 and compute (y1+y2)*(t1+t2) */
    y1 = y;
    __LO(y1) = 0;
    p_l = (y-y1)*t1+y*t2;
    p_h = y1*t1;
    z = p_l+p_h;
    j = __HI(z);
    i = __LO(z);

    /*
     * compute 2**(p_h+p_l)
     */
    i = j&0x7fffffff;
    k = (i>>20)-0x3ff;
    n = 0;
    if(i>0x3fe00000) {                 /* if |z| > 0.5, set n = [z+0.5] */
        n = j+(0x00100000>>(k+1));
        k = ((n&0x7fffffff)>>20)-0x3ff;   /* new k for n */
        t = zero;
        __HI(t) = (n&~(0x000fffff>>k));
        n = ((n&0x000fffff)|0x00100000)>>(20-k);
        if(j<0) n = -n;
        p_h -= t;
    }
    t = p_l+p_h;
    __LO(t) = 0;
    u = t*lg2_h;
    v = (p_l-(t-p_h))*lg2+t*lg2_l;
    z = u+v;
    w = v-(z-u);
    t = z*z;
    t1 = z - t*(P1+t*(P2+t*(P3+t*(P4+t*P5))));
    r = (z*t1)/(t1-two)-(w+z*w);
    z = one-(r-z);
    __HI(z) += (n<<20);
    return s*z;
}
```

Clearly, 50+ years of research have gone into this, so it's probably very hard to do any better. (One has to appreciate that there are 0 loops, only 2 divisions, and only 6 if statements in the whole algorithm!) The reason such machinery is needed is, again, the behavior at x = 0, where all derivatives diverge, which makes it extremely hard to keep the error under control: I once had a spline representation with 18 knots that was good down to x = 1e-4, with absolute and relative errors < 5e-4 everywhere, but going to x = 1e-5 ruined everything again.

So, unless the requirement to go arbitrarily close to zero is relaxed, I recommend using the adapted version of e_pow.c given above.
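To reproduce a comparison like the "at most 2 bits" test above, a distance in units in the last place (ULPs) is convenient. A minimal sketch, assuming `std::pow` as the reference (its own error is small but implementation-dependent); the helper names `ulp_distance` and `max_ulp_error` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>
#include <functional>

// Number of representable doubles between a and b. For finite, non-negative
// doubles (not -0.0) the IEEE 754 bit patterns are ordered like the values,
// so the difference of the bit patterns is the distance in ULPs.
std::uint64_t ulp_distance(double a, double b) {
    assert(std::isfinite(a) && std::isfinite(b) && a >= 0.0 && b >= 0.0);
    std::uint64_t ia, ib;
    std::memcpy(&ia, &a, sizeof a);   // well-defined type punning
    std::memcpy(&ib, &b, sizeof b);
    return ia > ib ? ia - ib : ib - ia;
}

// Largest ULP distance between f(x) and std::pow(x, y) on n+1 equally
// spaced points in [lo, hi].
std::uint64_t max_ulp_error(const std::function<double(double)>& f, double y,
                            double lo, double hi, int n) {
    assert(n >= 1);
    std::uint64_t worst = 0;
    for (int i = 0; i <= n; ++i) {
        const double x = lo + (hi - lo) * i / n;
        const std::uint64_t d = ulp_distance(f(x), std::pow(x, y));
        if (d > worst) worst = d;
    }
    return worst;
}
```

For example, `max_ulp_error(pow0p19029, 0.19029, 0.0, 1.0, 1000000)`, with `pow0p19029` from the C code above declared as `extern "C" double pow0p19029(double);` when it is compiled as C.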

Edit 4

Now that we know that the domain 0.3 <= x <= 1 is sufficient, and that we have very low accuracy requirements, Edit 3 is clearly overkill. As @MvG has demonstrated, the function is so well behaved that a polynomial of degree 7 is sufficient to satisfy the accuracy requirements, which can be considered a single spline segment. @MvG's solution minimizes the integral error, which already looks very good.

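The question is how much better we can still do. It would be interesting to find the polynomial of a given degree that minimizes the maximum error in the interval of interest. The answer is the minimax polynomial, which can be found using the Remez algorithm, implemented in the Boost library. I like @MvG's idea to clamp the value at x = 1 to 1, which I will do as well. minimax.cpp below approximates 1 - (1 - t)^0.19029 in the shifted variable t = 1 - x, which maps the domain 0.3 <= x <= 1 onto 0 <= t <= 0.7 and turns the clamp into the condition that the polynomial passes through the origin (pin = true). Here is minimax.cpp: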

```cpp
#include <iomanip>
#include <iostream>
#include <memory>

#define TARG_PREC 64              // target precision (bits)
#define WORK_PREC (TARG_PREC*2)   // working precision, generously oversized

#include <boost/multiprecision/cpp_dec_float.hpp>
#include <boost/math/tools/remez.hpp>

typedef boost::multiprecision::number<boost::multiprecision::cpp_dec_float<WORK_PREC> > dtype;

std::unique_ptr<boost::math::tools::remez_minimax<dtype> > p_remez;

// Function to approximate on [0, 0.7]: 1 - (1 - x)^0.19029
dtype f(const dtype& x) {
    static const dtype one(1), y(0.19029);
    return one - boost::multiprecision::pow(dtype(one - x), y);
}

void out(const char *descr, const dtype& x, const char *sep="") {
    std::cout << descr << x.convert_to<double>() << sep << std::endl;
}

int main() {
    dtype a(0), b(0.7);                            // range to optimise over
    bool rel_error(false), pin(true);              // absolute error; pass through the origin
    int orderN(7), orderD(0), skew(0), brake(50);  // degree-7 polynomial, no denominator
    int prec = 2 + (TARG_PREC * 3010LL)/10000;     // decimal digits for the output
    std::cout << std::scientific << std::setprecision(prec);
    p_remez.reset(new boost::math::tools::remez_minimax<dtype>(
        &f, orderN, orderD, a, b, pin, rel_error, skew, WORK_PREC));
    out("Max error in interpolated form: ", p_remez->max_error());
    p_remez->set_brake(brake);
    unsigned i, count(50);
    for (i = 0; i < count; ++i) {
        std::cout << "Stepping..." << std::endl;
        dtype r = p_remez->iterate();
        out("Maximum Deviation Found: ", p_remez->max_error());
        out("Expected Error Term: ", p_remez->error_term());
        out("Maximum Relative Change in Control Points: ", r);
    }
    // Print the coefficients, highest power first.
    boost::math::tools::polynomial<dtype> n = p_remez->numerator();
    for (i = n.size(); i--; ) {
        out("", n[i], ",");
    }
}
```

Since all parts of Boost that we use are header-only, simply build with:

```
c++ -O3 -I<path/to/boost/headers> minimax.cpp -o minimax
```

Finally, we get the coefficients, listed from the x^7 term down to the constant term, which after multiplication by 44330 are:

24538.3409, -42811.1497, 34300.7501, -11284.1276, 4564.5847, 3186.7541, 8442.5236, 0.
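To use these coefficients, evaluate the polynomial in the shifted variable t = 1 - p/p0 with Horner's rule. A minimal sketch; the function names are hypothetical, the domain check mirrors the 0.3 <= p/p0 <= 1 assumption, and `scaled_pow_exact` exists only for comparison.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Coefficients from minimax.cpp, already multiplied by 44330, highest power
// first. They approximate 44330*(1 - (1 - t)^0.19029) for 0 <= t <= 0.7.
static const double kCoef[] = {24538.3409, -42811.1497, 34300.7501, -11284.1276,
                               4564.5847,  3186.7541,   8442.5236,  0.0};

// Polynomial approximation of 44330*(1 - r^0.19029) for 0.3 <= r <= 1,
// evaluated in the shifted variable t = 1 - r with Horner's rule.
double scaled_pow_approx(double r) {
    assert(r >= 0.3 && r <= 1.0);
    const double t = 1.0 - r;
    double acc = 0.0;
    for (std::size_t i = 0; i < sizeof kCoef / sizeof kCoef[0]; ++i)
        acc = acc * t + kCoef[i];
    return acc;
}

// Reference value computed with std::pow.
double scaled_pow_exact(double r) {
    return 44330.0 * (1.0 - std::pow(r, 0.19029));
}
```

Because the constant term is 0, `scaled_pow_approx(1.0)` returns exactly 0, which is the clamp at p/p0 = 1.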

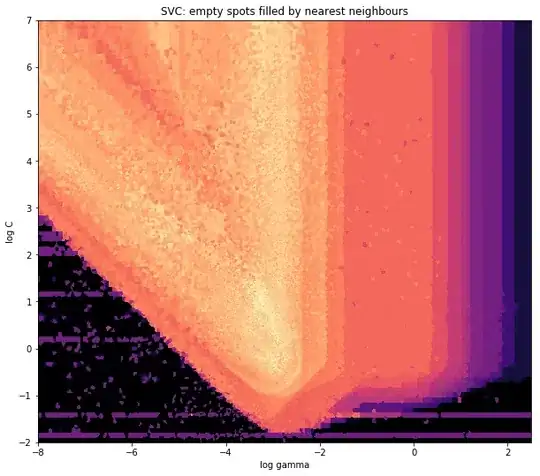

The following error plot demonstrates that this is really the best possible degree-7 polynomial approximation, since all extrema are of equal magnitude (0.06659):
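Chebyshev's equioscillation theorem says that the error of the best degree-n polynomial approximation (in the maximum norm) reaches its maximum magnitude, with alternating signs, at least n+2 times. A minimal sketch for checking this numerically on a sampled grid, with hypothetical names `error_extrema` and `alternates`. It always reports both endpoints, so for the pinned fit above the zero error at the pinned point simply shows up as an extra entry.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

struct Extremum {
    double x;    // location
    double err;  // signed error f(x) - p(x)
};

// Sample e(x) = f(x) - p(x) at n+1 equally spaced points in [a, b] and return
// the two endpoints plus every interior sample that is a local max or min.
std::vector<Extremum> error_extrema(const std::function<double(double)>& f,
                                    const std::function<double(double)>& p,
                                    double a, double b, std::size_t n) {
    assert(n >= 2 && a < b);
    std::vector<double> xs(n + 1), es(n + 1);
    for (std::size_t i = 0; i <= n; ++i) {
        xs[i] = a + (b - a) * static_cast<double>(i) / static_cast<double>(n);
        es[i] = f(xs[i]) - p(xs[i]);
    }
    std::vector<Extremum> out;
    out.push_back({xs[0], es[0]});
    for (std::size_t i = 1; i < n; ++i) {
        const bool is_max = es[i] > es[i - 1] && es[i] >= es[i + 1];
        const bool is_min = es[i] < es[i - 1] && es[i] <= es[i + 1];
        if (is_max || is_min) out.push_back({xs[i], es[i]});
    }
    out.push_back({xs[n], es[n]});
    return out;
}

// True if consecutive extrema have opposite signs (equioscillation pattern).
bool alternates(const std::vector<Extremum>& ex) {
    for (std::size_t i = 1; i < ex.size(); ++i)
        if ((ex[i].err > 0.0) == (ex[i - 1].err > 0.0)) return false;
    return true;
}
```

As a sanity check, the best degree-1 approximation of x^2 on [-1, 1] is the constant 1/2, whose error x^2 - 1/2 equioscillates at x = -1, 0, 1 (n + 2 = 3 points).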

Should the requirements ever change (while still keeping well away from 0!), the C++ program above can be simply adapted to spit out the new optimal polynomial approximation.