gprof is a venerable and ground-breaking tool.

But you're finding out that it's very limited.

It's based on sampling the program counter, plus counting calls between functions, both of which have only a tenuous connection to what costs time.

Finding out what costs time in your program is actually quite simple.

The method I and others use is this.

The point is, as the program runs, each thread has a call stack, consisting of the current program counter plus a return address for every function call that is currently in progress on that thread.

If you can take an X-Ray snapshot of the call stack at a random point in time, and examine all its levels in the context of a debugger, you can tell exactly what it was trying to do, and why, at that point in time.
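To make the idea of a stack snapshot concrete, here is a minimal in-process sketch in Python. It is not the debugger-based procedure described above, just an illustration of what one sample contains. It assumes CPython (`sys._current_frames()` is a CPython-specific call), and the names `needless_work`, `program`, and `run_demo` are hypothetical stand-ins.

```python
import random
import sys
import threading
import time
import traceback


def sample_stacks(target_thread_id, n_samples, max_gap, out):
    """Grab n_samples stack snapshots of one thread at random intervals."""
    for _ in range(n_samples):
        time.sleep(random.uniform(0.0, max_gap))
        frame = sys._current_frames().get(target_thread_id)
        if frame is None:  # the target thread has already finished
            break
        # Outermost call first, innermost last, like a debugger backtrace.
        out.append([f"{fs.name}:{fs.lineno}"
                    for fs in traceback.extract_stack(frame)])


def needless_work():
    # Hypothetical hot spot: stands in for work you don't really need.
    return sum(i * i for i in range(20000))


def program(duration):
    # Hypothetical program under study: runs for `duration` seconds.
    end = time.monotonic() + duration
    while time.monotonic() < end:
        needless_work()


def run_demo(duration=0.5, n_samples=10):
    """Run `program` on this thread while a helper thread samples its stack."""
    samples = []
    sampler = threading.Thread(
        target=sample_stacks,
        args=(threading.get_ident(), n_samples,
              duration / (2 * n_samples), samples),
    )
    sampler.start()
    program(duration)
    sampler.join()
    return samples


if __name__ == "__main__":
    for stack in run_demo():
        print(" > ".join(stack))
```

Each printed line is one sample. Reading it from left to right tells you the chain of calls that explains why the innermost line was executing at that moment.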

If it is spending 30% of its time doing something you never would have guessed, but that you don't really need, you will spot it on 3 out of 10 stack samples, more or less, and that's good enough to find it.

You won't know precisely what the percent is, but you will know precisely what the problem is.

The time that any instruction costs (the time you'd save if you got rid of it) is just the percent of time it is on the stack, whether it is a non-call instruction, or a call instruction.

If it costs enough time to be worth fixing, it will show up in a moderate number of samples.
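As a rough check on that claim, the chance of catching a problem can be computed from the binomial distribution. This sketch assumes the samples are independent and uniformly random in time, and it treats "seen on at least two samples" as the threshold for noticing something, which is an assumption made here for illustration. The function name `prob_seen_at_least` is hypothetical.

```python
from math import comb


def prob_seen_at_least(k, n, cost):
    """Probability that something costing `cost` (a fraction of run time)
    is on the stack in at least k of n independent random-time samples."""
    return sum(comb(n, i) * cost**i * (1 - cost) ** (n - i)
               for i in range(k, n + 1))


for cost in (0.1, 0.3, 0.5):
    p = prob_seen_at_least(2, 10, cost)
    print(f"cost {cost:.0%}: on 2+ of 10 samples with probability {p:.2f}")
```

Under these assumptions, a 30% problem shows up on two or more of ten samples about 85% of the time, and the bigger the cost, the harder it is to miss.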

ADDED: Not to belabor the point, but there's always somebody who says "That's too few samples, it will find the wrong stuff!"

Well, OK. Suppose you take ten random-time samples of the stack, and you see something you could get rid of on three of them.

How much does it cost?

Well you don't know for sure. It has a probability distribution that looks exactly like this:

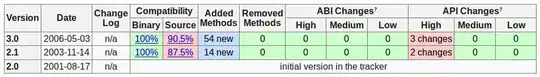

You can see that the most likely cost is 30% (no surprise), for a speedup of 10/7 = 1.4x, but it will be more or less than that.

How much more or less?

Well, the clear space between the two shaded regions holds 95% of the probability.

In other words, yes, there is a chance that the cost is less than 10%, but that chance is only about 2.5%.

If the cost is 10%, the speedup is 10/9 = 1.1x.

On the other hand, there is an equal probability that the cost is higher than 60%, for a speedup of 10/4 = 2.5x.

So the estimated speedup is 1.4x. It could be as low as 1.1x, but don't throw away the equal chance that it could be as high as 2.5x.
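If you want to reproduce numbers like these, one way is to assume a uniform prior on the cost, so that 3 hits in 10 samples gives a Beta(4, 8) distribution. That prior is an assumption of this sketch, and the plotted curve may have been made slightly differently. The helper names `beta_cdf` and `speedup` are hypothetical.

```python
from math import comb


def beta_cdf(x, a, b):
    """CDF of Beta(a, b) at x, for positive integer a and b.

    Uses the identity I_x(a, b) = P(Binomial(a + b - 1, x) >= a).
    """
    n = a + b - 1
    return sum(comb(n, j) * x**j * (1 - x) ** (n - j)
               for j in range(a, n + 1))


def speedup(cost):
    """Speedup factor from removing something that costs `cost` of run time."""
    return 1.0 / (1.0 - cost)


hits, samples = 3, 10
a, b = hits + 1, samples - hits + 1  # Beta(4, 8) under the assumed uniform prior

low_tail = beta_cdf(0.10, a, b)          # chance the cost is below 10%
high_tail = 1.0 - beta_cdf(0.60, a, b)   # chance the cost is above 60%

print(f"P(cost < 10%) = {low_tail:.3f}, speedup there = {speedup(0.10):.2f}x")
print(f"P(cost > 60%) = {high_tail:.3f}, speedup there = {speedup(0.60):.2f}x")
print(f"most likely cost 30%, speedup = {speedup(0.30):.2f}x")
```

With this prior, the two tails come out at roughly 2% and 3%, close to the 2.5% on each side quoted above.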

Of course, if you take 20 samples instead of 10, the curve will be narrower.
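To put a rough number on "narrower", here is a small sketch that compares the spread of the cost estimate for 3 hits in 10 samples against 6 hits in 20. It again assumes a uniform prior, so the distribution is Beta(hits + 1, misses + 1), and uses the standard formula for the standard deviation of a Beta distribution. The function name `posterior_sd` is hypothetical.

```python
from math import sqrt


def posterior_sd(hits, samples):
    """Standard deviation of Beta(hits + 1, samples - hits + 1),
    the distribution of the cost under an assumed uniform prior."""
    a, b = hits + 1, samples - hits + 1
    return sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))


for hits, samples in ((3, 10), (6, 20)):
    sd = posterior_sd(hits, samples)
    print(f"{hits} of {samples} samples: spread of about {sd:.3f}")
```

Doubling the samples shrinks the spread from about 0.13 to about 0.10, so the extra samples sharpen the estimate of the percent, but they are not needed to find the problem.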