I'm having problems counting the number of lines in a messy csv.bz2 file.

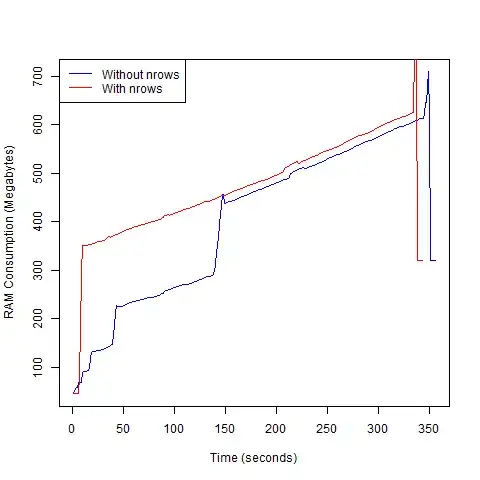

Since this is a huge file, I want to preallocate a data frame before reading the bzip2 file with the read.csv() function.
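For context, read.csv() always builds and returns a new data frame, so there is no argument for filling a frame you allocated yourself. The closest documented mechanism is its `nrows` argument: `?read.table` notes that supplying `nrows`, even as a mild over-estimate, helps memory usage, and `colClasses` avoids per-column type guessing. The sketch below assumes a small hypothetical file in place of `MyFile.csv.bz2` and a row estimate `n_est` (also hypothetical) obtained from a separate line count.

```r
# Hypothetical stand-in for "MyFile.csv.bz2": 1000 rows written through a bzip2 connection.
path <- tempfile(fileext = ".csv.bz2")
con <- bzfile(path, "w")
write.csv(data.frame(id = 1:1000, value = runif(1000)), con, row.names = FALSE)
close(con)

# Assumption: this number comes from a separate line count (lines minus the header).
n_est <- 1000L

# nrows: ?read.table says a (mildly over-estimated) row count helps memory usage.
# colClasses: declaring column types up front skips type guessing.
df <- read.csv(bzfile(path), nrows = n_est,
               colClasses = c("integer", "numeric"))
print(dim(df))
```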

As you can see in the following tests, my results vary widely, and none of them corresponds to the actual number of rows in the csv.bz2 file.

    > system.time(nrec1 <- as.numeric(shell('type "MyFile.csv" | find /c ","', intern=T)))
       user  system elapsed
       0.02    0.00   53.50
    > nrec1
    [1] 1060906
    > system.time(nrec2 <- as.numeric(shell('type "MyFile.csv.bz2" | find /c ","', intern=T)))
       user  system elapsed
       0.00    0.02   10.15
    > nrec2
    [1] 126715
    > system.time(nrec3 <- as.numeric(shell('type "MyFile.csv" | find /v /c ""', intern=T)))
       user  system elapsed
       0.00    0.02   53.10
    > nrec3
    [1] 1232705
    > system.time(nrec4 <- as.numeric(shell('type "MyFile.csv.bz2" | find /v /c ""', intern=T)))
       user  system elapsed
       0.00    0.01    4.96
    > nrec4
    [1] 533062

The most interesting result is nrec4: it runs in about 5 seconds (versus about 53 seconds on the uncompressed file) and returns roughly half of nrec1, but I'm not at all sure that naively multiplying it by 2 would give the right count.
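One way to check whether a ratio like this can be trusted is to compare newline bytes before and after compression. Running a line counter over the .bz2 file counts the bytes that happen to equal a newline inside the compressed stream, and those bytes depend on the compressed data rather than on the CSV rows. The sketch below uses base R's `memCompress()` on hypothetical in-memory CSV text (the 5000 rows and the helper `count_newlines` are illustrative assumptions); the compressed count it prints is incidental, so a ratio observed on one file need not hold for another.

```r
set.seed(1)
# Hypothetical CSV content: 5000 rows of "id,value", each ending in a newline.
rows <- sprintf("%d,%f", 1:5000, runif(5000))
raw_csv <- charToRaw(paste0(paste(rows, collapse = "\n"), "\n"))
raw_bz2 <- memCompress(raw_csv, type = "bzip2")

# Count 0x0A bytes, the byte that line-oriented tools treat as end of line.
count_newlines <- function(bytes) sum(bytes == as.raw(10L))

n_plain <- count_newlines(raw_csv)  # exactly one per CSV row
n_bz2   <- count_newlines(raw_bz2)  # incidental byte values in the compressed stream
cat("uncompressed:", n_plain, " compressed:", n_bz2, "\n")

# The rows are only recoverable after decompression, which restores the original bytes.
stopifnot(identical(memDecompress(raw_bz2, type = "bzip2"), raw_csv))
```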

I have tried several other methods, including fread() and hsTableReader(), but the former crashes and the latter is so slow that I won't consider it further.

My questions are:

- What reliable method can I use to count the number of rows in a csv.bz2 file?

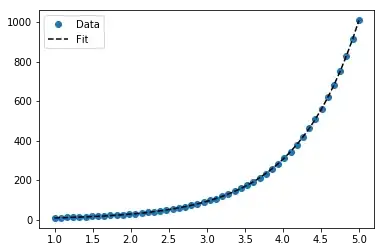

- Is it OK to use a formula to calculate the number of rows directly from a csv.bz2 file, without decompressing it?
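Because the bzip2 format does not record a line count, the data has to be decompressed, but it can be streamed rather than loaded whole. A minimal sketch, assuming only base R: a hypothetical helper `count_lines_bz2()` reads the file through `bzfile()` in raw chunks of `chunk_bytes` (an arbitrary 4 MiB default) with `readBin()` and counts newline bytes. It counts physical lines; in a messy CSV a quoted field may contain newlines, so the line count minus the header is an upper bound on the number of records rather than an exact count, which is still usable as an over-estimate for `nrows`.

```r
# Count lines in a bzip2 file by streaming decompression in raw chunks.
count_lines_bz2 <- function(path, chunk_bytes = 2^22) {
  con <- bzfile(path, open = "rb")
  on.exit(close(con))
  n <- 0
  last_byte <- NULL
  repeat {
    buf <- readBin(con, what = "raw", n = chunk_bytes)
    if (length(buf) == 0L) break                 # end of decompressed stream
    n <- n + sum(buf == as.raw(10L))             # newline bytes in this chunk
    last_byte <- buf[length(buf)]
  }
  # A final line without a trailing newline still counts as a line.
  if (!is.null(last_byte) && last_byte != as.raw(10L)) n <- n + 1
  n
}

# Usage on a small hypothetical file: a header plus 3 data lines.
path <- tempfile(fileext = ".csv.bz2")
con <- bzfile(path, "w")
writeLines(c("id,value", "1,a", "2,b", "3,c"), con)
close(con)
count_lines_bz2(path)  # 4
```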

Thanks in advance,

Diego