Does anyone know a good method to calculate the empirical/sample covariogram, if possible in Python?

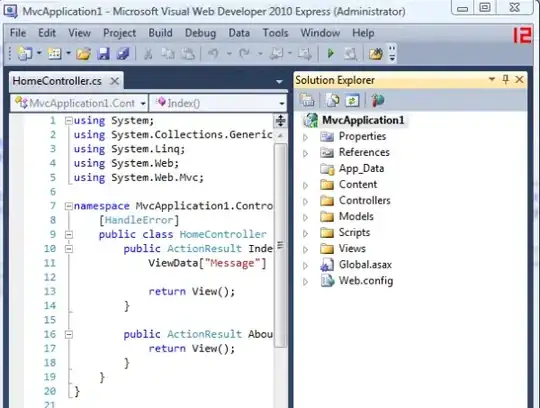

This is a screenshot of a book which contains a good definition of covariagram:

If I understood it correctly, for a given lag/width h, I'm supposed to get all the pair of points that are separated by h (or less than h), multiply its values and for each of these points, calculate its mean, which in this case, are defined as m(x_i). However, according to the definition of m(x_{i}), if I want to compute m(x1), I need to obtain the average of the values located within distance h from x1. This looks like a very intensive computation.

First of all, am I understanding this correctly? If so, what is a good way to compute this assuming a two dimensional space? I tried to code this in Python (using numpy and pandas), but it takes a couple of seconds and I'm not even sure it is correct, that is why I will refrain from posting the code here. Here is another attempt of a very naive implementation:

from scipy.spatial.distance import pdist, squareform

distances = squareform(pdist(np.array(coordinates))) # coordinates is a nx2 array

z = np.array(z) # z are the values

cutoff = np.max(distances)/3.0 # somewhat arbitrary cutoff

width = cutoff/15.0

widths = np.arange(0, cutoff + width, width)

Z = []

Cov = []

for w in np.arange(len(widths)-1): # for each width

# for each pairwise distance

for i in np.arange(distances.shape[0]):

for j in np.arange(distances.shape[1]):

if distances[i, j] <= widths[w+1] and distances[i, j] > widths[w]:

m1 = []

m2 = []

# when a distance is within a given width, calculate the means of

# the points involved

for x in np.arange(distances.shape[1]):

if distances[i,x] <= widths[w+1] and distances[i, x] > widths[w]:

m1.append(z[x])

for y in np.arange(distances.shape[1]):

if distances[j,y] <= widths[w+1] and distances[j, y] > widths[w]:

m2.append(z[y])

mean_m1 = np.array(m1).mean()

mean_m2 = np.array(m2).mean()

Z.append(z[i]*z[j] - mean_m1*mean_m2)

Z_mean = np.array(Z).mean() # calculate covariogram for width w

Cov.append(Z_mean) # collect covariances for all widths

However, now I have confirmed that there is an error in my code. I know that because I used the variogram to calculate the covariogram (covariogram(h) = covariogram(0) - variogram(h)) and I get a different plot:

And it is supposed to look like this:

Finally, if you know a Python/R/MATLAB library to calculate empirical covariograms, let me know. At least, that way I can verify what I did.