I have created a view controller in which half of the view is a camera preview and the other half holds buttons and other controls.

The problem is that when I capture an image, the result is much larger than what the preview shows. I want to capture only what is visible in the camera half of the view.

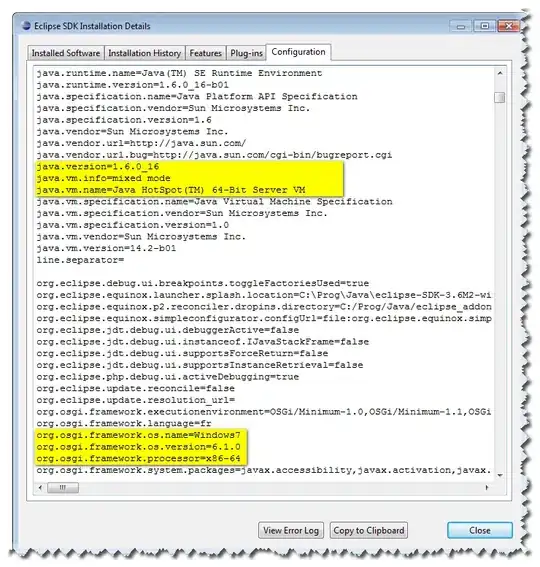

So it looks something like this:

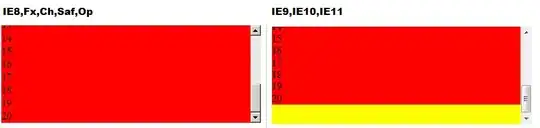

This is what I see in the view:

And this is the image I get after the capture:

Here is the code behind this:

```objc
- (void)viewDidAppear:(BOOL)animated
{
    [super viewDidAppear:animated];

    AVCaptureSession *session = [[AVCaptureSession alloc] init];
    session.sessionPreset = AVCaptureSessionPresetMedium;

    CALayer *viewLayer = self.vImagePreview.view.layer;
    NSLog(@"viewLayer = %@", viewLayer);

    // Preview layer sized to the preview view; aspect fill clips whatever overflows its bounds
    AVCaptureVideoPreviewLayer *captureVideoPreviewLayer = [[AVCaptureVideoPreviewLayer alloc] initWithSession:session];
    [captureVideoPreviewLayer setVideoGravity:AVLayerVideoGravityResizeAspectFill];
    captureVideoPreviewLayer.frame = self.vImagePreview.view.bounds;
    [self.vImagePreview.view.layer addSublayer:captureVideoPreviewLayer];

    NSLog(@"Rect of vImagePreview.view: %@", NSStringFromCGRect(self.vImagePreview.view.frame));

    AVCaptureDevice *device = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
    NSError *error = nil;
    AVCaptureDeviceInput *input = [AVCaptureDeviceInput deviceInputWithDevice:device error:&error];
    if (!input) {
        NSLog(@"ERROR: trying to open camera: %@", error);
        return; // don't pass nil to -addInput:
    }
    [session addInput:input];

    // Still image output that delivers JPEG data
    stillImageOutput = [[AVCaptureStillImageOutput alloc] init];
    NSDictionary *outputSettings = [[NSDictionary alloc] initWithObjectsAndKeys:AVVideoCodecJPEG, AVVideoCodecKey, nil];
    [stillImageOutput setOutputSettings:outputSettings];
    [session addOutput:stillImageOutput];

    [session startRunning];
}

- (void)viewDidLoad
{
    [super viewDidLoad];

    // Load camera
    vImagePreview = [[CameraViewController alloc] init];
    vImagePreview.view.frame = CGRectMake(10, 10, 300, 500);
    [self.view addSubview:vImagePreview.view];

    vImage = [[UIImageView alloc] init];
    vImage.frame = CGRectMake(10, 10, 300, 300);
    [self.view addSubview:vImage];
}
```
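With `AVLayerVideoGravityResizeAspectFill`, the preview layer scales the camera frame uniformly until it covers the layer, centers it, and clips the overflow, so the preview shows only a slice of the frame that the still image output delivers. Below is a minimal sketch of that geometry in plain C (so it is also valid Objective-C). It assumes the captured image is already upright and has the same aspect ratio as the preview feed. `Rect` and `aspect_fill_visible_rect` are hypothetical names, not AVFoundation API.

```c
/* Hypothetical plain-C stand-in for CGRect so the sketch builds without CoreGraphics. */
typedef struct {
    double x, y, width, height;
} Rect;

/*
 * Returns the part of an upright image (imageW x imageH pixels) that an
 * aspect-fill layer of size layerW x layerH actually displays.
 * Aspect fill scales the image uniformly until it covers the layer,
 * centers it, and clips the overflow on one axis.
 */
Rect aspect_fill_visible_rect(double imageW, double imageH,
                              double layerW, double layerH)
{
    Rect r;
    if (layerW * imageH > layerH * imageW) {
        /* Layer is relatively wider: full image width shown, top and bottom clipped. */
        r.width = imageW;
        r.height = layerH * imageW / layerW;
    } else {
        /* Layer is relatively taller (or same aspect): full height shown, sides clipped. */
        r.height = imageH;
        r.width = layerW * imageH / layerH;
    }
    /* The scaled image is centered, so the clipped overflow is split evenly. */
    r.x = (imageW - r.width) / 2.0;
    r.y = (imageH - r.height) / 2.0;
    return r;
}
```

For example, a hypothetical upright 360×480 frame shown in the 300×500 preview from `viewDidLoad` gives a visible rect of x = 36, y = 0, width 288, height 480: the leftmost and rightmost 36 pixels never appear on screen but are still in the captured image.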

And this is the handler that runs when I try to capture an image:

```objc
AVCaptureConnection *videoConnection = nil;
// Find the connection that carries video into the still image output
for (AVCaptureConnection *connection in stillImageOutput.connections) {
    for (AVCaptureInputPort *port in [connection inputPorts]) {
        if ([[port mediaType] isEqual:AVMediaTypeVideo]) {
            videoConnection = connection;
            break;
        }
    }
    if (videoConnection) { break; }
}

NSLog(@"about to request a capture from: %@", stillImageOutput);

[stillImageOutput captureStillImageAsynchronouslyFromConnection:videoConnection completionHandler:^(CMSampleBufferRef imageSampleBuffer, NSError *error) {
    // The sample buffer is NULL when the capture fails
    if (imageSampleBuffer == NULL) {
        NSLog(@"capture failed: %@", error);
        return;
    }

    CFDictionaryRef exifAttachments = CMGetAttachment(imageSampleBuffer, kCGImagePropertyExifDictionary, NULL);
    if (exifAttachments) {
        // Do something with the attachments.
        NSLog(@"attachments: %@", exifAttachments);
    } else {
        NSLog(@"no attachments");
    }

    NSData *imageData = [AVCaptureStillImageOutput jpegStillImageNSDataRepresentation:imageSampleBuffer];
    image = [[UIImage alloc] initWithData:imageData];

    UIAlertView *successAlert = [[UIAlertView alloc] init];
    successAlert.title = @"Review Picture";
    successAlert.message = @"";
    [successAlert addButtonWithTitle:@"Save"];
    [successAlert addButtonWithTitle:@"Retake"];
    [successAlert setDelegate:self];

    UIImageView *_imageView = [[UIImageView alloc] initWithFrame:CGRectMake(220, 10, 40, 40)];
    _imageView.image = image;
    [successAlert addSubview:_imageView];
    [successAlert show];

    UIImage *_image = [self imageByScalingAndCroppingForSize:CGSizeMake(640, 480) :_imageView.image];
    NSData *idata = [NSData dataWithData:UIImagePNGRepresentation(_image)];
    encodedImage = [self encodeBase64WithData:idata];
}];
```
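A crop rect computed in upright (displayed) coordinates cannot be handed straight to `CGImageCreateWithImageInRect`, because that function works on the `UIImage`'s underlying `CGImage`, which ignores `imageOrientation`. Portrait shots from the back camera typically arrive as a landscape bitmap tagged with an orientation; this sketch assumes `UIImageOrientationRight` (EXIF orientation 6, raw bitmap rotated 90° clockwise to display), which should be checked against `image.imageOrientation` before relying on it. `Rect` and `upright_rect_to_raw` are hypothetical names.

```c
/* Hypothetical plain-C stand-in for CGRect. */
typedef struct {
    double x, y, width, height;
} Rect;

/*
 * Maps a rect in upright image coordinates to raw bitmap coordinates,
 * ASSUMING the raw bitmap (rawW x rawH) must be rotated 90 degrees
 * clockwise to display upright, so the upright size is rawH x rawW.
 * Upright point (xu, yu) comes from raw point (xr, yr) = (yu, rawH - xu).
 */
Rect upright_rect_to_raw(Rect upright, double rawH)
{
    Rect raw;
    raw.x = upright.y;                              /* raw x follows upright y */
    raw.y = rawH - upright.x - upright.width;       /* raw y runs against upright x */
    raw.width = upright.height;                     /* width and height swap */
    raw.height = upright.width;
    return raw;
}
```

Under that assumption, the converted rect could be passed to `CGImageCreateWithImageInRect` on `image.CGImage`, and the result wrapped with `[UIImage imageWithCGImage:scale:orientation:]` using the original `imageOrientation` so the cropped image still displays upright.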

Why am I getting the whole camera frame, and how can I crop the captured image so that it contains only what is visible in the camera preview?
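Instead of doing the aspect-fill and rotation geometry by hand, `AVCaptureVideoPreviewLayer` has `metadataOutputRectOfInterestForRect:`, which converts a rect in layer coordinates (for example the layer's `bounds`) into a normalized rect with components in [0, 1]. As I understand it, that rect is expressed in the camera's native orientation, the same orientation as the raw `CGImage`, so it only needs scaling by `CGImageGetWidth` and `CGImageGetHeight` of `image.CGImage`. The scaling step is sketched below in plain C; `Rect` and `normalized_rect_to_pixels` are hypothetical names.

```c
/* Hypothetical plain-C stand-in for CGRect. */
typedef struct {
    double x, y, width, height;
} Rect;

/*
 * Scales a normalized rect (components in [0, 1]), such as the value
 * returned by -metadataOutputRectOfInterestForRect:, to pixel coordinates
 * of a bitmap that is bitmapW x bitmapH pixels.
 */
Rect normalized_rect_to_pixels(Rect n, double bitmapW, double bitmapH)
{
    Rect px = { n.x * bitmapW, n.y * bitmapH,
                n.width * bitmapW, n.height * bitmapH };
    return px;
}
```

For instance, a normalized rect of (0, 0.1, 1.0, 0.8) on a hypothetical 480×360 bitmap becomes (0, 36, 480, 288) in pixels, ready for `CGImageCreateWithImageInRect`.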