I have a really weird problem that I haven't been able to pin down for days. I am implementing simple per-vertex lighting; it works fine on Nvidia, but on AMD/ATI nothing that is shaded with lights gets rendered. I tracked the problem down to vertex attributes, specifically the color attribute.

This is my vertex shader:

    #version 140

    uniform mat4 modelViewProjectionMatrix;

    in vec3 in_Position;     // (x,y,z)
    in vec4 in_Color;        // (r,g,b,a)
    in vec2 in_TextureCoord; // (u,v)

    out vec2 v_TextureCoord;
    out vec4 v_Color;

    uniform bool en_ColorEnabled;
    uniform bool en_LightingEnabled;

    void main()
    {
        if (en_LightingEnabled == true){
            v_Color = vec4(0.0,1.0,0.0,1.0);
        }else{
            if (en_ColorEnabled == true){
                v_Color = in_Color;
            }else{
                v_Color = vec4(0.0,0.0,1.0,1.0);
            }
        }
        gl_Position = modelViewProjectionMatrix * vec4( in_Position.xyz, 1.0);
        v_TextureCoord = in_TextureCoord;
    }

This is the pixel shader:

    #version 140

    uniform sampler2D en_TexSampler;
    uniform bool en_TexturingEnabled;
    uniform bool en_ColorEnabled;
    uniform vec4 en_bound_color;

    in vec2 v_TextureCoord;
    in vec4 v_Color;

    out vec4 out_FragColor;

    void main()
    {
        vec4 TexColor;
        if (en_TexturingEnabled == true){
            TexColor = texture2D( en_TexSampler, v_TextureCoord.st ) * v_Color;
        }else if (en_ColorEnabled == true){
            TexColor = v_Color;
        }else{
            TexColor = en_bound_color;
        }
        out_FragColor = TexColor;
    }

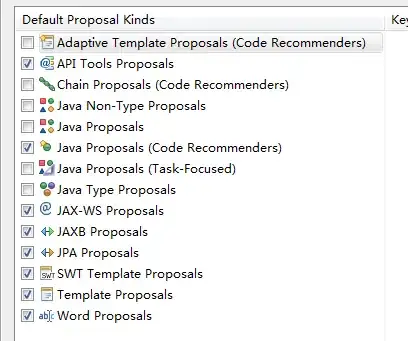

So this shader allows 3 states - when I use lights, when I don't use lights, but use a per-vertex color and when I use a single color for all vertices. I removed all the light calculation code, because I tracked down the problem to an attribute. This is the output from the shader:

As you can see, everything is blue: only the geometry that has neither shading nor color (en_LightingEnabled == false and en_ColorEnabled == false) gets rendered.
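To make the expected behavior explicit, here is a minimal C sketch that mirrors the vertex shader's branch logic on the CPU. The names `ColorPath`, `select_color_path` and `reads_in_color` are hypothetical and exist only for this illustration; they are not part of my renderer. It shows that in_Color is read on exactly one of the three paths, and never when lighting is enabled.

```c
#include <stdbool.h>

/* Hypothetical CPU-side mirror of the three states in the vertex shader. */
typedef enum {
    COLOR_LIT_GREEN,   /* en_LightingEnabled == true: constant green      */
    COLOR_PER_VERTEX,  /* lighting off, en_ColorEnabled == true: in_Color */
    COLOR_FLAT_BLUE    /* both off: constant blue                         */
} ColorPath;

/* Same branch order as main() in the vertex shader. */
ColorPath select_color_path(bool lighting_enabled, bool color_enabled)
{
    if (lighting_enabled) {
        return COLOR_LIT_GREEN;
    }
    if (color_enabled) {
        return COLOR_PER_VERTEX;
    }
    return COLOR_FLAT_BLUE;
}

/* in_Color is only read on the per-vertex color path. */
bool reads_in_color(bool lighting_enabled, bool color_enabled)
{
    return select_color_path(lighting_enabled, color_enabled) == COLOR_PER_VERTEX;
}
```

In the scene above en_ColorEnabled is always false, so by this logic in_Color should never be read at all.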

When I replace this code in vertex shader:

    if (en_ColorEnabled == true){
        v_Color = in_Color;
    }else{

with this:

    if (en_ColorEnabled == true){
        v_Color = vec4(1.0,0.0,0.0,1.0);
    }else{

I get the following:

So you can see that everything without shading is still rendered in blue, but now everything that uses lights (en_LightingEnabled == true) is also rendered, in green. That is how it should be. The problem is that the in_Color attribute should never interfere with lights, because the branch that reads it never runs when lights are enabled. You can also see that nothing in the image is red, which means en_ColorEnabled is always false in this scene. So in_Color is totally useless here, yet it causes bugs in places where it logically shouldn't. On Nvidia it works fine, and the green areas draw with or without in_Color.

Am I using something AMD doesn't support? Am I using something outside the GLSL spec? I know AMD is stricter about the GL spec than Nvidia, so it's possible I am doing something undefined. I made the code as simple as possible and still have the problem, so I can't see what it is.

Also, I noticed that it won't draw ANYTHING on the screen (not even the blue areas) if the in_Color vertex attribute is disabled (no glEnableVertexAttribArray), but why? I don't even use the color. Disabled attributes should return (0,0,0,1) according to the spec, right? I tried using glVertexAttrib4f to change that color, but still got black. The compiler and linker don't return any errors or warnings. The in_Color attribute is found just fine with glGetAttribLocation. But as I said, none of this should matter, because that branch is never executed in my code. Any ideas?

Tested on an Nvidia 660 Ti, where it works fine, and on a laptop with an ATI Mobility Radeon HD 5000, where I get this error. My only idea was that I might be exceeding the capabilities of the GPU (too many attributes and so on), but once I had reduced my code to this I stopped believing that. I use only 3 attributes here.

Also, some of my friends tested on much newer AMD cards and got the same problem.

UPDATE 1

Vertex attributes are bound like so:

    if (useColors){
        glUniform1i(uni_colorEnable,1);
        glEnableVertexAttribArray(att_color);
        glVertexAttribPointer(att_color, 4, GL_UNSIGNED_BYTE, GL_TRUE, STRIDE, OFFSET(offset));
    }else{
        glUniform1i(uni_colorEnable,0);
    }
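Since the color attribute is uploaded as GL_UNSIGNED_BYTE with the normalized flag set to GL_TRUE, each byte c should arrive in the shader as c / 255. A minimal sketch of that conversion in plain C, where `normalize_ubyte` and `normalize_rgba` are hypothetical helper names for illustration and not GL functions:

```c
#include <stdint.h>

/* Unsigned normalized conversion for an 8-bit component: f = c / (2^8 - 1). */
float normalize_ubyte(uint8_t c)
{
    return (float)c / 255.0f;
}

/* Convert one packed RGBA color (4 bytes) to the vec4 the shader would see. */
void normalize_rgba(const uint8_t rgba[4], float out[4])
{
    for (int i = 0; i < 4; ++i) {
        out[i] = normalize_ubyte(rgba[i]);
    }
}
```

So an opaque white vertex color stored as the bytes (255, 255, 255, 255) should show up as (1.0, 1.0, 1.0, 1.0) in in_Color.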

Also, I found an even easier way to show the weirdness. Take this vertex shader:

    void main()
    {
        v_Color = clamp(vec4(1.,1.,1.,1.) * vec4(0.5,0.5,0.25,1.),0.0,1.0) + in_Color;
        gl_Position = modelViewProjectionMatrix * vec4( in_Position.xyz, 1.0);
        v_TextureCoord = in_TextureCoord;
    }

So no branching. And I get this:

If I change that code by removing in_Color like so:

    void main()
    {
        v_Color = clamp(vec4(1.,1.,1.,1.) * vec4(0.5,0.5,0.25,1.),0.0,1.0);
        gl_Position = modelViewProjectionMatrix * vec4( in_Position.xyz, 1.0);
        v_TextureCoord = in_TextureCoord;
    }

I get this:

This last one is what I expect, but the previous one doesn't draw anything, even though the only difference is that in_Color is added (+) to the result. If the attribute is not bound and (0,0,0,0) or (0,0,0,1) is supplied instead, the addition shouldn't change anything visible. And yet it still fails.
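For reference, here is the arithmetic I expect from that expression, written as a small C sketch. `Vec4`, `clamp01` and `expected_v_color` are hypothetical names used only for this illustration; the function evaluates the same expression as the shader for a given in_Color.

```c
/* Hypothetical stand-in for a GLSL vec4. */
typedef struct { float r, g, b, a; } Vec4;

/* Equivalent of clamp(x, 0.0, 1.0) for a single component. */
static float clamp01(float x)
{
    if (x < 0.0f) return 0.0f;
    if (x > 1.0f) return 1.0f;
    return x;
}

/* clamp(vec4(1,1,1,1) * vec4(0.5,0.5,0.25,1), 0, 1) + in_Color */
Vec4 expected_v_color(Vec4 in_color)
{
    Vec4 out;
    out.r = clamp01(1.0f * 0.5f)  + in_color.r;
    out.g = clamp01(1.0f * 0.5f)  + in_color.g;
    out.b = clamp01(1.0f * 0.25f) + in_color.b;
    out.a = clamp01(1.0f * 1.0f)  + in_color.a;
    return out;
}
```

With in_Color = (0,0,0,0) this gives (0.5, 0.5, 0.25, 1.0), and with in_Color = (0,0,0,1) it gives (0.5, 0.5, 0.25, 2.0). Assuming a normalized fixed-point color buffer, the alpha of 2.0 would simply be clamped to 1.0 on output, so both cases should look the same on screen.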

Also, all logs are empty - compilation, linking and validation. No errors or warnings. Though I noticed that the shader debug info AMD/ATI gives is vastly inferior to what Nvidia gives.

UPDATE 2

The only fix I found was to enable the attribute array in all cases (so it basically sends junk to the GPU when color is not enabled). Since I use a uniform to check whether color is enabled, the shader simply ignores that data. So it's like this:

    if (useColors){
        glUniform1i(uni_colorEnable,1);
    }else{
        glUniform1i(uni_colorEnable,0);
    }
    glEnableVertexAttribArray(att_color);
    glVertexAttribPointer(att_color, 4, GL_UNSIGNED_BYTE, GL_TRUE, STRIDE, OFFSET(offset));

I have no idea why this is happening or why I need this workaround. I don't need it on Nvidia. So I guess this is slower and I am wasting bandwidth? But at least it works... Help on how to do this better would be useful though.