I’m working on an app that creates its own texture atlas. The elements on the atlas can vary in size but are placed in a grid pattern.

It’s all working fine, except that when I write a new element (the data from an NSImage) over a section of the atlas, the image is shifted a pixel to the right.

The code I’m using to write the pixels onto the atlas is:

```objc
- (void)writeToPlateWithImage:(NSImage *)anImage atCoord:(MyGridPoint)gridPos
{
    NSSize insetSize;   // ultimately this is the size of the image in the box
    NSSize boundingBox; // this is the size of the box that holds the image in the grid
    CGFloat multiplier = 1.0;

    NSSize plateSize = NSMakeSize(atlas.width, atlas.height); // size of entire atlas

    // make sure the column and row position is legal
    // (the lower-bound checks must test _gridPos, or they undo the upper-bound clamp)
    MyGridPoint _gridPos;
    _gridPos.column = gridPos.column >= m_numOfColumns ? m_numOfColumns - 1 : gridPos.column;
    _gridPos.row    = gridPos.row >= m_numOfRows ? m_numOfRows - 1 : gridPos.row;
    _gridPos.column = _gridPos.column < 0 ? 0 : _gridPos.column;
    _gridPos.row    = _gridPos.row < 0 ? 0 : _gridPos.row;

    insetSize = NSMakeSize(plateSize.width / m_numOfColumns, plateSize.height / m_numOfRows);
    boundingBox = insetSize;

    // …code here to calculate the size to make anImage so that it fits into the space allowed
    // on the atlas.
    // multiplier var will hold a value that sizes up or down the image…

    insetSize.width = anImage.size.width * multiplier;
    insetSize.height = anImage.size.height * multiplier;

    // provide a padding around the image so that when mipmaps are created the image doesn't 'bleed'
    // if it's the same size as the grid's boxes.
    // (100.0 rather than 100, in case insetPadding is an integer and would truncate to 0)
    insetSize.width -= ((insetSize.width * (insetPadding / 100.0)) * 2);
    insetSize.height -= ((insetSize.height * (insetPadding / 100.0)) * 2);

    // roundUp() is a handy function I found somewhere (I can't remember now)
    // that makes the first param a multiple of the second.
    // here we make sure the image lines are aligned as it's RGBA, so we make
    // it a multiple of 4
    insetSize.width = (CGFloat)roundUp((int)insetSize.width, 4);
    insetSize.height = (CGFloat)roundUp((int)insetSize.height, 4);

    NSImage *insetImage = [self resizeImage:[anImage copy] toSize:insetSize];
    // TIFFRepresentation returns TIFF file data (header included), not bare pixels
    NSData *insetData = [insetImage TIFFRepresentation];
    GLubyte *data = malloc(insetData.length);
    memcpy(data, [insetData bytes], insetData.length);
    insetImage = nil;
    insetData = nil;

    glEnable(GL_TEXTURE_2D);
    glBindTexture(GL_TEXTURE_2D, atlas.textureIndex);
    glPixelStorei(GL_UNPACK_ALIGNMENT, 1); // have also tried 2, 4, and 8

    GLint Xplace = (GLint)(boundingBox.width * _gridPos.column) + (GLint)((boundingBox.width - insetSize.width) / 2);
    GLint Yplace = (GLint)(boundingBox.height * _gridPos.row) + (GLint)((boundingBox.height - insetSize.height) / 2);

    glTexSubImage2D(GL_TEXTURE_2D, 0, Xplace, Yplace,
                    (GLsizei)insetSize.width, (GLsizei)insetSize.height,
                    GL_RGBA, GL_UNSIGNED_BYTE, data);
    glGenerateMipmap(GL_TEXTURE_2D);

    free(data);
    glBindTexture(GL_TEXTURE_2D, 0);

    // check the error flag instead of discarding it
    GLenum err = glGetError();
    if (err != GL_NO_ERROR) NSLog(@"GL error: 0x%04X", err);
}
```

The images are 8-bit RGBA (as reported by Photoshop). Here's a test image I've been using:

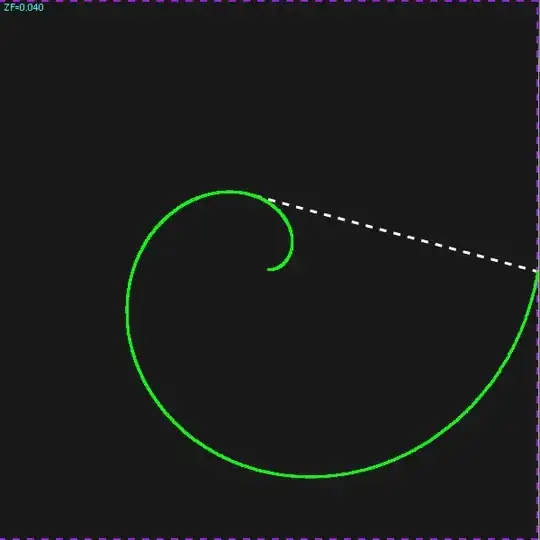

and here's a screen grab of the result in my app:

Am I unpacking the image incorrectly? I know the `resizeImage:` method works, because I've saved its result to disk and also tried bypassing it, so the problem must be somewhere in the GL code.

EDIT: To clarify, the section of the atlas being rendered is larger than the box diagram, so the shift is occurring within the area written by `glTexSubImage2D`.

EDIT 2: Sorted, finally, by offsetting the copied data that goes into the section of the atlas.

I don't fully understand why this works; perhaps it's a hack rather than a proper solution, but here it is:

```objc
// resize the image to fit into the section of the atlas
NSImage *insetImage = [self resizeImage:[anImage copy] toSize:NSMakeSize(insetSize.width, insetSize.height)];

// pointer to the raw data (keep a reference to the NSData so the bytes stay valid)
NSData *insetData = [insetImage TIFFRepresentation];
const uint8_t *insetDataPtr = [insetData bytes];

// for debugging, I placed the offset value next
int offset = 8; // it needed a 2 pixel (2 * 4 bytes for RGBA) offset

// copy the data with the offset into a temporary data buffer
// (uint8_t pointer, because arithmetic on a void * isn't standard C)
GLubyte *data = malloc(insetData.length - offset);
memcpy(data, insetDataPtr + offset, insetData.length - offset);

/*
 .
 . Calculate its position within the texture
 .
*/

// And finally overwrite the texture
glTexSubImage2D(GL_TEXTURE_2D, 0, Xplace, Yplace, (GLsizei)insetSize.width, (GLsizei)insetSize.height, GL_RGBA, GL_UNSIGNED_BYTE, data);
free(data);
```