I'm trying to convert a BGR image to YUV with `cvCvtColor` and then get a pointer to each component (Y, U and V).

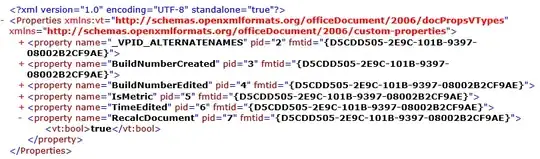

The source image (`IplImage1`) has the following parameters:

- depth = 8
- nChannels = 3
- colorModel = RGB
- channelSeq = BGR
- width = 1620
- height = 1220

This is how I convert the image and get the components after the conversion:

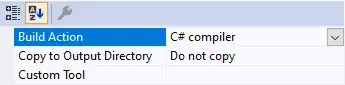

    IplImage* yuvImage = cvCreateImage(cvSize(1620, 1220), 8, 3);
    cvCvtColor(IplImage1, yuvImage, CV_BGR2YCrCb);

    yPtr = yuvImage->imageData;
    uPtr = yPtr + height*width;
    vPtr = uPtr + height*width/4;

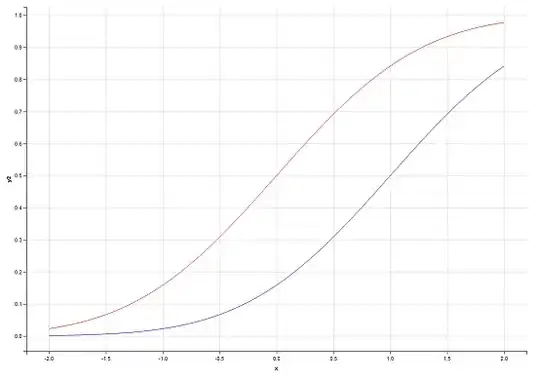

I have a method that converts YUV back to RGB and saves the result to a file. When I create the YUV components manually (I create a blue image), it works: the saved image really is blue. But when I get the YUV components using the code above, I get a black image. I suspect that I am getting the pointers to the YUV components incorrectly:

    yPtr = yuvImage->imageData;
    uPtr = yPtr + height*width;
    vPtr = uPtr + height*width/4;
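
To spell out the assumption behind those offsets: they treat the buffer as planar 4:2:0 (a full-size Y plane followed by quarter-size U and V planes), which is the layout my YUV-to-RGB method expects. I am not sure that is what `cvCvtColor` produces. Since `yuvImage` was created with 3 channels, the data may instead be interleaved, with one Y, Cr, Cb triple per pixel. Below is a minimal sketch in plain C (no OpenCV) of the two layouts as I understand them. The names `interleaved_offset`, `planar420_offsets` and `interleaved_ycrcb_to_planar420` are hypothetical helpers made up for illustration, and the Cb-to-U / Cr-to-V mapping and the top-left chroma pick (no averaging) are simplifying assumptions.

```c
#include <stddef.h>
#include <stdint.h>

/* Byte offset of channel c (0..2) of pixel (x, y) in an interleaved
 * 8-bit, 3-channel image whose rows start width_step bytes apart. */
size_t interleaved_offset(int x, int y, int c, int width_step)
{
    return (size_t)y * (size_t)width_step + (size_t)x * 3u + (size_t)c;
}

/* Start offsets of the planes in a planar 4:2:0 buffer (Y, then U, then V).
 * Assumes width and height are even. */
typedef struct {
    size_t y;      /* start of the Y plane */
    size_t u;      /* start of the U plane */
    size_t v;      /* start of the V plane */
    size_t total;  /* total buffer size in bytes */
} PlaneOffsets;

PlaneOffsets planar420_offsets(int width, int height)
{
    PlaneOffsets p;
    size_t luma = (size_t)width * (size_t)height;
    p.y = 0;
    p.u = luma;              /* same as yPtr + height*width   */
    p.v = luma + luma / 4;   /* same as uPtr + height*width/4 */
    p.total = luma + luma / 2;
    return p;
}

/* Repack an interleaved Y,Cr,Cb image (assumed channel order) into a planar
 * 4:2:0 buffer. Chroma is taken from the top-left pixel of each 2x2 block;
 * dst must hold planar420_offsets(width, height).total bytes. */
void interleaved_ycrcb_to_planar420(const uint8_t *src, int width_step,
                                    int width, int height, uint8_t *dst)
{
    PlaneOffsets p = planar420_offsets(width, height);
    uint8_t *y_plane = dst + p.y;
    uint8_t *u_plane = dst + p.u;
    uint8_t *v_plane = dst + p.v;

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const uint8_t *px = src + interleaved_offset(x, y, 0, width_step);
            y_plane[(size_t)y * (size_t)width + (size_t)x] = px[0];
            if (y % 2 == 0 && x % 2 == 0) {
                size_t ci = (size_t)(y / 2) * (size_t)(width / 2) + (size_t)(x / 2);
                v_plane[ci] = px[1];  /* Cr stored as V (assumption) */
                u_plane[ci] = px[2];  /* Cb stored as U (assumption) */
            }
        }
    }
}
```

If the interleaved guess is right, calling this with `src` set to `yuvImage->imageData` and `width_step` set to `yuvImage->widthStep` would give a buffer in which the `yPtr`/`uPtr`/`vPtr` offsets above point at the right planes.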

What could be the problem?