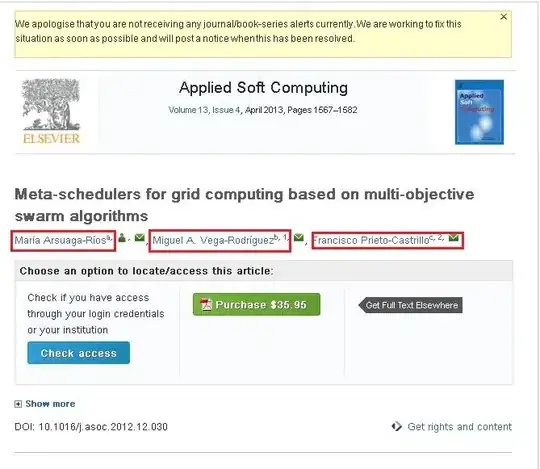

I am new to web crawling. I am using crawler4j to crawl websites and collect the information I need. My problem is that I am unable to crawl some of the content on the following page: http://www.sciencedirect.com/science/article/pii/S1568494612005741. I want to extract the information shown in the attached screenshot.

The screenshot shows three names (highlighted in red boxes). Clicking one of these links opens a popup that contains the full information about that author. I want to crawl the information in that popup.

I am using the following code to fetch the content:

    import org.apache.http.HttpStatus;

    import edu.uci.ics.crawler4j.crawler.CrawlConfig;
    import edu.uci.ics.crawler4j.crawler.Page;
    import edu.uci.ics.crawler4j.fetcher.PageFetchResult;
    import edu.uci.ics.crawler4j.fetcher.PageFetcher;
    import edu.uci.ics.crawler4j.parser.HtmlParseData;
    import edu.uci.ics.crawler4j.parser.ParseData;
    import edu.uci.ics.crawler4j.parser.Parser;
    import edu.uci.ics.crawler4j.url.WebURL;

    public class WebContentDownloader {

        private Parser parser;
        private PageFetcher pageFetcher;

        public WebContentDownloader() {
            CrawlConfig config = new CrawlConfig();
            parser = new Parser(config);
            pageFetcher = new PageFetcher(config);
        }

        // Fetches and parses a single URL; returns null if either step fails.
        private Page download(String url) {
            WebURL curURL = new WebURL();
            curURL.setURL(url);
            PageFetchResult fetchResult = null;
            try {
                fetchResult = pageFetcher.fetchHeader(curURL);
                if (fetchResult.getStatusCode() == HttpStatus.SC_OK) {
                    try {
                        Page page = new Page(curURL);
                        fetchResult.fetchContent(page);
                        if (parser.parse(page, curURL.getURL())) {
                            return page;
                        }
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                }
            } finally {
                // Release the connection even when the body was never read.
                if (fetchResult != null) {
                    fetchResult.discardContentIfNotConsumed();
                }
            }
            return null;
        }

        // Returns the raw HTML of the page, or null if it could not be fetched or parsed as HTML.
        private String processUrl(String url) {
            System.out.println("Processing: " + url);
            Page page = download(url);
            if (page != null) {
                ParseData parseData = page.getParseData();
                if (parseData != null) {
                    if (parseData instanceof HtmlParseData) {
                        HtmlParseData htmlParseData = (HtmlParseData) parseData;
                        return htmlParseData.getHtml();
                    }
                } else {
                    System.out.println("Couldn't parse the content of the page.");
                }
            } else {
                System.out.println("Couldn't fetch the content of the page.");
            }
            return null;
        }

        public String getHtmlContent(String argUrl) {
            return this.processUrl(argUrl);
        }
    }

I am able to fetch the page content from the link above, but it does not contain the information I marked in the red boxes. I think those links are loaded dynamically.
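One way to check this suspicion is to test whether the expected text occurs anywhere in the HTML string returned by `getHtmlContent`. The following is only a minimal sketch using the Java standard library: the class name `StaticHtmlCheck`, the method `missingFrom`, and the sample HTML and strings are all hypothetical placeholders, not taken from the real page.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

public class StaticHtmlCheck {

    /**
     * Returns the expected strings that do NOT occur in the fetched HTML
     * (case-insensitive). A null HTML string is treated as empty.
     */
    public static List<String> missingFrom(String html, List<String> expected) {
        List<String> missing = new ArrayList<>();
        String haystack = (html == null) ? "" : html.toLowerCase(Locale.ROOT);
        for (String s : expected) {
            if (!haystack.contains(s.toLowerCase(Locale.ROOT))) {
                missing.add(s);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Placeholder HTML standing in for the string returned by getHtmlContent(url).
        String html = "<html><body><a href=\"#\" class=\"author\">A. Author</a></body></html>";

        // Hypothetical values: a name visible in the page and a detail shown only in the popup.
        List<String> expected = Arrays.asList("A. Author", "author@example.org");

        System.out.println("Missing from static HTML: " + missingFrom(html, expected));
    }
}
```

If the popup details are reported as missing while the names themselves are found, that would be consistent with the popup content being loaded by JavaScript after the initial page fetch, which a plain HTTP fetch does not execute.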

- How can I crawl this content from the page linked above?

- More generally, how can I crawl content from Ajax/JavaScript-based websites?

Can anyone please help me with this?

Thanks & Regards, Amar