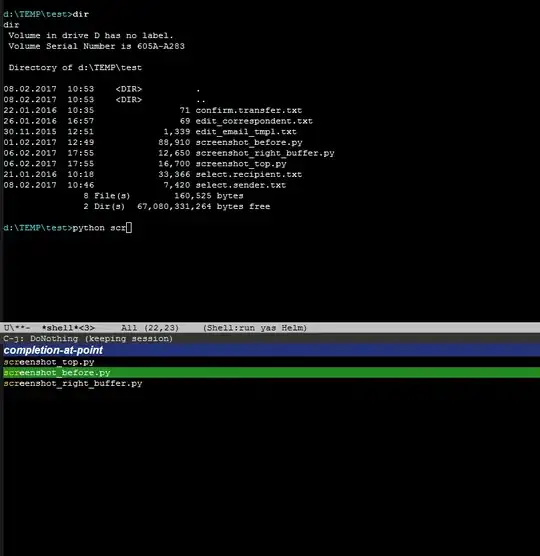

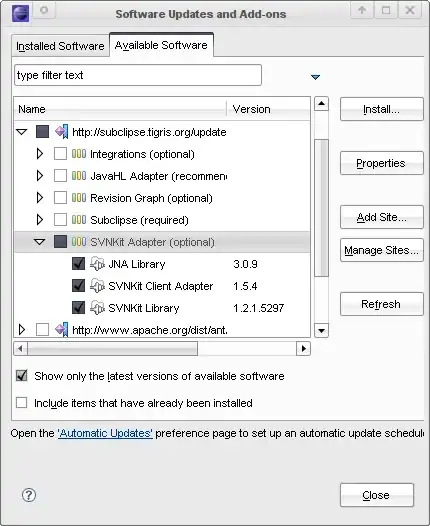

The difference is simply too small to see with the naked eye at this scale:

Using your HeapSort results (600ms for 1000000 entries), here is a O(n) function (green) and a O(n log n) function (red):

(from http://fooplot.com/plot/gnbb0vdgno )

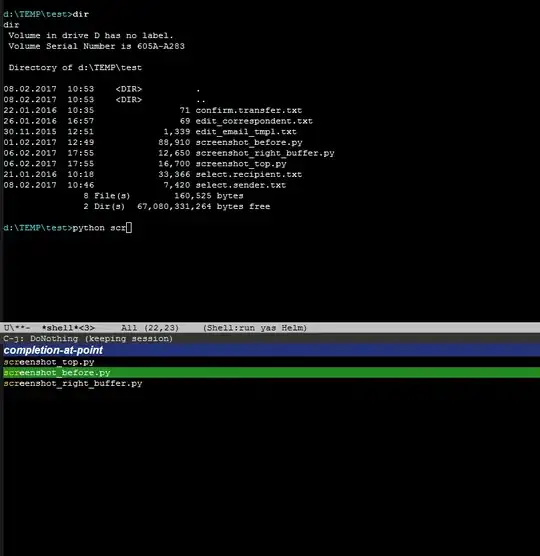

(from http://fooplot.com/plot/gnbb0vdgno )

The two functions in this picture are:

y = 600/1000000 * x greeny = 1/(10000 log(10)) * x*log(x) red

(Note that these functions have vastly different constant scaling factors, but of course these do not matter in Big-O notation.)

However just because they are hard to see in a diagram, does not mean they are impossible to distinguish.

As mentioned in the comments, your main options are bigger datasets, or slower comparison functions. Most sorting algorithms will allow you to specify a comparison function, which should not, under normal circumstances, change the O() time complexity. (beware of non-transitive comparison functions though)

If that is not possible, and you just want to thread the algorithms as black boxes, you could simply repeat the experiment and average the results until noise is low enough to distinguish between these two curves.

To get the appropriate "ideal" n log n curve for comparison with your averaged data, you need to solve the equation y = a*x * log(x); y=MAXIMUM_TIME; x=MAXIMUM_INPUT_LENGTH;, for example with Wolfram Alpha

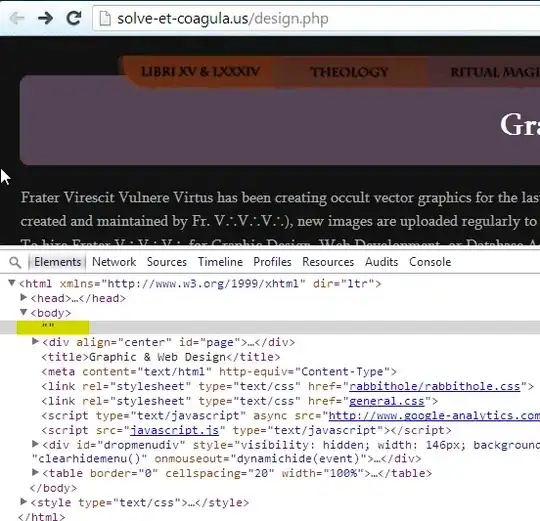

One important point here is that even though these curves look similar, this does not mean that the run-time of a hypothetical linear sorting algorithm would not be worthwhile for less than a million entries. If you managed to come up with a linear sorting algorithm with the same constant factor as the n log n algorithm, the curves would look like this:

(from

(from