I have long preferred to break expressions so that the "it's clearly incomplete" cue sits on the continuation line:

    var something = foo + bar
        + baz(mumble);

This is an attitude that comes from working in languages which need semicolons to terminate statements. The first line is already obviously incomplete because it has no semicolon, so it's better to make it clear to the reader that the second line is not complete either.

The alternative would be:

    var something = foo + bar +
        baz(mumble);

That's not as good for me. Now the only way to tell that baz(mumble); isn't standalone (indentation aside) is to scan to the end of the previous line. *(Which is presumably long, as you needed to break it in the first place.)*

But in bizarro JavaScript land, I started seeing people changing code from the first form to the second, and warning about "automatic semicolon insertion". It certainly causes some surprising behaviors. Not really wanting to delve into that tangent at the time, I put "learn what automatic semicolon insertion is and whether I should be doing that too" into my infinite task queue.

When I did look into it, I found myself semi-sure... but not certain... that there aren't dangers in the way I break lines. It seems the problems would arise if I had left off the + by accident and written:

    var something = foo + bar
        baz(mumble);

...then JavaScript inserts a semicolon after foo + bar for you, seemingly because both lines stand alone as complete expressions. I deduced perhaps those other JavaScript programmers thought biasing the "clearly intentionally incomplete" bit to the end of the line-to-be-continued was better, because it pinpointed the location where the semicolon wasn't.
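A minimal sketch of the two cases, to make the difference concrete. The values of foo, bar and mumble and the body of baz are hypothetical stand-ins, not taken from any real code. As far as I understand the rule, a semicolon is only inserted at the line break when the next token cannot legally continue the statement:

```javascript
// Hypothetical stand-ins for the names used above.
var foo = 1, bar = 2, mumble = 10;
var calls = 0;
function baz(n) { calls += 1; return n; }

// Operator leads the continuation line: "bar + baz(mumble)" is a valid
// continuation, so no semicolon is inserted at the line break.
var joined = foo + bar
    + baz(mumble);

// Operator forgotten: "bar baz" is not valid grammar, so a semicolon is
// inserted after "bar" and the call becomes a separate statement.
var split = foo + bar
baz(mumble);

console.log(joined); // 13
console.log(split);  // 3
console.log(calls);  // 2 (baz ran both times, but only once as part of a sum)
```

The second case fails silently: baz still runs, but its result is discarded.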

Yet if I have stated my premise correctly, I am styling my broken lines in a way that the ensuing lines are "obviously incomplete". If that weren't the case then I wouldn't consider my way an advantage in the first place.

Am I correct that my way is not a risk, given the way I describe using line continuations? Are there any "incomplete-seeming" expression pitfalls that are actually complete in surprising ways?

To offer an example of "complete in surprising ways", consider if + 1; could be interpreted as just positive one on a line by itself. Which it seems it can:

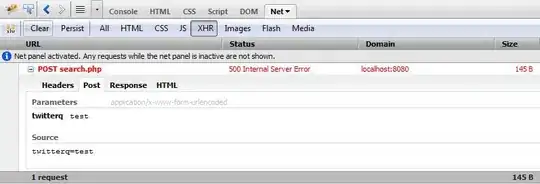

But JSFiddle gives back 6 for this:

    var x = 3 + 2
        + 1;

    alert(x)

Maybe that's just a quirk in the console, but it causes me to worry about my "it's okay so long as the second line doesn't stand alone as complete expression" interpretation.
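Here is a small sketch that reproduces both observations side by side. Using eval to imitate typing a line into the console is my own assumption about what the console does, and the variable names are made up for illustration:

```javascript
// Typed on its own, "+ 1;" is a complete statement: unary plus applied to 1.
// eval is used here only to mimic entering that text in a console.
var alone = eval("+ 1;");

// After a line with no semicolon, the same text continues the expression,
// because "2 + 1" is a valid continuation and no semicolon is inserted.
var x = 3 + 2
    + 1;

// With an explicit semicolon, the second line is a separate (useless) statement.
var y = 3 + 2;
    + 1;

console.log(alone); // 1
console.log(x);     // 6
console.log(y);     // 5
```

So the two results don't seem to contradict each other: whether + 1; stands alone depends on whether the preceding line has already been terminated.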