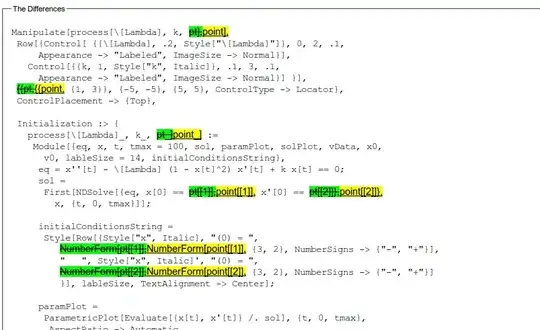

I am trying to extract SIFT keypoints. It works fine for a sample image I downloaded (height 400 px, width 247 px, horizontal and vertical resolution 300 dpi). The image shows the extracted points.

Then I tried to apply the same code to an image that I took and edited myself (height 443 px, width 541 px, horizontal and vertical resolution 72 dpi).

To create the above image I rotated the original image, removed its background, and resized it in Photoshop. For that image, however, my code does not extract features the way it does for the first image.

See the result:

It extracts only a few points. I expect a result like the first case. When I run the program on my original image without any edits, it finds points as in the first case. Here is the simple code I used:

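    #include <opencv/cv.h>
    #include <opencv/highgui.h>
    #include <opencv2/nonfree/nonfree.hpp>

    using namespace cv;

    int main() {
        Mat src, descriptors, dest;
        vector<KeyPoint> keypoints;

        // Load the image and convert it to single-channel grayscale
        src = imread(". . .");
        cvtColor(src, src, CV_BGR2GRAY);

        // Arguments: image, mask, keypoints, descriptors, useProvidedKeypoints
        SIFT sift;
        sift(src, src, keypoints, descriptors, false);

        // Draw the detected keypoints and show the result
        drawKeypoints(src, keypoints, dest);
        imshow("Sift", dest);
        cvWaitKey(0);

        return 0;
    }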
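For context on the `sift(...)` call: in OpenCV 2.4 the second parameter of `SIFT::operator()` is a mask, and the code above passes `src` for it. The standalone sketch below (no OpenCV; `Point`, `GrayImage`, and `filterByMask` are hypothetical names made up for illustration) shows the general idea of mask filtering, where a keypoint survives only if the mask pixel under it is non-zero. Whether this has anything to do with the missing keypoints in the edited image is an assumption, not a confirmed diagnosis.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for illustration; these are not OpenCV types.
struct Point { int x; int y; };

struct GrayImage {
    int width;
    int height;
    std::vector<unsigned char> pixels; // row-major, one byte per pixel

    unsigned char at(int x, int y) const {
        return pixels[static_cast<std::size_t>(y) * width + x];
    }
};

// Keep only the points that lie inside the mask and sit on a non-zero pixel.
std::vector<Point> filterByMask(const std::vector<Point>& points,
                                const GrayImage& mask) {
    // The pixel buffer must match the declared dimensions.
    assert(mask.pixels.size() ==
           static_cast<std::size_t>(mask.width) * mask.height);

    std::vector<Point> kept;
    for (std::size_t i = 0; i < points.size(); ++i) {
        const Point& p = points[i];
        bool inside = p.x >= 0 && p.x < mask.width &&
                      p.y >= 0 && p.y < mask.height;
        if (inside && mask.at(p.x, p.y) != 0) {
            kept.push_back(p);
        }
    }
    return kept;
}
```

If the image itself is used as the mask, every candidate point that lands on a pixel of value 0 (for example, a pure black background) is dropped by this kind of filter. In OpenCV, an empty mask such as `Mat()` or `noArray()` as the second argument means no mask is applied.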

What am I doing wrong here? What do I need to do to get a result like the first case for my own image after resizing?

Thank you!