OK, I finally managed to do it without using `--privileged` mode.

I'm running Ubuntu Server 14.04 and using the latest CUDA (6.0.37 for Linux 13.04, 64-bit).

Preparation

Install the NVIDIA driver and CUDA on your host. (It can be a little tricky, so I suggest you follow this guide: https://askubuntu.com/questions/451672/installing-and-testing-cuda-in-ubuntu-14-04)

ATTENTION: it's really important that you keep the files you used for the host CUDA installation, because the Dockerfile below installs the same driver and CUDA versions from them.

Get the Docker Daemon to run using lxc

We need to run the Docker daemon with the lxc execution driver so that we can modify the container configuration and give the container access to the device.

One-time use:

```
sudo service docker stop
sudo docker -d -e lxc
```

Permanent configuration

Modify your Docker configuration file, located at /etc/default/docker.

Change the DOCKER_OPTS line by adding '-e lxc'.

Here is my line after the modification:

```
DOCKER_OPTS="--dns 8.8.8.8 --dns 8.8.4.4 -e lxc"
```

Then restart the daemon:

```
sudo service docker restart
```
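If you prefer to script the edit, here is a minimal Python sketch of the same change. It assumes the file has at most one uncommented `DOCKER_OPTS="..."` line like the one shown above; the function name `add_lxc_driver` is hypothetical, and it only rewrites the text, so you still have to write the result back (as root) and restart the daemon.

```python
import re

# Hypothetical helper: add "-e lxc" to the DOCKER_OPTS line of /etc/default/docker.
# Assumes at most one uncommented line of the form DOCKER_OPTS="...".
_OPTS_LINE = re.compile(r'^DOCKER_OPTS="(.*)"\s*$')


def _has_lxc(opts):
    # True if the option list already contains the pair "-e", "lxc".
    return any(a == "-e" and b == "lxc" for a, b in zip(opts, opts[1:]))


def add_lxc_driver(config_text):
    """Return config_text with '-e lxc' added to DOCKER_OPTS (safe to run twice)."""
    out, found = [], False
    for line in config_text.splitlines():
        m = _OPTS_LINE.match(line)
        if m:
            found = True
            opts = m.group(1).split()
            if not _has_lxc(opts):
                opts += ["-e", "lxc"]
            line = 'DOCKER_OPTS="%s"' % " ".join(opts)
        out.append(line)
    if not found:
        # No active DOCKER_OPTS line (e.g. only a commented-out default): add one.
        out.append('DOCKER_OPTS="-e lxc"')
    return "\n".join(out) + "\n"
```

For example, `add_lxc_driver('DOCKER_OPTS="--dns 8.8.8.8 --dns 8.8.4.4"\n')` produces exactly the modified line above, and running it again leaves the text unchanged.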

How to check whether the daemon actually uses the lxc driver?

```
docker info
```

The Execution Driver line should look like this:

```
Execution Driver: lxc-1.0.5
```
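The same check as a small Python sketch: it runs `docker info` and looks for that line. It assumes the `Execution Driver: ...` format shown above, Python 3.7+ (for `capture_output`), and that the current user may talk to the daemon (otherwise run it with sudo). `execution_driver` and `daemon_uses_lxc` are hypothetical names.

```python
import subprocess


def execution_driver(info_text):
    """Return the value of the 'Execution Driver:' line, or None if it is absent."""
    for line in info_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Execution Driver":
            return value.strip()
    return None


def daemon_uses_lxc():
    """Run `docker info` and report whether the execution driver is lxc."""
    result = subprocess.run(["docker", "info"], capture_output=True, text=True, check=True)
    driver = execution_driver(result.stdout)
    return driver is not None and driver.startswith("lxc")
```

Keeping the parsing in its own function means `execution_driver("Execution Driver: lxc-1.0.5")` can be checked without a running daemon; it returns `"lxc-1.0.5"`.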

Build your image with the NVIDIA driver and CUDA.

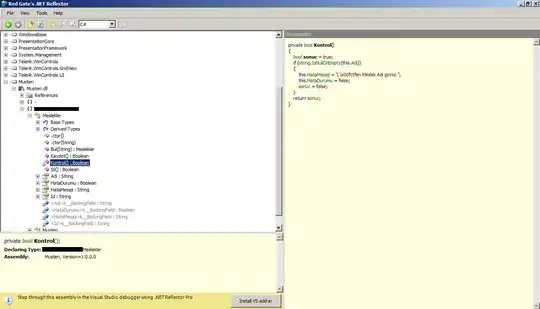

Here is a basic Dockerfile to build a CUDA-compatible image.

```
FROM ubuntu:14.04

MAINTAINER Regan <http://stackoverflow.com/questions/25185405/using-gpu-from-a-docker-container>

RUN apt-get update && apt-get install -y build-essential

RUN apt-get --purge remove -y 'nvidia*'

# Get the install files you used to install CUDA and the NVIDIA driver on your host.
# The source path is relative to the build context, so the files must live inside it.
ADD ./Downloads/nvidia_installers /tmp/nvidia

# Install the driver (user-space part only; the kernel module comes from the host).
RUN /tmp/nvidia/NVIDIA-Linux-x86_64-331.62.run -s -N --no-kernel-module

# For some reason the driver installer leaves temp files behind during a docker build
# (I don't know why), and the CUDA installer fails if they are still there, so delete them.
RUN rm -rf /tmp/selfgz7

# CUDA installer.
RUN /tmp/nvidia/cuda-linux64-rel-6.0.37-18176142.run -noprompt

# CUDA samples; comment this out if you don't want them.
RUN /tmp/nvidia/cuda-samples-linux-6.0.37-18176142.run -noprompt -cudaprefix=/usr/local/cuda-6.0

# Add the CUDA libraries to the library path (ENV persists; RUN export would not).
ENV LD_LIBRARY_PATH /usr/local/cuda/lib64

# Register the CUDA library directory with the dynamic linker.
RUN echo "/usr/local/cuda/lib64" > /etc/ld.so.conf.d/cuda.conf && ldconfig

# Delete the installer files.
RUN rm -rf /tmp/nvidia
```
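To check from inside a running container (started as described in the next section) that the `ld.so.conf.d` and `LD_LIBRARY_PATH` steps let programs find the CUDA runtime, this Python sketch loads `libcudart` with `ctypes` and calls `cudaGetDeviceCount` directly. It assumes a Python 3 interpreter is available in the container, which the Dockerfile above does not install; `cuda_device_count` is a hypothetical name. A `None` result means the library was not found or the call failed, for example because the container cannot access the `/dev/nvidia*` devices.

```python
import ctypes
import ctypes.util


def cuda_device_count():
    """Number of CUDA devices visible to the CUDA runtime, or None on failure."""
    # On Linux, find_library looks in the ldconfig cache, among other places.
    name = ctypes.util.find_library("cudart")
    if name is None:
        return None
    try:
        cudart = ctypes.CDLL(name)
    except OSError:
        return None
    count = ctypes.c_int(0)
    # cudaGetDeviceCount(int *count) returns cudaSuccess (0) on success.
    status = cudart.cudaGetDeviceCount(ctypes.byref(count))
    if status != 0:
        return None
    return count.value


if __name__ == "__main__":
    print(cuda_device_count())
```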

Run your image.

First you need to identify the major numbers associated with your NVIDIA devices.

The easiest way is to run the following command:

```
ls -la /dev | grep nvidia
```

If the result is empty, launching one of the CUDA samples on the host should create the device files.

The result should look like this:

As you can see, there are two numbers between the group and the date.

These are the major and minor numbers (in that order), which together identify a device.

We will just use the major numbers for convenience.

Why did we activate the lxc driver?

To be able to use the `--lxc-conf` option, which lets us allow our container to access those devices.

The option is as follows (I recommend using * for the minor number, because one rule then covers every device with that major number, which keeps the run command short):

```
--lxc-conf='lxc.cgroup.devices.allow = c [major number]:[minor number or *] rwm'
```

So if I want to launch a container (assuming your image is named cuda):

```
docker run -ti --lxc-conf='lxc.cgroup.devices.allow = c 195:* rwm' --lxc-conf='lxc.cgroup.devices.allow = c 243:* rwm' cuda
```
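The same command can be generated from a list of major numbers. This Python sketch (hypothetical function names) only builds and prints the argument list rather than running it; each rule grants `rwm` (read, write, mknod) on the given minor numbers, `*` by default, of one character-device major.

```python
import shlex


def lxc_device_rule(major, minor="*"):
    """lxc cgroup rule allowing read/write/mknod ("rwm") on a character device."""
    return "lxc.cgroup.devices.allow = c %s:%s rwm" % (major, minor)


def docker_run_args(image, majors, minor="*"):
    """argv for `docker run -ti` with one --lxc-conf option per major number."""
    args = ["docker", "run", "-ti"]
    for major in majors:
        # No shell is involved here, so the rule needs no extra quoting.
        args.append("--lxc-conf=" + lxc_device_rule(major, minor))
    args.append(image)
    return args


if __name__ == "__main__":
    # Major numbers from the example command above; replace them with your own.
    cmd = docker_run_args("cuda", [195, 243])
    print(" ".join(shlex.quote(a) for a in cmd))
```

Printing with `shlex.quote` reproduces the command above; passing the list to `subprocess.call` instead would start the container without going through a shell.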
