I have a service that receives 50-100 queries per minute, none of them expensive. It has been running for about 3-4 months without any errors.

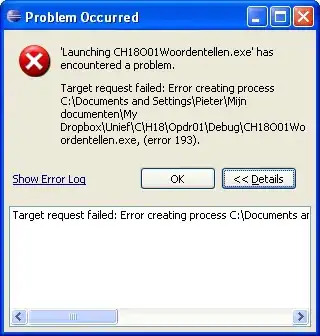

A few days ago it suddenly started to occasionally fail with the error "There is insufficient system memory in resource pool 'default' to run this query." When I investigated, I saw that sqlservr.exe was using ~1.5 GB of RAM and 25% of the CPU (all of one of my four CPUs). When I restart sqlservr.exe, its memory usage starts at ~50 MB and slowly climbs to ~1.5 GB, at which point the apps using it start to crash.

After a bit of research I concluded that the cause was the edition of SQL Server I was using: Express edition, which caps memory and CPU at about those numbers. So I upgraded from SQL Server 2008 R2 Express to SQL Server 2012 Enterprise. When I started the service it used only ~60 MB of memory, and I thought my problems were finally over. Unfortunately, within an hour the same error started occurring again. This time, though, the memory usage shown in Windows Task Manager is still ~60 MB, not exceeding any limit.
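Since Task Manager may not show everything the SQL Server process holds, the numbers could also be cross-checked from inside SQL Server. Below is a minimal sketch, assuming the `sys.dm_os_process_memory` view and the `max server memory (MB)` row in `sys.configurations` are readable on the instance. The helper `kb_columns_to_mb` and the sample row are hypothetical and only illustrate the unit conversion; the row is not measured output.

```python
# T-SQL kept as plain strings; run them with any client (SSMS, sqlcmd, ...).
PROCESS_MEMORY_SQL = """
SELECT physical_memory_in_use_kb,
       locked_page_allocations_kb,
       memory_utilization_percentage
FROM sys.dm_os_process_memory;
"""

MAX_SERVER_MEMORY_SQL = """
SELECT name, value_in_use
FROM sys.configurations
WHERE name = 'max server memory (MB)';
"""


def kb_columns_to_mb(row):
    """Hypothetical helper: convert every '*_kb' column of a result row to MB."""
    converted = {}
    for column, value in row.items():
        if column.endswith("_kb"):
            # Strip the '_kb' suffix and store the value in megabytes.
            converted[column[:-3] + "_mb"] = value / 1024
        else:
            converted[column] = value
    return converted


if __name__ == "__main__":
    # Made-up row for illustration only, not measured output.
    sample_row = {
        "physical_memory_in_use_kb": 1572864,
        "locked_page_allocations_kb": 0,
        "memory_utilization_percentage": 100,
    }
    print(kb_columns_to_mb(sample_row))
```

Comparing these values with what Task Manager shows would at least tell whether the ~60 MB figure reflects what SQL Server itself reports.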

I use Entity Framework as the ORM in a WCF service. Alongside it, I have a SqlQueryNotification setup (Service Broker and so on) for some caching operations.

Am I missing some crucial configuration? Or are 6 GB of memory and 4 CPUs really not enough for this? I doubt that is the case, because the load was the same for 3 months without a single error, and the code has not changed either.