What the other answers are saying is:

- maybe you should make stack-local instances because it shouldn't cost much, and

- maybe you shouldn't do so, because memory allocation can be costly.

These are guesses - educated guesses - but still guesses.

You are the only one who can answer the question, by actually finding out (not guessing) whether those `new` calls take a large enough percentage of wall-clock time to be worth worrying about.

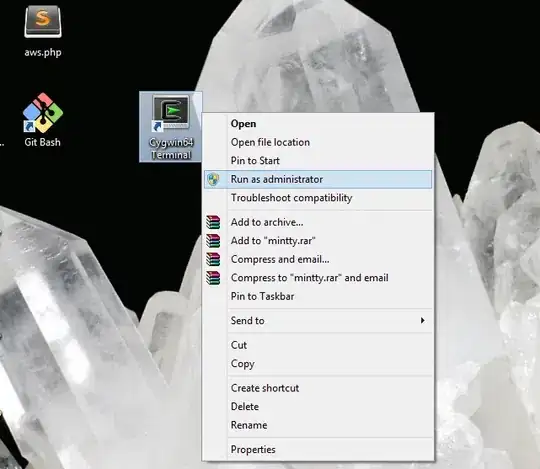

The method I (and many others) rely on for answering that kind of question is random pausing.

The idea is simple.

Suppose that eliminating those `new` calls would save some percentage of the time. Pick a number, like 20%.

That means each time you simply hit the pause button and display the call stack, you have at least a 20% chance of catching a `new` in the act.

So if you do that 20 times, you will catch it roughly 4 times, give or take.
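To put numbers on "give or take", here is a small Python sketch of the arithmetic. It is only an illustration: it assumes each pause is independent and lands in the activity with probability 0.20, and the helper name `prob_at_least` is made up for this example.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """Chance of catching the activity on at least k of n independent pauses."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


n_pauses = 20      # how many times you hit pause
fraction = 0.20    # assumed share of time spent in the activity

expected_hits = n_pauses * fraction                      # 4 on average
p_seen_once = prob_at_least(1, n_pauses, fraction)       # about 0.99
p_seen_twice = prob_at_least(2, n_pauses, fraction)      # about 0.93

print(f"expected hits: {expected_hits:.1f}")
print(f"seen at least once:  {p_seen_once:.3f}")
print(f"seen at least twice: {p_seen_twice:.3f}")
```

Under those assumptions, something costing 20% is very unlikely to hide from 20 pauses, and seeing it on two or more samples is already a strong hint that it is worth fixing.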

If you do that, you will see what's accounting for the time.

- If it's the `new` calls, you will see it.

- If it's something else, you will see it.

You won't know exactly how much it costs, but you don't need to know that.

What you need to know is what the problem is, and that's what it tells you.
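In a compiled program you would do this by hand with the debugger's pause button. Just to make the idea concrete, here is a rough in-process imitation in Python. Everything in it is an assumption for illustration: the function names `allocate_heavy`, `cheap_math`, `workload`, and `take_samples` are hypothetical, and `sys._current_frames()` is a CPython-specific call.

```python
import collections
import random
import sys
import threading
import time


def allocate_heavy():
    # Hypothetical hot spot: lots of small allocations.
    return [bytearray(64) for _ in range(2000)]


def cheap_math():
    # Hypothetical cheap step.
    return sum(i * i for i in range(200))


def workload(stop):
    while not stop.is_set():
        allocate_heavy()
        cheap_math()


def take_samples(n_samples=20):
    """Pause at random moments and record which functions are on the stack."""
    stop = threading.Event()
    worker = threading.Thread(target=workload, args=(stop,), daemon=True)
    worker.start()
    seen = collections.Counter()
    try:
        for _ in range(n_samples):
            time.sleep(random.uniform(0.01, 0.03))  # unpredictable pause point
            frame = sys._current_frames().get(worker.ident)
            names = set()
            while frame is not None:                # walk the call stack
                names.add(frame.f_code.co_name)
                frame = frame.f_back
            seen.update(names)                      # count each function once per sample
    finally:
        stop.set()
        worker.join()
    return seen


if __name__ == "__main__":
    n = 20
    for name, hits in take_samples(n).most_common():
        print(f"{name:20s} on the stack in {hits}/{n} samples")
```

A function that shows up on a large share of the samples is responsible for roughly that share of the time. The exact counts will vary from run to run, which is fine, since the goal is to find the problem and not to measure it precisely.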

ADDED: If you'll bear with me to explain how this kind of performance tuning can go, here's an illustration of a hypothetical situation:

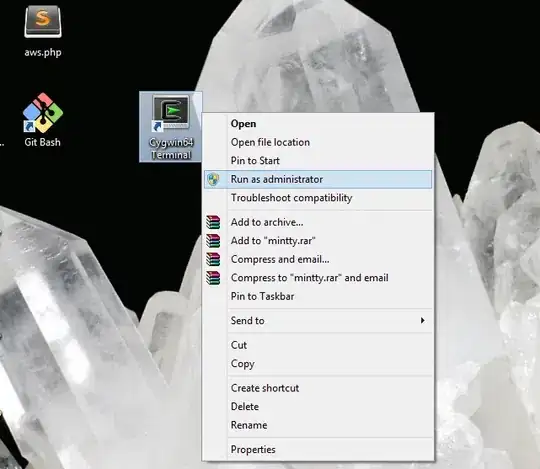

When you take stack samples, you may find a number of things that could be improved. Memory allocation could be one of them, and it might not even be very big: in this case it is (C), taking only 14%.

The samples tell you that something else, namely (A), is taking a lot more time.

So if you fix (A) you get a speedup factor of 1.67x. Not bad.

Now if you repeat the process, it tells you that (B) would save you a lot of time.

So you fix it and (in this example) get another 1.67x, for an overall speedup of 2.78x.

Now you do it again, and you see that the original thing you suspected, memory allocation, is indeed a large fraction of the time.

So you fix it and (in this example) get another 1.67x, for an overall speedup of 4.63x.

Now that is serious speedup.
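The arithmetic behind those numbers can be checked with a short sketch. The fractions 40%, 24%, and 14.4% of the original run time are my assumption, chosen because they reproduce the 1.67x steps and the 14% for (C); the diagram may use slightly different values.

```python
# Assumed cost of each problem as a fraction of the ORIGINAL run time.
costs = {"A": 0.40, "B": 0.24, "C": 0.144}

remaining = 1.0   # run time left, as a fraction of the original
results = []      # (name, share of current run time, step speedup, overall speedup)

for name, cost in costs.items():
    share_now = cost / remaining              # what the samples would show right now
    step = remaining / (remaining - cost)     # speedup from fixing this one problem
    remaining -= cost
    overall = 1.0 / remaining                 # speedup relative to the original
    results.append((name, share_now, step, overall))
    print(f"fix {name}: {share_now:.0%} of current time, "
          f"step {step:.2f}x, overall {overall:.2f}x")
```

Notice that (C) starts out as about 14% of the original time, but by the time (A) and (B) are gone it is 40% of what remains, so it shows up on the samples as clearly as (A) did at the start.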

So the point is 1) keep an open mind about what to speed up and let the diagnostic tell you what to fix, and 2) repeat the process to get multiple speedups. That's how you get real speedup, because things that were small to begin with become a much larger fraction of the time once you've trimmed away other stuff.