I have a huge collection of documents in my DB and I'm wondering how can I run through all the documents and update them, each document with a different value.

-

2It depends on the driver you're using to connect to MongoDB. – Leonid Beschastny Aug 26 '14 at 14:11

-

Im using the mongodb driver – Alex Brodov Aug 26 '14 at 14:15

-

Can you give me some example with the update inside the forEach(), and specify where are you closing the connection to the DB because i had problems with that – Alex Brodov Aug 26 '14 at 14:16

10 Answers

The answer depends on the driver you're using. All MongoDB drivers I know have cursor.forEach() implemented one way or another.

Here are some examples:

node-mongodb-native

collection.find(query).forEach(function(doc) {

// handle

}, function(err) {

// done or error

});

mongojs

db.collection.find(query).forEach(function(err, doc) {

// handle

});

monk

collection.find(query, { stream: true })

.each(function(doc){

// handle doc

})

.error(function(err){

// handle error

})

.success(function(){

// final callback

});

mongoose

collection.find(query).stream()

.on('data', function(doc){

// handle doc

})

.on('error', function(err){

// handle error

})

.on('end', function(){

// final callback

});

Updating documents inside of .forEach callback

The only problem with updating documents inside of .forEach callback is that you have no idea when all documents are updated.

To solve this problem you should use some asynchronous control flow solution. Here are some options:

Here is an example of using async, using its queue feature:

var q = async.queue(function (doc, callback) {

// code for your update

collection.update({

_id: doc._id

}, {

$set: {hi: 'there'}

}, {

w: 1

}, callback);

}, Infinity);

var cursor = collection.find(query);

cursor.each(function(err, doc) {

if (err) throw err;

if (doc) q.push(doc); // dispatching doc to async.queue

});

q.drain = function() {

if (cursor.isClosed()) {

console.log('all items have been processed');

db.close();

}

}

- 50,364

- 10

- 118

- 122

-

Can i use an update function inside that will get doc._id as a query from the for each? – Alex Brodov Aug 26 '14 at 14:22

-

1Yes, you can. But you'll need to wait for all update operations to finish before closing your connection. – Leonid Beschastny Aug 26 '14 at 14:24

-

I've made a few changes and i modified the cursor to db.collection("myCollecion").find(query) I don't understand where are you taking from the query ? On Line 4 you wrote this : _id: doc._id where are you taking from the doc._id , i mean where are you passing it ? It should be in a for loop right? – Alex Brodov Aug 26 '14 at 18:05

-

When i'm running your code my documents are updating but i'm getting and error at the end : TypeError: Cannot read property '_id' of null, what i think that it's not stopping to tu push the docs and then the async.queue is trying to update a null document which is not existing but i've tried to add before the push if(doc!=null) and now it's working without any errors – Alex Brodov Aug 26 '14 at 18:55

-

@user3502786 You're absolutely right, I completely forgot that the last emitted document is always `null`. Edited my answer. – Leonid Beschastny Aug 26 '14 at 19:38

-

@user3502786 as for the `query`, what I posted is just a scratch, it's incomplete in many ways. For example, I assumed that you already established `db` connection and selected `collection` to work with. So, `query` is just any query you would like to use. Simply omit it if you want to process the whole collection. – Leonid Beschastny Aug 26 '14 at 19:43

-

So in the place where you wrote // code for update i can use a request module in order to make a connection to yahoo server and get a value for a specific doc.ticker which i have in every doumentand then pass this value to the update function is it correct? – Alex Brodov Aug 26 '14 at 19:50

-

-

The async example is a nice solution. However, I think without some tweaks you risk of running out of memory (if the dataset is big, and items are added to the queue more quickly than they are processed and removed from the queue). Not sure how you could combat this (there doesn't seem to be a way to 'pause' adding to the queue until items have been processed). Any ideas? – UpTheCreek Feb 29 '16 at 10:07

-

1

-

1For what it worth, now the node-mongodb-native has an API called forEach that takes a [callback](http://mongodb.github.io/node-mongodb-native/2.2/api/Cursor.html#forEach). – Richard Feb 06 '17 at 09:38

-

6Just a small note for mongoose - the `.stream` method is deprecated and now we should use `.cursor` – Alex K Jul 07 '17 at 12:41

Using the mongodb driver, and modern NodeJS with async/await, a good solution is to use next():

const collection = db.collection('things')

const cursor = collection.find({

bla: 42 // find all things where bla is 42

});

let document;

while ((document = await cursor.next())) {

await collection.findOneAndUpdate({

_id: document._id

}, {

$set: {

blu: 43

}

});

}

This results in only one document at a time being required in memory, as opposed to e.g. the accepted answer, where many documents get sucked into memory, before processing of the documents starts. In cases of "huge collections" (as per the question) this may be important.

If documents are large, this can be improved further by using a projection, so that only those fields of documents that are required are fetched from the database.

- 143,271

- 52

- 317

- 404

- 957

- 1

- 8

- 19

-

3@ZachSmith: this is correct, but very slow. It can be sped up 10X or more by using [bulkWrite](https://stackoverflow.com/questions/25507866/how-can-i-use-a-cursor-foreach-in-mongodb-using-node-js/56333962#56333962). – Dan Dascalescu May 28 '19 at 01:17

-

1there is [hasNext](https://mongodb.github.io/node-mongodb-native/3.2/api/Cursor.html#hasNext) method. and it should be used instead of `while(document=....)` pattern – Sang Jul 12 '19 at 11:06

-

Why do you state this will only load 1 doc into memory? Don't you need to add to the find call tge batchSize option and assign it the value 1 for it to be that way? – Ziv Glazer Sep 16 '20 at 21:25

-

1@ZivGlazer that's not what I'm saying: The code presented *requires* only one doc in memory at any time. The batch size you are referring to determines how many documents get fetched in one request from the database, and that determines how many docs will reside in memory at any time, so you are right in that sense; but a batch size of 1 is probably not a good idea... finding a good batch size is a different question :) – chris6953 Sep 17 '20 at 14:24

-

I agree, I wanted to do a similar process of reading a big collection with big documents and updating each one I read, I end up implementing something like this: – Ziv Glazer Sep 18 '20 at 15:05

-

static async concurrentCursorBatchProcessing(cursor, batchSize, callback) { let doc; const docsBatch = []; while ((doc = await cursor.next())) { docsBatch.push(doc); if (docsBatch.length >= batchSize) { await PromiseUtils.concurrentPromiseAll(docsBatch, async (currDoc) => { return callback(currDoc); }); // Emptying the batch array docsBatch.splice(0, docsBatch.length); } } – Ziv Glazer Sep 18 '20 at 15:05

-

// Checking if there is a last batch remaining since it was small than batchSize if (docsBatch.length > 0) { await PromiseUtils.concurrentPromiseAll(docsBatch, async (currDoc) => { return callback(currDoc); }); } – Ziv Glazer Sep 18 '20 at 15:06

-

Where batchSize should be given the same value as the batchSize option when using the "find" in the cursor options. – Ziv Glazer Sep 18 '20 at 15:06

-

I added my code in a more readable state as an answer to this question, should be easier to read it there ^^ – Ziv Glazer Sep 18 '20 at 15:25

var MongoClient = require('mongodb').MongoClient,

assert = require('assert');

MongoClient.connect('mongodb://localhost:27017/crunchbase', function(err, db) {

assert.equal(err, null);

console.log("Successfully connected to MongoDB.");

var query = {

"category_code": "biotech"

};

db.collection('companies').find(query).toArray(function(err, docs) {

assert.equal(err, null);

assert.notEqual(docs.length, 0);

docs.forEach(function(doc) {

console.log(doc.name + " is a " + doc.category_code + " company.");

});

db.close();

});

});

Notice that the call .toArray is making the application to fetch the entire dataset.

var MongoClient = require('mongodb').MongoClient,

assert = require('assert');

MongoClient.connect('mongodb://localhost:27017/crunchbase', function(err, db) {

assert.equal(err, null);

console.log("Successfully connected to MongoDB.");

var query = {

"category_code": "biotech"

};

var cursor = db.collection('companies').find(query);

function(doc) {

cursor.forEach(

console.log(doc.name + " is a " + doc.category_code + " company.");

},

function(err) {

assert.equal(err, null);

return db.close();

}

);

});

Notice that the cursor returned by the find() is assigned to var cursor. With this approach, instead of fetching all data in memory and consuming data at once, we're streaming the data to our application. find() can create a cursor immediately because it doesn't actually make a request to the database until we try to use some of the documents it will provide. The point of cursor is to describe our query. The 2nd parameter to cursor.forEach shows what to do when the driver gets exhausted or an error occurs.

In the initial version of the above code, it was toArray() which forced the database call. It meant we needed ALL the documents and wanted them to be in an array.

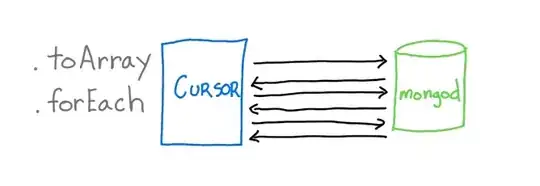

Also, MongoDB returns data in batch format. The image below shows, requests from cursors (from application) to MongoDB

forEach is better than toArray because we can process documents as they come in until we reach the end. Contrast it with toArray - where we wait for ALL the documents to be retrieved and the entire array is built. This means we're not getting any advantage from the fact that the driver and the database system are working together to batch results to your application. Batching is meant to provide efficiency in terms of memory overhead and the execution time. Take advantage of it, if you can in your application.

- 2,442

- 2

- 21

- 17

- 28,977

- 24

- 140

- 219

-

2cursor causes extra problems in handling data on client side.. the array is easier to consume due to the entire async nature of the system.. – DragonFire May 20 '20 at 07:11

None of the previous answers mentions batching the updates. That makes them extremely slow - tens or hundreds of times slower than a solution using bulkWrite.

Let's say you want to double the value of a field in each document. Here's how to do that fast and with fixed memory consumption:

// Double the value of the 'foo' field in all documents

let bulkWrites = [];

const bulkDocumentsSize = 100; // how many documents to write at once

let i = 0;

db.collection.find({ ... }).forEach(doc => {

i++;

// Update the document...

doc.foo = doc.foo * 2;

// Add the update to an array of bulk operations to execute later

bulkWrites.push({

replaceOne: {

filter: { _id: doc._id },

replacement: doc,

},

});

// Update the documents and log progress every `bulkDocumentsSize` documents

if (i % bulkDocumentsSize === 0) {

db.collection.bulkWrite(bulkWrites);

bulkWrites = [];

print(`Updated ${i} documents`);

}

});

// Flush the last <100 bulk writes

db.collection.bulkWrite(bulkWrites);

- 143,271

- 52

- 317

- 404

And here is an example of using a Mongoose cursor async with promises:

new Promise(function (resolve, reject) {

collection.find(query).cursor()

.on('data', function(doc) {

// ...

})

.on('error', reject)

.on('end', resolve);

})

.then(function () {

// ...

});

Reference:

- 18,848

- 11

- 103

- 80

-

If you have a sizable list of documents won't this exhaust memory? (ie: 10M docs) – chovy Dec 14 '16 at 20:58

-

@chovy in theory it shouldn't, this is why you use a cursor vs loading everything in an array in the first place. The promise then gets fulfilled only after the cursor ends or err. If you do have such a db then it shouldn't be too difficult to test this, I'd be curious myself. – Wtower Dec 15 '16 at 05:54

-

you can use limit to avoid getting 10M docs, I don't know of any human who can read 10M docs at once, or a screen which can render them visually on one page (mobile, laptop or a desktop - forget about watches – DragonFire May 20 '20 at 07:14

You can now use (in an async function, of course):

for await (let doc of collection.find(query)) {

await updateDoc(doc);

}

// all done

which nicely serializes all updates.

- 1,414

- 1

- 14

- 20

Leonid's answer is great, but I want to reinforce the importance of using async/promises and to give a different solution with a promises example.

The simplest solution to this problem is to loop forEach document and call an update. Usually, you don't need close the db connection after each request, but if you do need to close the connection, be careful. You must just close it if you are sure that all updates have finished executing.

A common mistake here is to call db.close() after all updates are dispatched without knowing if they have completed. If you do that, you'll get errors.

Wrong implementation:

collection.find(query).each(function(err, doc) {

if (err) throw err;

if (doc) {

collection.update(query, update, function(err, updated) {

// handle

});

}

else {

db.close(); // if there is any pending update, it will throw an error there

}

});

However, as db.close() is also an async operation (its signature have a callback option) you may be lucky and this code can finish without errors. It may work only when you need to update just a few docs in a small collection (so, don't try).

Correct solution:

As a solution with async was already proposed by Leonid, below follows a solution using Q promises.

var Q = require('q');

var client = require('mongodb').MongoClient;

var url = 'mongodb://localhost:27017/test';

client.connect(url, function(err, db) {

if (err) throw err;

var promises = [];

var query = {}; // select all docs

var collection = db.collection('demo');

var cursor = collection.find(query);

// read all docs

cursor.each(function(err, doc) {

if (err) throw err;

if (doc) {

// create a promise to update the doc

var query = doc;

var update = { $set: {hi: 'there'} };

var promise =

Q.npost(collection, 'update', [query, update])

.then(function(updated){

console.log('Updated: ' + updated);

});

promises.push(promise);

} else {

// close the connection after executing all promises

Q.all(promises)

.then(function() {

if (cursor.isClosed()) {

console.log('all items have been processed');

db.close();

}

})

.fail(console.error);

}

});

});

-

When I try this I get the error `doc is not defined` for the line `var promises = calls.map(myUpdateFunction(doc));` – Philip O'Brien Oct 21 '15 at 13:55

-

1@robocode, ty! I've fixed the error and the example is working fine now. – Zanon Oct 23 '15 at 01:11

-

1This approach requires you to load the whole dataset into memory, before processing the results. This isn't a practical approach for large datasets (you will run out of memory). You might as well just us .toArray(), which is much simpler (but also requires loading whole dataset of course). – UpTheCreek Feb 29 '16 at 10:00

-

@UpTheCreek, thanks for your feedback. I've updated my answer to store only the promise object instead of the doc object. It uses much less memory because the promises object is a small JSON that stores the state. – Zanon Mar 20 '16 at 23:54

-

@UpTheCreek, for an even better solution regarding the memory usage, you would need to execute the query two times: first with a "count" and second to get the cursor. After each update is completed, you would increment a variable and only stops the program (and close the db connection) after this variable reach the total count of results. – Zanon Mar 20 '16 at 23:58

-

The point of queue in the above example is so you don't exhaust memory, your example using Promises loads all docs into memory. – chovy Dec 14 '16 at 04:50

-

@chovy, I'm not saving the documents in memory. The promise will receive the doc, dispatch an update request and wait for the result to log it. After dispatching the update, the doc is not used again and the memory can be released. I Would appreciate if you could improve this answer or post another one that is more memory friendly. – Zanon Dec 14 '16 at 08:49

-

You are returning a promise for each doc. How will that work with 10 million docs? – chovy Dec 14 '16 at 08:52

-

@chovy, it will be 10 million promises handlers in memory, but not 10 million docs. The memory size which is used is much smaller. I know that this solution is not perfect, that's why I would be glad to improve the answer with a better alternative. – Zanon Dec 14 '16 at 11:13

-

https://stackoverflow.com/questions/49685529/how-to-do-find-using-node-and-mongodb .help me out – its me Apr 06 '18 at 05:26

The node-mongodb-native now supports a endCallback parameter to cursor.forEach as for one to handle the event AFTER the whole iteration, refer to the official document for details http://mongodb.github.io/node-mongodb-native/2.2/api/Cursor.html#forEach.

Also note that .each is deprecated in the nodejs native driver now.

- 41

- 2

-

Can you provide an example of firing a callback after all results have been processed? – chovy Dec 14 '16 at 05:04

-

@chovy in `forEach(iteratorCallback, endCallback)` `endCallback(error)` is called when there is no more data (with `error` is undefined). – Tien Do May 09 '19 at 04:55

let's assume that we have the below MongoDB data in place.

Database name: users

Collection name: jobs

===========================

Documents

{ "_id" : ObjectId("1"), "job" : "Security", "name" : "Jack", "age" : 35 }

{ "_id" : ObjectId("2"), "job" : "Development", "name" : "Tito" }

{ "_id" : ObjectId("3"), "job" : "Design", "name" : "Ben", "age" : 45}

{ "_id" : ObjectId("4"), "job" : "Programming", "name" : "John", "age" : 25 }

{ "_id" : ObjectId("5"), "job" : "IT", "name" : "ricko", "age" : 45 }

==========================

This code:

var MongoClient = require('mongodb').MongoClient;

var dbURL = 'mongodb://localhost/users';

MongoClient.connect(dbURL, (err, db) => {

if (err) {

throw err;

} else {

console.log('Connection successful');

var dataBase = db.db();

// loop forEach

dataBase.collection('jobs').find().forEach(function(myDoc){

console.log('There is a job called :'+ myDoc.job +'in Database')})

});

- 7,394

- 40

- 45

- 64

- 11

- 1

I looked for a solution with good performance and I end up creating a mix of what I found which I think works good:

/**

* This method will read the documents from the cursor in batches and invoke the callback

* for each batch in parallel.

* IT IS VERY RECOMMENDED TO CREATE THE CURSOR TO AN OPTION OF BATCH SIZE THAT WILL MATCH

* THE VALUE OF batchSize. This way the performance benefits are maxed out since

* the mongo instance will send into our process memory the same number of documents

* that we handle in concurrent each time, so no memory space is wasted

* and also the memory usage is limited.

*

* Example of usage:

* const cursor = await collection.aggregate([

{...}, ...],

{

cursor: {batchSize: BATCH_SIZE} // Limiting memory use

});

DbUtil.concurrentCursorBatchProcessing(cursor, BATCH_SIZE, async (doc) => ...)

* @param cursor - A cursor to batch process on.

* We can get this from our collection.js API by either using aggregateCursor/findCursor

* @param batchSize - The batch size, should match the batchSize of the cursor option.

* @param callback - Callback that should be async, will be called in parallel for each batch.

* @return {Promise<void>}

*/

static async concurrentCursorBatchProcessing(cursor, batchSize, callback) {

let doc;

const docsBatch = [];

while ((doc = await cursor.next())) {

docsBatch.push(doc);

if (docsBatch.length >= batchSize) {

await PromiseUtils.concurrentPromiseAll(docsBatch, async (currDoc) => {

return callback(currDoc);

});

// Emptying the batch array

docsBatch.splice(0, docsBatch.length);

}

}

// Checking if there is a last batch remaining since it was small than batchSize

if (docsBatch.length > 0) {

await PromiseUtils.concurrentPromiseAll(docsBatch, async (currDoc) => {

return callback(currDoc);

});

}

}

An example of usage for reading many big documents and updating them:

const cursor = await collection.aggregate([

{

...

}

], {

cursor: {batchSize: BATCH_SIZE}, // Limiting memory use

allowDiskUse: true

});

const bulkUpdates = [];

await DbUtil.concurrentCursorBatchProcessing(cursor, BATCH_SIZE, async (doc: any) => {

const update: any = {

updateOne: {

filter: {

...

},

update: {

...

}

}

};

bulkUpdates.push(update);

// Updating if we read too many docs to clear space in memory

await this.bulkWriteIfNeeded(bulkUpdates, collection);

});

// Making sure we updated everything

await this.bulkWriteIfNeeded(bulkUpdates, collection, true);

...

private async bulkWriteParametersIfNeeded(

bulkUpdates: any[], collection: any,

forceUpdate = false, flushBatchSize) {

if (bulkUpdates.length >= flushBatchSize || forceUpdate) {

// concurrentPromiseChunked is a method that loops over an array in a concurrent way using lodash.chunk and Promise.map

await PromiseUtils.concurrentPromiseChunked(bulkUpsertParameters, (upsertChunk: any) => {

return techniquesParametersCollection.bulkWrite(upsertChunk);

});

// Emptying the array

bulkUpsertParameters.splice(0, bulkUpsertParameters.length);

}

}

- 786

- 2

- 8

- 24