I wrote a Hello World console application in C#:

```csharp
using System;

class Program
{
    static void Main(string[] args)
    {
        Console.WriteLine("Hello World");
        Console.Read();
    }
}
```

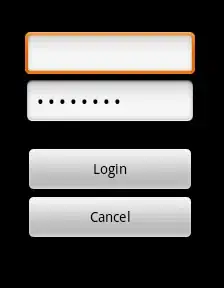

When I launched it, it used this much memory:

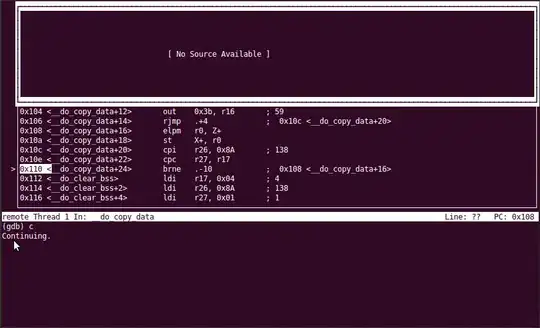

Then I created a dump file of this process:

After the dump was created, the process used this much memory:

As you can see, the working set grew substantially, which surprised me.

A few other interesting things about this increase:

- After the working set grew to 46 MB, it never dropped back to 7 MB.

- The dump file is 46 MB, not 7 MB.

- The private working set increased from 1.83 MB to 2.29 MB.
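To compare the numbers before and after the dump without relying on screenshots, the process can log its own counters. This is a minimal sketch, assuming .NET on Windows. `Process.WorkingSet64` and `Process.PrivateMemorySize64` are real `System.Diagnostics.Process` properties, but `PrivateMemorySize64` reports private bytes (committed private memory), which is not the same counter as Task Manager's "private working set", so the values will not match the screenshots exactly. The `MemoryReport` class and its `ToMegabytes` and `Print` helpers are hypothetical names used only for illustration.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper class for illustration; not part of any library.
class MemoryReport
{
    // Converts a byte count to megabytes (1 MB = 1024 * 1024 bytes).
    public static double ToMegabytes(long bytes) => bytes / (1024.0 * 1024.0);

    // Prints the current process's working set and private bytes.
    static void Print(string label)
    {
        // GetCurrentProcess() returns a fresh Process object, so the counters
        // are read now rather than reused from an earlier snapshot.
        using (Process self = Process.GetCurrentProcess())
        {
            Console.WriteLine(
                $"{label}: working set = {ToMegabytes(self.WorkingSet64):F2} MB, " +
                $"private bytes = {ToMegabytes(self.PrivateMemorySize64):F2} MB");
        }
    }

    static void Main(string[] args)
    {
        Console.WriteLine("Hello World");
        Print("Before dump");

        // Create the dump from outside the process (e.g. Task Manager), then press Enter.
        Console.ReadLine();
        Print("After dump");
    }
}
```

Run it, create the dump while it waits at `ReadLine`, then press Enter; both counters are printed before and after the dump so the change can be read directly.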

My questions are:

1. Why does creating a dump increase the process's memory usage?

2. In the past, when a tester reported a memory leak and sent me a dump file, I treated the dump file size as the amount of memory the target process used in the lab environment. Judging from the simple example above, was I always wrong?

3. I am very curious about what is inside the 46 MB dump file (in other words, why this Hello World application takes 46 MB). I am familiar with SOS commands such as !DumpHeap and !eeheap, but they do not account for everything in the 46 MB file. Could anyone share useful tools, links, or instructions?

Thanks very much for any help!