My application contains several latency-critical threads that "spin", i.e. never block. Such a thread is expected to occupy 100% of one CPU core. However, it seems that modern operating systems often migrate threads from one core to another. For example, with this Windows code:

```cpp
void Processor::ConnectionThread()
{
    // Busy-wait: the loop never blocks, so it should keep one core fully busy
    while (work)
    {
        Iterate();
    }
}
```

I do not see any core at 100% in Task Manager, and the overall system load is 36-40%.

But if I change it to this:

```cpp
void Processor::ConnectionThread()
{
    // Mask 2 has only bit 1 set, so this thread may run only on CPU 1
    SetThreadAffinityMask(GetCurrentThread(), 2);

    while (work)
    {
        Iterate();
    }
}
```

Then I do see one of the CPU cores at 100%, and the overall system load drops to 34-36%.

Does this mean I should generally use SetThreadAffinityMask for "spin" threads? Did adding SetThreadAffinityMask actually improve latency in this case? What else should I do to improve latency for "spin" threads?

I'm in the middle of porting my application to Linux, so if it matters, this question is mainly about Linux.

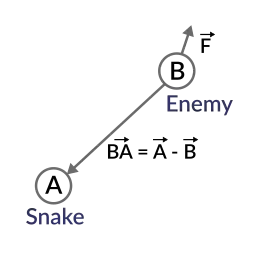

Update: I found this slide, which suggests that binding a busy-waiting thread to a CPU may help: